Chapter 4 The Family of Autoregressive Moving Average Models

“Essentially, all models are wrong, but some are useful”, George Box

In this chapter we introduce a class of time series models that is considerably flexible and among the most commonly used to describe stationary time series. This class is represented by the Seasonal AutoRegressive Integrated Moving Average (SARIMA) models which, among others, combine and include the autoregressive and moving average models seen in the previous chapter. To introduce this class of models, we start by describing a sub-class called AutoRegressive Moving Average (ARMA) models which represent the backbone on which the SARIMA class is built. The importance of ARMA models resides in their flexibility as well as their capacity of describing (or closely approximating) almost all the features of a stationary time series. The autoregressive parts of these models describe how consecutive observations in time influence each other while the moving average parts capture some possible unobserved shocks thereby allowing to model different phenomena which can be observed in various fields going from biology to finance.

With this premise, the first part of this chapter introduces and explains the class of ARMA models in the following manner. First of all we will discuss the class of linear processes, which ARMA models belong to, and we will then proceed to a detailed description of autoregressive models in which we review their definition, explain their properties, introduce the main estimation methods for their parameters and highlight the diagnostic tools which can help understand if the estimated models appear to be appropriate or sufficient to well describe the observed time series. Once this is done, we will then use most of the results given for the autoregressive models to further describe and discuss moving average models, for which we underline the property of invertibility, and finally the ARMA models. Indeed, the properties and estimation methods for the latter class are directly inherited from the discussions on the autoregressive and moving average models.

The second part of this chapter introduces the general class of SARIMA models, passing through the class of ARIMA models. These models allow to apply the ARMA modeling framework also to time series that have particular non-stationary components to them such as, for example, linear and/or seasonal trends. Extending ARMA modeling to these cases allows SARIMA models to be an extremely flexible class of models that can be used to describe a wide range of phenomena.

4.1 Linear Processes

In order to discuss the classes of models mentioned above, we first present the class of linear processes which underlie many of the most common time series models.

Definition 4.1 (Linear Process) A time series, \((X_t)\), is defined to be a linear process if it can be expressed as a linear combination of white noise as follows:

\[{X_t} = \mu + \sum\limits_{j = - \infty }^\infty {{\psi _j}{W_{t - j}}} \]

where \(W_t \sim \mathcal{N}(0, \sigma^2)\) and \(\sum\limits_{j = - \infty }^\infty {\left| {{\psi _j}} \right|} < \infty\).

Note, the latter assumption is required to ensure that the series has a limit. Furthermore, the set of coefficients \[{( {\psi _j}) _{j = - \infty , \cdots ,\infty }}\] can be viewed as a linear filter. These coefficients do not have to be all equal nor symmetric as later examples will show. Generally, the properties of a linear process related to mean and variance are given by:

\[\begin{aligned} \mu_{X} &= \mu \\ \gamma_{X}(h) &= \sigma _W^2\sum\limits_{j = - \infty }^\infty {{\psi _j}{\psi _{h + j}}} \end{aligned}\]

The latter is derived from

\[\begin{aligned} \gamma \left( h \right) &= Cov\left( {{x_t},{x_{t + h}}} \right) \\ &= Cov\left( {\mu + \sum\limits_{j = - \infty }^\infty {{\psi _j}{w_{t - j}}} ,\mu + \sum\limits_{j = - \infty }^\infty {{\psi _j}{w_{t + h - j}}} } \right) \\ &= Cov\left( {\sum\limits_{j = - \infty }^\infty {{\psi _j}{w_{t - j}}} ,\sum\limits_{j = - \infty }^\infty {{\psi _j}{w_{t + h - j}}} } \right) \\ &= \sum\limits_{j = - \infty }^\infty {{\psi _j}{\psi _{j + h}}Cov\left( {{w_{t - j}},{w_{t - j}}} \right)} \\ &= \sigma _w^2\sum\limits_{j = - \infty }^\infty {{\psi _j}{\psi _{j + h}}} \\ \end{aligned} \]

Within the above derivation, the key is to realize that \(Cov\left( {{w_{t - j}},{w_{t + h - j}}} \right) = 0\) if \(t - j \ne t + h - j\).

Lastly, another convenient way to formalize the definition of a linear process is through the use of the backshift operator (or lag operator) which is itself defined as follows:

\[B\,X_t = X_{t-1}.\]

The properties of the backshift operator allow us to create composite functions of the type

\[B^2 \, X_t = B (B \, X_t) = B \, X_{t-1} = X_{t-2}\] which allows to generalize as follows

\[B^k \, X_t = X_{t-k}.\] Moreover, we can apply the inverse operator to it (i.e. \(B^{-1} \, B = 1\)) thereby allowing us to have, for example:

\[X_t = B^{-1} \, B X_t = B^{-1} X_{t-1}\]

Having defined the backshift operator, we can now provide an alternative definition of a linear process as follows:

\[{X_t} = \mu + \psi \left( B \right){W_t}\]

where \(\psi ( B )\) is a polynomial function in \(B\) whose coefficients are given by the linear filters \((\psi_j)\) (we’ll describe these polynomials further on).

Example 4.2 (Linear Process of White Noise) The white noise process \((X_t)\), defined in 2.4.1, can be expressed as a linear process as follows:

\[\psi _j = \begin{cases} 1 , &\mbox{ if } j = 0\\ 0 , &\mbox{ if } |j| \ge 1 \end{cases}.\]

and \(\mu = 0\).

Therefore, \(X_t = W_t\), where \(W_t \sim WN(0, \sigma^2_W)\)

Example 4.3 (Linear Process of Moving Average Order 1) Similarly, consider \((X_t)\) to be a MA(1) process, given by 2.4.4. The process can be expressed linearly through the following filters:

\[\psi _j = \begin{cases} 1, &\mbox{ if } j = 0\\ \theta , &\mbox{ if } j = 1 \\ 0, &\mbox{ if } j \ge 2 \end{cases}.\]

and \(\mu = 0\).

Thus, we have: \(X_t = W_t + \theta W_{t-1}\)

Example 4.4 (Linear Process and Symmetric Moving Average) Consider a symmetric moving average given by:

\[{X_t} = \frac{1}{{2q + 1}}\sum\limits_{j = - q}^q {{W_{t + j}}} \]

Thus, \((X_t)\) is defined for \(q + 1 \le t \le n-q\). The above process would be a linear process since:

\[\psi _j = \begin{cases} \frac{1}{{2q + 1}} , &\mbox{ if } -q \le j \le q\\ 0 , &\mbox{ if } |j| > q \end{cases}.\]

and \(\mu = 0\).

In practice, if \(q = 1\), we would have:

\[{X_t} = \frac{1}{3}\left( {{W_{t - 1}} + {W_t} + {W_{t + 1}}} \right)\]

Example 4.5 (Autoregressive Process of Order 1) If \(\left\{X_t\right\}\) follows an AR(1) model defined in 2.4.3, the linear filters are a function of the time lag:

\[\psi _j = \begin{cases} \phi^j , &\mbox{ if } j \ge 0\\ 0 , &\mbox{ if } j < 0 \end{cases}.\]

and \(\mu = 0\). We would require the condition that \(\left| \phi \right| < 1\) in order to respect the condition on the filters (i.e. \(\sum\limits_{j = - \infty }^\infty {\left| {{\psi _j}} \right|} < \infty\)).4.2 Autoregressive Models - AR(p)

The class of autoregressive models is based on the idea that previous values in the time series are needed to explain current values in the series. For this class of models, we assume that the \(p\) previous observations are needed for this purpose and we therefore denote this class as AR(\(p\)). In the previous chapter, the model we introduced was an AR(1) in which only the immediately previous observation is needed to explain the following one and therefore represents a particular model which is part of the more general class of AR(\(p\)) models.

As earlier in this book, we will assume that the expectation of the process \(({X_t})\), as well as that of the following ones in this chapter, is zero. The reason for this simplification is that if \(\mathbb{E} [ X_t ] = \mu\), we can define an AR process around \(\mu\) as follows:

\[X_t - \mu = \sum_{i = 1}^p \phi_i \left(X_{t-i} - \mu \right) + W_t,\]

which is equivalent to

\[X_t = \mu^{\star} + \sum_{i = 1}^p \phi_i X_{t-i} + W_t,\]

where \(\mu^{\star} = \mu (1 - \sum_{i = 1}^p \phi_i)\). Therefore, to simplify the notation we will generally consider only zero mean processes, since adding means (as well as other deterministic trends) is easy.

A useful way of representing AR(\(p\)) processes is through the backshift operator introduced in the previous section and is as follows

\[\begin{aligned} {X_t} &= {\phi_1}{X_{t - 1}} + ... + {\phi_p}{X_{t - p}} + {W_t} \\ &= {\phi_1}B{X_t} + ... + {\phi_p}B^p{X_t} + {W_t} \\ &= ({\phi_1}B + ... + {\phi_p}B^p){X_t} + {W_t} \\ \end{aligned},\]

which finally yields

\[(1 - {\phi _1}B - ... - {\phi_p}B^p){X_t} = {W_t},\]

which, in abbreviated form, can be expressed as

\[\phi(B){X_t} = W_t.\]

We will see that \(\phi(B)\) is important to establish the stationarity of these processes and is called the autoregressive operator. Moreover, this quantity is closely related to another important property of AR(p) processes called causality. Before formally defining this new property we consider the following example which provides an intuitive illustration of its importance.

Example: Consider a classical AR(1) model with \(|\phi| > 1\). Such a model could be expressed as

\[X_t = \phi^{-1} X_{t+1} - \phi^{-1} W_t = \phi^{-k} X_{t+k} - \sum_{i = 1}^{k-1} \phi^{-i} W_{t+i}.\]

Since \(|\phi| > 1\), we obtain

\[X_t = - \sum_{j = 1}^{\infty} \phi^{-j} W_{t+j},\]

which is a linear process and therefore is stationary. Unfortunately, such a model is useless because we need the future to predict the future. These processes are called non-causal.

4.2.1 Properties of AR(p) models

In this section we will describe the main property of the AR(p) model which has already been mentioned in the previous paragraphs and therefore let us now introduce the property of causality in a more formal manner.

Definition: An AR(p) model is causal if the time series \((X_t)_{-\infty}^{\infty}\) can be written as a one-sided linear process: \[\begin{equation} X_t = \sum_{j = 0}^{\infty} \psi_j W_{t-j} = \frac{1}{\phi(B)} W_t = \psi(B) W_t, \tag{4.1} \end{equation}\]where \(\phi(B) = \sum_{j = 0}^{\infty} \phi_j B^j\), and \(\sum_{j=0}^{\infty}|\phi_j| < \infty\) and setting \(\phi_0 = 1\).

As discussed earlier this condition implies that only the past values of the time series can explain the future values of it and not viceversa. Moreover, given the expression of the linear filters given by \[\frac{1}{\phi(B)}\] it is obvious that a solution exists only when \(\phi(B) = \sum_{j = 0}^{\infty} \phi_j B^j \neq 0\) (thereby implying causality). A condition for this to be respected is for the roots of \(\phi(B) = 0\) to lie outside the unit circle.

Example 4.6 (Transform an AR(2) into a Linear Process) Consider an AR(2) process \[X_t = 1.3 X_{t-1} - 0.4 X_{t-2} + W_t,\] which we would like to transform into a linear process. This can be done using the following approach:

Step 1: The autoregressive operator of this model can be expressed as \[ \phi(B) = 1-1.3B+0.4B^2 = (1-0.5B)(1-0.8B), \] and has roots 2 and 1.25, both \(>1\). Thus, we should be able to convert it into a linear process.

Step 2: We know that if an AR(p) process has all its roots outside the unit circle, then we can write \(X_t = \frac{1}{\phi(B)} W_t\). By applying the partial fractions trick, we can inverse the autoregressive operator \(\phi(B)\) as follows: \[ \begin{aligned} \phi^{-1}(B) &= \frac{1}{(1-0.5B)(1-0.8B)} = \frac{c_1}{(1-0.5B)} + \frac{c_2}{(1-0.8B)} \\ &= \frac{c_2(1-0.5B) + c_1(1-0.8B)}{(1-0.5B)(1-0.8B)} = \frac{(c_1 + c_2)-(0.8c_1+0.5c_2)B}{(1-0.5B)(1-0.8B)}. \end{aligned} \]

To solve for \(c_1\) and \(c_2\): \[ \begin{cases} c_1 + c_2 &=1\\ 0.8c_1+0.5c_2 &=0 \end{cases} \to \begin{cases} c_1 &= -5/3\\ c_2 &= 8/3. \end{cases} \]

So we obtain \[ \phi^{-1}(B) = \frac{-5}{3(1-0.5B)} + \frac{8}{3(1-0.8B)}. \]

- Step 3: Using the Geometric series, i.e. \(a\sum_{j=0}^{\infty} r^j = \frac{a}{1-r}\) if \(|r| <1\), we have \[ \begin{cases} \frac{-5}{3(1-0.5B)} = -\frac{5}{3} \sum_{j=0}^\infty 0.5^j B^j, &\mbox{ if } |B| < 2 \\ \frac{8}{3(1-0.8B)} = \frac{8}{3} \sum_{j=0}^\infty 0.8^j B^j, &\mbox{ if } |B| < 1.25. \end{cases} \]

So we can express \(\phi^{-1}(B)\) as \[ \phi^{-1}(B) = \sum_{j=0}^\infty \Big[ -\frac{5}{3} (0.5)^j + \frac{8}{3} (0.8)^j \Big] B^j, \;\;\; \text{if } |B|<1.25. \]

- Step 4: Finally, we obtain \[ \begin{aligned} X_t &= \phi(B)^{-1} W_t = \sum_{j=0}^\infty \Big[ -\frac{5}{3} (0.5)^j + \frac{8}{3} (0.8)^j \Big] B^j W_t \\ &= \sum_{j=0}^\infty \Big[ -\frac{5}{3} (0.5)^j + \frac{8}{3} (0.8)^j \Big] W_{t-j}, \end{aligned} \] which verifies that the AR(2) is causal, and therefore is stationary.

Example 4.7 (Causal Conditions for an AR(2) Process) We already know that an AR(1) is causal with the simple condition \(|\phi_1|<1\). It seems natural to believe that an AR(2) should be causal (and therefore stationary) with the condition that \(|\phi_i| <1, \; i=1,2\). However, this is actually not the case as we illustrate below.

We can express an AR(2) process as \[ X_t = \phi_1 X_{t-1} + \phi_2 X_{t-2} + W_t = \phi_1 BX_t + \phi_2 B^2 X_t + W_t, \] thereby delivering the following autoregressive operator: \[ \phi(B) = 1-\phi_1 B - \phi_2 B^2 = \Big( 1-\frac{B}{\lambda_1} \Big) \Big( 1-\frac{B}{\lambda_2} \Big) \] where \(\lambda_1\) and \(\lambda_2\) are the roots of \(\phi(B)\) such that \[ \begin{aligned} \phi_1 &= \frac{1}{\lambda_1} + \frac{1}{\lambda_2}, \\ \phi_2 &= - \frac{1}{\lambda_1} \frac{1}{\lambda_2}. \end{aligned} \]

That is, \[\begin{aligned} \lambda_1 &= \frac{\phi_1 + \sqrt{\phi_1^2 + 4\phi_2}}{-2\phi_2}, \\ \lambda_2 &= \frac{\phi_1 - \sqrt{\phi_1^2 + 4\phi_2}}{-2\phi_2}. \end{aligned} \]

In order to ensure the causality of the model, we need the roots of \(\phi(B)\), i.e. \(\lambda_1\) and \(\lambda_2\), to lie outside the unit circle.

\[ \begin{cases} |\lambda_1| &> 1 \\ |\lambda_2| &> 1, \end{cases} \] if and only if \[ \begin{cases} \phi_1 + \phi_2 &< 1 \\ \phi_2 - \phi_1 &< 1 \\ |\phi_2| &<1. \end{cases} \]

We can show the if part of the statement as follows: \[ \begin{aligned} & \phi_1 + \phi_2 = \frac{1}{\lambda_1} + \frac{1}{\lambda_2} - \frac{1}{\lambda_1 \lambda_2} = \frac{1}{\lambda_1} \Big(1-\frac{1}{\lambda_2} \Big) + \frac{1}{\lambda_2} < 1 - \frac{1}{\lambda_2} + \frac{1}{\lambda_2} = 1 \;\; \text{since } 1-\frac{1}{\lambda_2} > 0, \\ & \phi_2 - \phi_1 = -\frac{1}{\lambda_1 \lambda_2} - \frac{1}{\lambda_1} - \frac{1}{\lambda_2} = -\frac{1}{\lambda_1} \Big( \frac{1}{\lambda_2} +1 \Big) - \frac{1}{\lambda_2} < \frac{1}{\lambda_2}+1-\frac{1}{\lambda_2} = 1 \;\; \text{since } \frac{1}{\lambda_2}+1 > 0, \\ & |\phi_2| = \frac{1}{|\lambda_1| |\lambda_2|} < 1. \end{aligned} \]

We can also show the only if part of the statement as follows:

Since \(\lambda_1 = \frac{\phi_1 + \sqrt{\phi_1^2 + 4\phi_2}}{-2\phi_2}\) and \(\phi_2 - 1 < \phi_1 < 1- \phi_2\), we have \[ \lambda_1^2 = \frac{(\phi_1 + \sqrt{\phi_1^2 + 4\phi_2})^2}{4\phi_2^2} < \frac{\Big( (1-\phi_2)+ \sqrt{(1-\phi_2)^2 + 4\phi_2} \Big)^2}{4\phi_2^2} = \frac{4}{4\phi_2^2} \leq 1. \]

Since \(\lambda_2 = \frac{\phi_1 - \sqrt{\phi_1^2 + 4\phi_2}}{-2\phi_2}\) and \(\phi_2 - 1 < \phi_1 < 1- \phi_2\), we have \[ \lambda_2^2 = \frac{(\phi_1 - \sqrt{\phi_1^2 + 4\phi_2})^2}{4\phi_2^2} < \frac{\Big( (\phi_2-1)+ \sqrt{(\phi_2-1)^2 + 4\phi_2} \Big)^2}{4\phi_2^2} = \frac{4\phi_2^2}{4\phi_2^2} = 1. \]

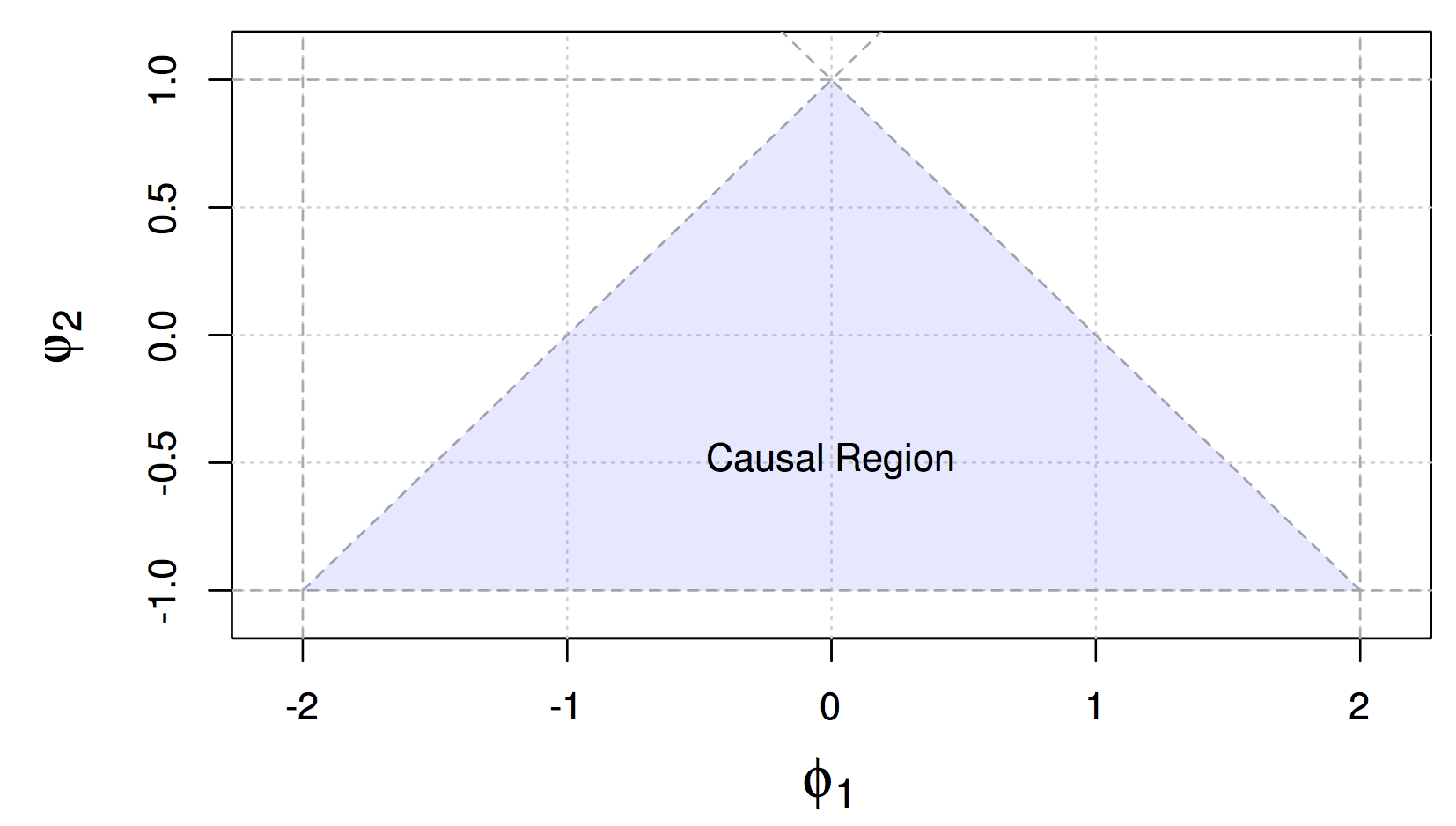

Finally, the causal region of an AR(2) is demonstrated as

Figure 4.1: Causal Region for Parameters of an AR(2) Process

4.2.2 Estimation of AR(p) models

Given the above defined properties of AR(p) models, we will now discuss how these models can be estimated, more specifically how the \(p+1\) parameters can be obtained from an observed time series. Indeed, a reliable estimation of these models is necessary in order to intepret and describe different natural phenomena and/or forecast possible future values of the time series.

A first approach builds upon the earlier definition of AR(\(p\)) models being a linear process. Recall that \[\begin{equation} X_t = \sum_{j = 1}^{p} \phi_j X_{t-j} \end{equation}\] which delivers the following autocovariance function \[\begin{equation} \gamma(h) = \text{cov}(X_{t+h}, X_t) = \text{cov}\left(\sum_{j = 1}^{p} \phi_j X_{t+h-j}, X_t\right) = \sum_{j = 1}^{p} \phi_j \gamma(h-j), \mbox{ } h \geq 1. \end{equation}\] Rearranging the above expressions we obtain the following general equations \[\begin{equation} \gamma(h) - \sum_{j = 1}^{p} \phi_j \gamma(h-j) = 0, \mbox{ } h \geq 1 \end{equation}\] and, recalling that \(\gamma(h) = \gamma(-h)\), \[\begin{equation} \gamma(0) - \sum_{j = 1}^{p} \phi_j \gamma(j) = \sigma_w^2. \end{equation}\]We can now define the Yule-Walker equations.

Definition: The Yule-Walker equations are given by \[\begin{equation} \gamma(h) = \phi_1 \gamma(h-1) + ... + \phi_p \gamma(h-p), \mbox{ } h = 1,...,p \end{equation}\] and \[\begin{equation} \sigma_w^2 = \gamma(0) - \phi_1 \gamma(1) - ... - \phi_p \gamma(p). \end{equation}\] which in matrix notation can be defined as follows \[\begin{equation} \Gamma_p \mathbf{\phi} = \mathbf{\gamma}_p \,\, \text{and} \,\, \sigma_w^2 = \gamma(0) - \mathbf{\phi}'\mathbf{\gamma}_p \end{equation}\]where \(\Gamma_p\) is the \(p\times p\) matrix containing the autocovariances \(\gamma(k-j)\), where \(j,k = 1, ...,p\), while \(\mathbf{\phi} = (\phi_1,...,\phi_p)'\) and \(\mathbf{\gamma}_p = (\gamma(1),...,\gamma(p))'\) are \(p\times 1\) vectors.

Considering the Yule-Walker equations, it is possible to use a method of moments approach and simply replace the theoretical quantities given in the previous definition with their empirical (estimated) counterparts that we saw in the previous chapter. This gives us the following Yule-Walker estimators \[\begin{equation} \hat{\mathbf{\phi}} = \hat{\Gamma}_p^{-1}\hat{\mathbf{\gamma}}_p \,\, \text{and} \,\, \hat{\sigma}_w^2 = \hat{\gamma}(0) - \hat{\mathbf{\gamma}}_p'\hat{\Gamma}_p^{-1}\hat{\mathbf{\gamma}}_p . \end{equation}\]These estimators have the following asymptotic properties.

Consistency and Asymptotic Normality of Yule-Walker estimators: The Yule-Walker estimators for a causal AR(\(p\)) model have the following asymptotic properties:

\[\begin{equation*} \sqrt{T}(\hat{\mathbf{\phi}}- \mathbf{\phi}) \xrightarrow{\mathcal{D}} \mathcal{N}(\mathbf{0},\sigma_w^2\Gamma_p^{-1}) \,\, \text{and} \,\, \hat{\sigma}_w^2 \xrightarrow{\mathcal{P}} \sigma_w^2 . \end{equation*}\] Therefore the Yule-Walker estimators have an asymptotically normal distribution and the estimator of the innovation variance is consistent. Moreover, these estimators are also optimal for AR(\(p\)) models, meaning that they are also efficient. However, there is also another method which allows to achieve this efficiency (also for general ARMA models that will be tackled further on) and this is the Maximum Likelihood Estimation (MLE) method. Considering an AR(1) model as an example, and assuming without loss of generality that its expectation is zero, we have the following representation of the AR(1) model \[\begin{equation*} X_t = \phi X_{t-1} + W_t, \end{equation*}\] where \(|\phi|<1\) and \(W_t \overset{iid}{\sim} \mathcal{N}(0,\sigma_w^2)\). Supposing we have observations \((x_t)_{t=1,...,T}\) issued from this model, then the likelihood function for this setting is given by \[\begin{equation*} L(\phi,\sigma_w^2) = f(\phi,\sigma_w^2|x_1,...,x_T) \end{equation*}\] which, for an AR(1) model, can be rewritten as follows \[\begin{equation*} L(\phi,\sigma_w^2) = f(x_1)f(x_2|x_1)\cdot \cdot \cdot f(x_T|x_{T-1}). \end{equation*}\] If we define \(\Omega_t^p\) as the information contained in the previous \(p\) observations (before time \(t\)), the above expression can be generalized for an AR(p) model as follows \[\begin{equation*} L(\phi,\sigma_w^2) = f(x_1,...,x_p)f(x_{p+1}|\Omega_{p+1}^p)\cdot \cdot \cdot f(x_T|\Omega_{T}^p) \end{equation*}\] where \(f(x_1,...,x_p)\) is the joint probability distribution of the first \(p\) observations. Going back to the AR(1) setting, based on our assumption on \((W_t)\) we know that \(x_t|x_{t-1} \sim \mathcal{N}(\phi x_{t-1},\sigma_w^2)\) and therefore we have that \[\begin{equation*} f(x_t|x_{t-1}) = f_w(x_t - \phi x_{t-1}) \end{equation*}\] where \(f_w(\cdot)\) is the distribution of \(W_t\). This rearranges the likelihood function as follows \[\begin{equation*} L(\phi,\sigma_w^2) = f(x_1)\prod_{t=2}^T f_w(x_t - \phi x_{t-1}), \end{equation*}\] where \(f(x_1)\) can be found through the causal representation \[\begin{equation*} x_1 = \sum_{j=0}^{\infty} \phi^j w_{1-j}, \end{equation*}\] which implies that \(x_1\) follows a normal distribution with zero expectation and a variance given by \(\frac{\sigma_w^2}{(1-\phi^2)}\). Based on this, the likelihood function of an AR(1) finally becomes \[\begin{equation*} L(\phi,\sigma_w^2) = (2\pi \sigma_w^2)^{-\frac{T}{2}} (1 - \phi^2)^{\frac{1}{2}} \exp \left(-\frac{S(\phi)}{2 \sigma_w^2}\right), \end{equation*}\] with \(S(\phi) = (1-\phi^2) x_1^2 + \sum_{t=2}^T (x_t -\phi x_{t-1})^2\). Once the derivative of the logarithm of the likelihood is taken, the minimization of the negative of this function is usually done numerically. However, if we condition on the initial values, the AR(p) models are linear and, for example, we can then define the conditional likelihood of an AR(1) as \[\begin{equation*} L(\phi,\sigma_w^2|x_1) = (2\pi \sigma_w^2)^{-\frac{T-1}{2}} \exp \left(-\frac{S_c(\phi)}{2 \sigma_w^2}\right), \end{equation*}\] where \[\begin{equation*} S_c(\phi) = \sum_{t=2}^T (x_t -\phi x_{t-1})^2 . \end{equation*}\] The latter is called the conditional sum of squares and \(\phi\) can be estimated as a straightforward linear regression problem. Once an estimate \(\hat{\phi}\) is obtained, this can be used to obtain the conditional maximum likelihood estimate of \(\sigma_w^2\) \[\begin{equation*} \hat{\sigma}_w^2 = \frac{S_c(\hat{\phi})}{(T-1)} . \end{equation*}\] The estimation methods presented so far are standard for these kind of models. Nevertheless, if the data suffers from some form of contamination, these methods can become highly biased. For this reason, some robust estimators are available to limit this problematic if there are indeed outliers in the observed time series. One of these methods relies on the estimator proposed in Kunsch (1984) who underlines that the MLE score function of an AR(p) is given by \[\begin{equation*} \kappa(\mathbf{\theta}|x_j,...x_{j+p}) = \frac{\partial}{\partial \mathbf{\theta}} (x_{j+p} - \sum_{k=1}^p \phi_k x_{j+p-k})^2, \end{equation*}\] where \(\theta\) is the parameter vector containing, in the case of an AR(1) model, the two parameters \(\phi\) and \(\sigma_w^2\) (i.e. \(\theta = [\phi \,\, \sigma_w^2]\)). This delivers the estimating equation \[\begin{equation*} \sum_{j=1}^{n-p} \kappa (\hat{\mathbf{\theta}}|x_j,...x_{j+p}) = 0 . \end{equation*}\] The score function \(\kappa(\cdot)\) is clearly not bounded, in the sense that if we arbitrarily move a value of \((x_t)\) to infinity then the score function also goes to infinity thereby delivering a biased estimation procedure. To avoid that outlying observations bias the estimation excessively, a bounded score function can be used to deliver an M-estimator given by \[\begin{equation*} \sum_{j=1}^{n-p} \psi (\hat{\mathbf{\theta}}|x_j,...x_{j+p}) = 0, \end{equation*}\] where \(\psi(\cdot)\) is a function of bounded variation. When conditioning on the first \(p\) observations, this problem can be brought back to a linear regression problem which can be applied in a robust manner using the robust regression tools available inR such as rlm or lmrob. However, another available tool in R which does not require a strict specification of the distribution function (also for general ARMA models) is the method = "rgmwm" option within the estimate() function (in the simts package). This function makes use of a quantity called the wavelet variance (denoted as \(\boldsymbol{\nu}\)) which is estimated robustly and then used to retrieve the parameters \(\theta\) of the time series model. The robust estimate is obtained by solving the following minimization problem

\[\begin{equation*}

\hat{\boldsymbol{\theta}} = \underset{\boldsymbol{\theta} \in \boldsymbol{\Theta}}{\text{argmin}} (\hat{\boldsymbol{\nu}} - \boldsymbol{\nu}(\boldsymbol{\theta}))^T\boldsymbol{\Omega}(\hat{\boldsymbol{\nu}} - \boldsymbol{\nu}({\boldsymbol{\theta}})),

\end{equation*}\]

where \(\hat{\boldsymbol{\nu}}\) is the robustly estimated wavelet variance, \(\boldsymbol{\nu}({\boldsymbol{\theta}})\) is the theoretical wavelet variance (implied by the model we want to estimate) and \(\boldsymbol{\Omega}\) is a positive definite weighting matrix. Below we show some simulation studies where we present the results of the above estimation procedures in absence and in presence of contamination in the data. As a reminder, so far we have mainly discussed three estimators for the parameters of AR(\(p\)) models (i.e. Yule-Walker, maximum likelihod, and RGMWM estimators).

Using the simts package, the first three estimators can be computed as follows:

mod = estimate(AR(p), Xt, method = select_method, demean = TRUE)In the above sample code Xt denotes the time series (a vector of length \(T\)), p is the order of the AR(\(p\)) and demean = TRUE indicates that the mean of the process should be estimated (if this is not the case, then use demean = FALSE). The select_method input can be (among others) "mle" for the maximum likelihood and "yule-walker" for the Yule-Walker estimator or "rgmwm" for the RGMWM. For example, if you would like to estimate a zero mean AR(3) with the MLE you can use the code:

mod = estimate(AR(3), Xt, method = "mle", demean = FALSE)On the other hand, if one wishes to estimate the parameters of this model through the RGMWM one can use the following syntax:

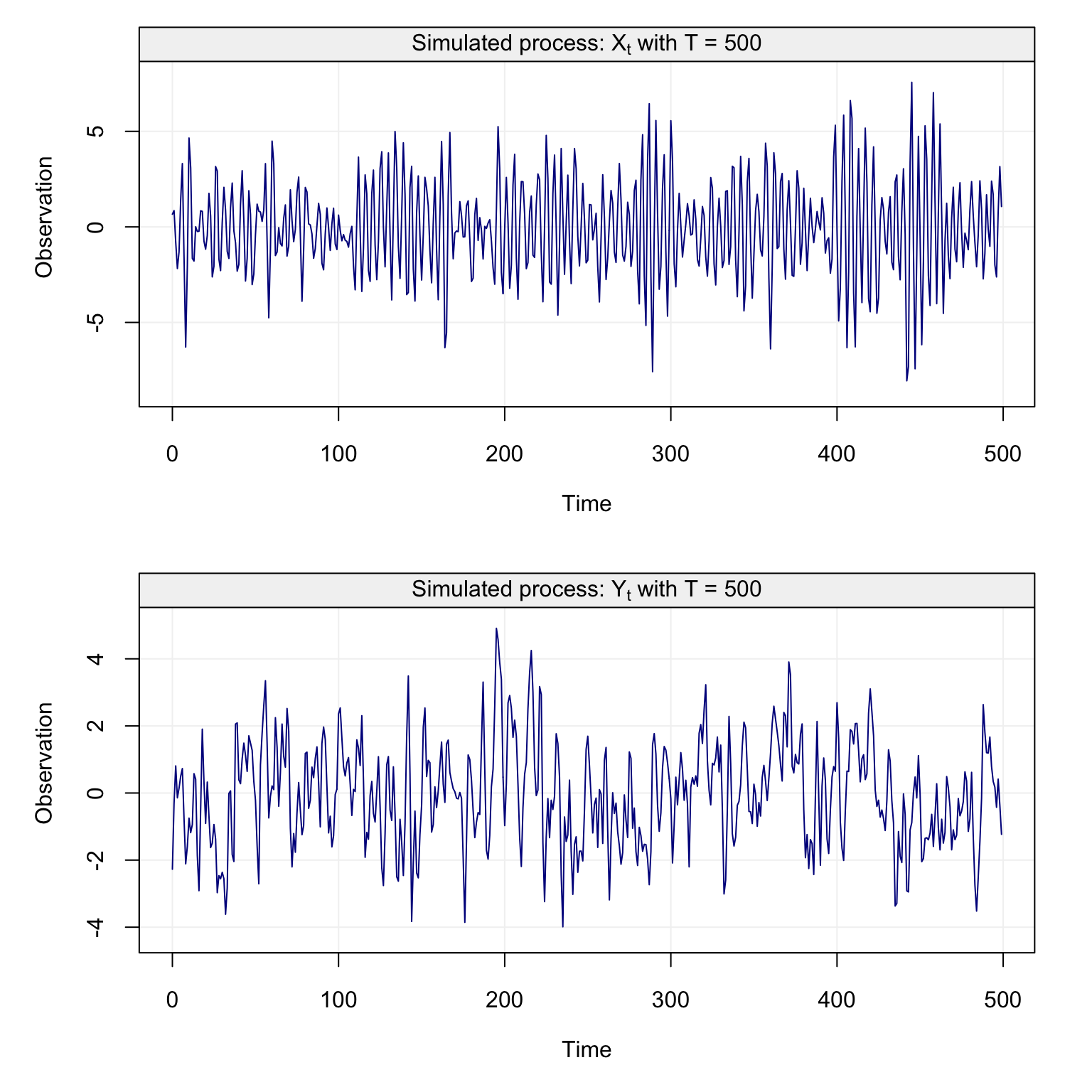

mod = estimate(AR(3), Xt, method = "rgmwm", demean = FALSE)Removing the mean is not strictly necessary for the rgmwm (or gmwm) function since it won’t estimate it and can consistently estimate the parameters of the time series model anyway. We now have the necessary R functions to deliver the above mentioned estimators and we can now proceed to the simulation study. In particular, we simulate three different processes \(X_t, Y_t, Z_t\) by using the first as an uncontaminated process defined as \[X_t = 0.5 X_{t-1} - 0.25 X_{t-2} + W_t,\] with \(W_t \overset{iid}{\sim} N(0, 1)\). This first process \((X_t)\) is uncontaminated while the other two processes are contaminated versions of the first that can often be observed in practice. The first type of contamination can be seen in \((Y_t)\) and is delivered by replacing a portion of the original process with a process defined as \[U_t = 0.90 U_{t-1} - 0.40 U_{t-2} + V_t,\] where \(V_t \overset{iid}{\sim} N(0, 9)\). The second form of contamination can be seen in \((Z_t)\) and consists in the so-called point-wise contamination where randomly selected points from \(X_t\) are replaced with \(N_t \overset{iid}{\sim} N(0, 9)\).

The code below performs the simulation study where it can be seen how the contaminated processes \((Y_t)\) and \((Z_t)\) are generated. Once this is done, for each simultation the code estimates the parameters of the AR(2) model using the three different estimation methods.

# Load simts

library(simts)

# Number of bootstrap iterations

B = 250

# Sample size

n = 500

# Proportion of contamination

eps = 0.05

# Number of contaminated observations

cont = round(eps*n)

# Simulation storage

res.Xt.MLE = res.Xt.YW = res.Xt.RGMWM = matrix(NA, B, 3)

res.Yt.MLE = res.Yt.YW = res.Yt.RGMWM = matrix(NA, B, 3)

res.Zt.MLE = res.Zt.YW = res.Zt.RGMWM = matrix(NA, B, 3)

# Begin bootstrap

for (i in seq_len(B)){

# Set seed for reproducibility

set.seed(1982 + i)

# Generate processes

Xt = gen_gts(n, AR(phi = c(0.5, 0.25), sigma2 = 1))

Yt = Zt = Xt

# Generate Ut contamination process that replaces a portion of original signal

index_start = sample(1:(n-cont-1), 1)

index_end = index_start + cont - 1

Yt[index_start:index_end] = gen_gts(cont, AR(phi = c(0.9,-0.4), sigma2 = 9))

# Generate Nt contamination that inject noise at random

Zt[sample(n, cont, replace = FALSE)] = gen_gts(cont, WN(sigma2 = 9))

# Fit Yule-Walker estimators on the three time series

mod.Xt.YW = estimate(AR(2), Xt, method = "yule-walker",

demean = FALSE)

mod.Yt.YW = estimate(AR(2), Yt, method = "yule-walker",

demean = FALSE)

mod.Zt.YW = estimate(AR(2), Zt, method = "yule-walker",

demean = FALSE)

# Store results

res.Xt.YW[i, ] = c(mod.Xt.YW$mod$coef, mod.Xt.YW$mod$sigma2)

res.Yt.YW[i, ] = c(mod.Yt.YW$mod$coef, mod.Yt.YW$mod$sigma2)

res.Zt.YW[i, ] = c(mod.Zt.YW$mod$coef, mod.Zt.YW$mod$sigma2)

# Fit MLE on the three time series

mod.Xt.MLE = estimate(AR(2), Xt, method = "mle",

demean = FALSE)

mod.Yt.MLE = estimate(AR(2), Yt, method = "mle",

demean = FALSE)

mod.Zt.MLE = estimate(AR(2), Zt, method = "mle",

demean = FALSE)

# Store results

res.Xt.MLE[i, ] = c(mod.Xt.MLE$mod$coef, mod.Xt.MLE$mod$sigma2)

res.Yt.MLE[i, ] = c(mod.Yt.MLE$mod$coef, mod.Yt.MLE$mod$sigma2)

res.Zt.MLE[i, ] = c(mod.Zt.MLE$mod$coef, mod.Zt.MLE$mod$sigma2)

# Fit RGMWM on the three time series

res.Xt.RGMWM[i, ] = estimate(AR(2), Xt, method = "rgmwm",

demean = FALSE)$mod$estimate

res.Yt.RGMWM[i, ] = estimate(AR(2), Yt, method = "rgmwm",

demean = FALSE)$mod$estimate

res.Zt.RGMWM[i, ] = estimate(AR(2), Zt, method = "rgmwm",

demean = FALSE)$mod$estimate

}Having performed the estimation, we should now have 250 estimates for each AR(2) parameter and each estimation method. The code below takes the results of the simulation and shows them in the shape of boxplots along with the true values of the parameters. The estimation methods that are denoted as follows:

- YW: Yule-Walker estimator

- MLE: Maximum Likelihood Estimator

- RGMWM: the robust version of the GMWM estimator

Figure 4.2: Boxplots of the empirical distribution functions of the Yule-Walker (YW), MLE and GMWM estimators for the parameters of the AR(2) model when using the Xt process (first row of boxplots), Yt process (second row of boxplots) and Zt (third row of boxplots).

It can be seen how all methods appear to properly estimate the true parameter values on average when they are applied to the simulated time series from the uncontaminated process \((X_t)\). However, the MLE appears to be slightly more efficient (less variable) compared to the other methods and, in addition, the robust method (RGMWM) appears to be less efficient than the other two estimators. The latter is a known result since robust estimators usually pay a price in terms of efficiency (as an insurance against bias).

On the other hand, when checking the performance of the same methods when applied to the two contaminated processes \((Y_t)\) and \((Z_t)\) it can be seen that the standard estimators appear to be (highly) biased for most of the estimated parameters (with one exception) while the robust estimator remains close (on average) to the true parameter values that we are aiming to estimate. Therefore, when there’s a suspicion that there could be some (small) contamination in the observed time series, it may be more appropriate to use a robust estimator.

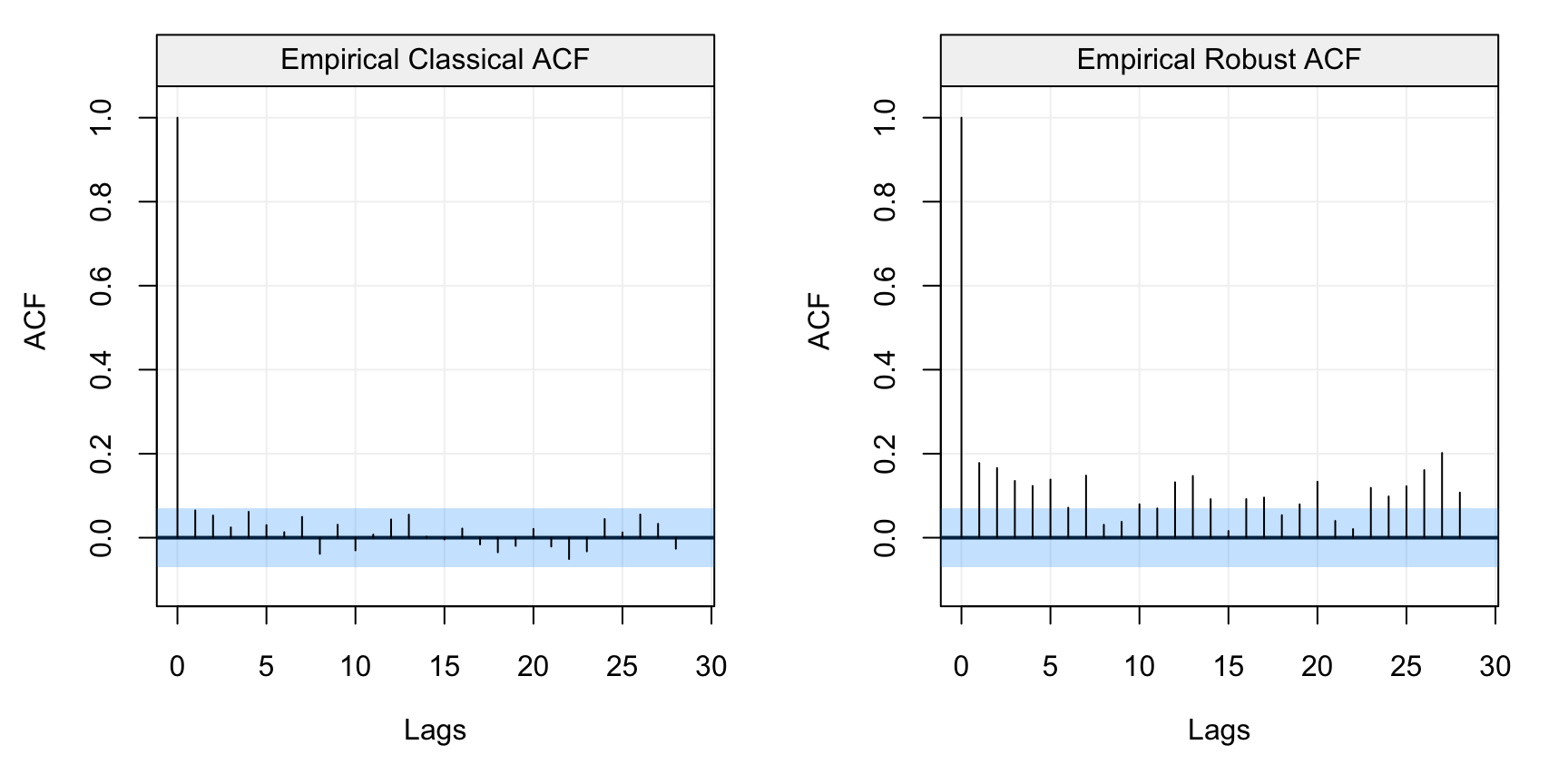

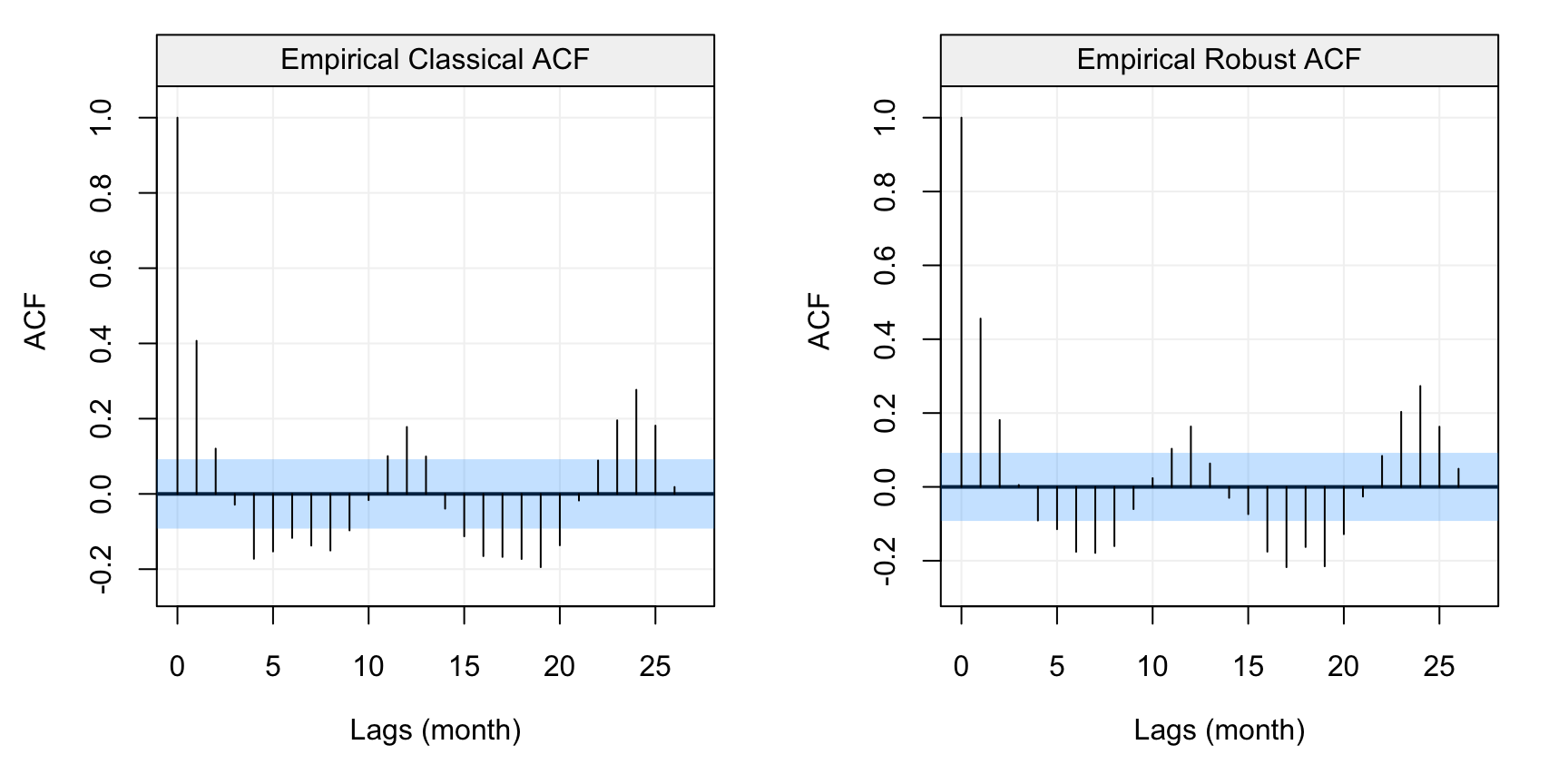

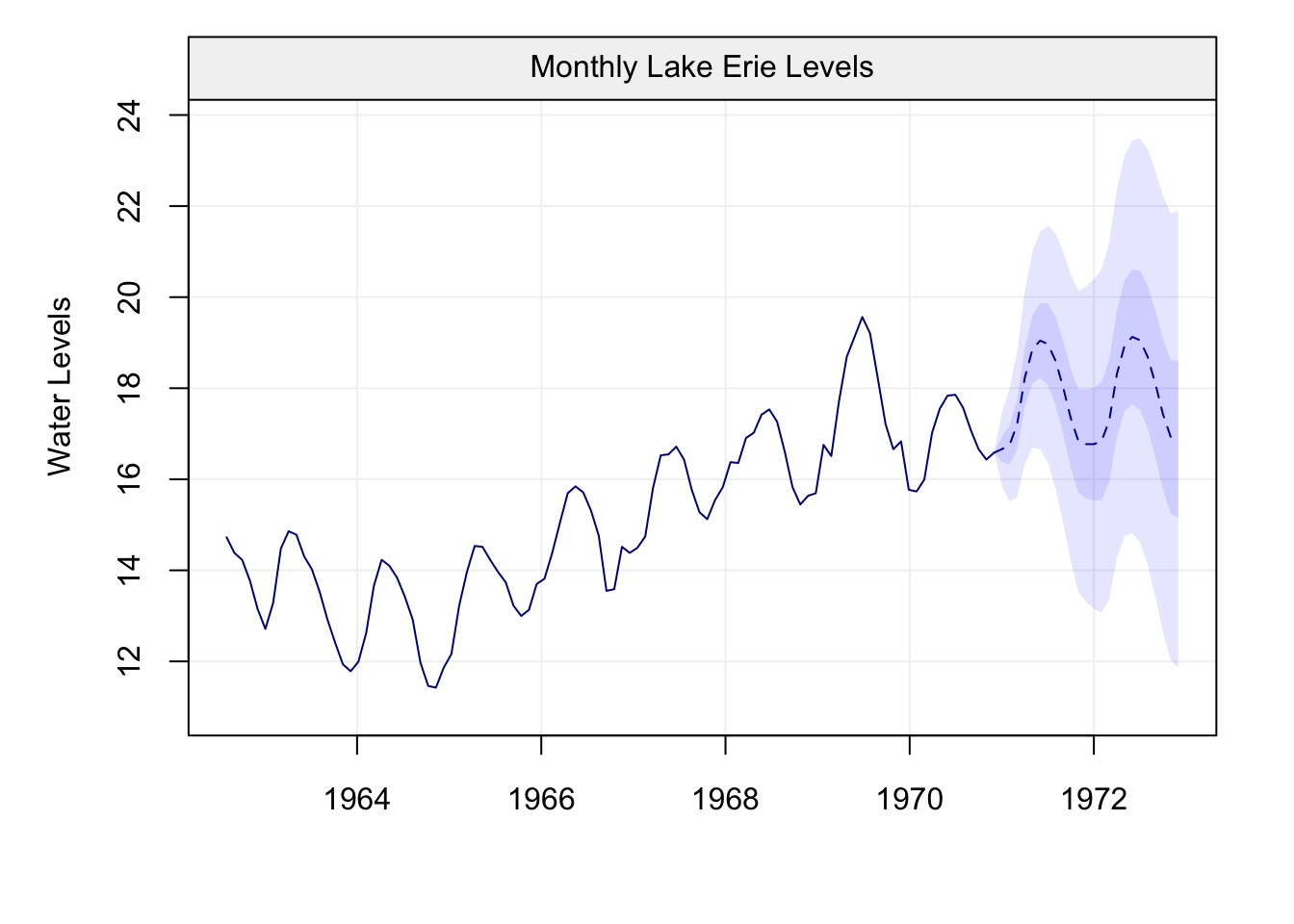

To conclude this section on estimation, we now compare the above studied estimators in different applied settings where we can highlight how to assess which estimator is more appropriate according to the type of setting. For this purpose, let us start with an example we have already checked in the previous chapter when discussing standard and robust estimators of the ACF, more specifically the data on monthly precipitations. As mentioned before when discussing this example, the importance of modelling precipitation data lies in the fact that its usually used to successively model the entire water cycle. Common models for this purpose are either the white noise (WN) model or the AR(1) model. Let us compare the standard and robust ACF again to understand which of these two models seems more appropriate for the data at hand.

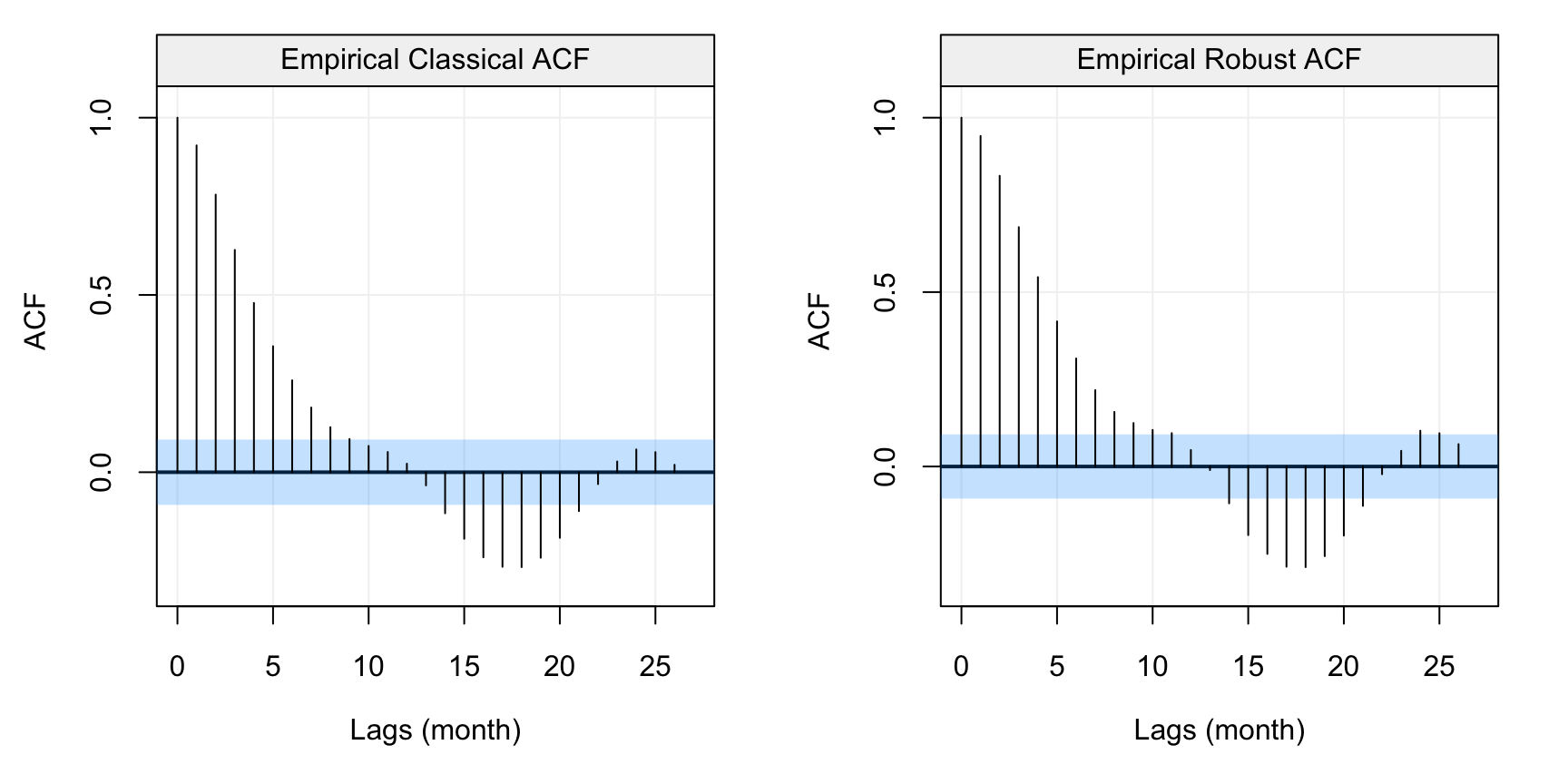

compare_acf(hydro)

Figure 4.3: Standard (left) and robust (left) estimates of the ACF function on the monthly precipitation data (hydro)

As we had underlined in the previous chapter, the standard ACF estimates would suggest that there appears to be no correlation among lags and consequently, the WN model would be the most appropriate. However, the robust ACF estimates depict an entirely different picture where it can be seen that there appears to be a significant autocorrelation over different lags which exponentially decay. Although there appears to be some seasonality in the plot, we will assume that the correct model for this data is an AR(1) since that’s what hydrology theory suggests. Let us therefore estimate the parameters of this model by using a standard estimator (MLE) and a robust estimator (RGMWM). The estimates for the MLE are the following:

mle_hydro = estimate(AR(1), as.vector(hydro), method = "mle", demean = TRUE)

# MLE Estimates

c(mle_hydro$mod$coef[1], mle_hydro$mod$sigma2)## ar1

## 0.06497727 0.22205713From these estimates it would appear that the autocorrelation between lagged variables (i.e. lags of order 1) is extremely low and that (as suggested by the standard ACF plot) a WN model may be more appropriate. Considering the robust ACF however, it is possible that the MLE estimates may not be reliable in this setting. Hence, let us use the RGMWM to estimate the same parameters.

rgmwm_hydro = estimate(AR(1), hydro, method = "rgmwm")$mod$estimate

# RGMWM Estimates

t(rgmwm_hydro)## AR SIGMA2

## Estimates 0.4048702 0.1065875In this case, we see how the autocorrelation between lagged values is much higher (0.4 compared to 0.06) indicating that there is a stronger dependence in the data than what is suggested by standard estimators. Moreover, the innovation variance is smaller compared to that of the MLE. This is also a known phenomenon when there’s contamination in the data since it leads to less dependence and more variability being detected by non-robust estimators. This estimate of the variance also has a considerable impact on forecast precision (as we’ll see in the next section).

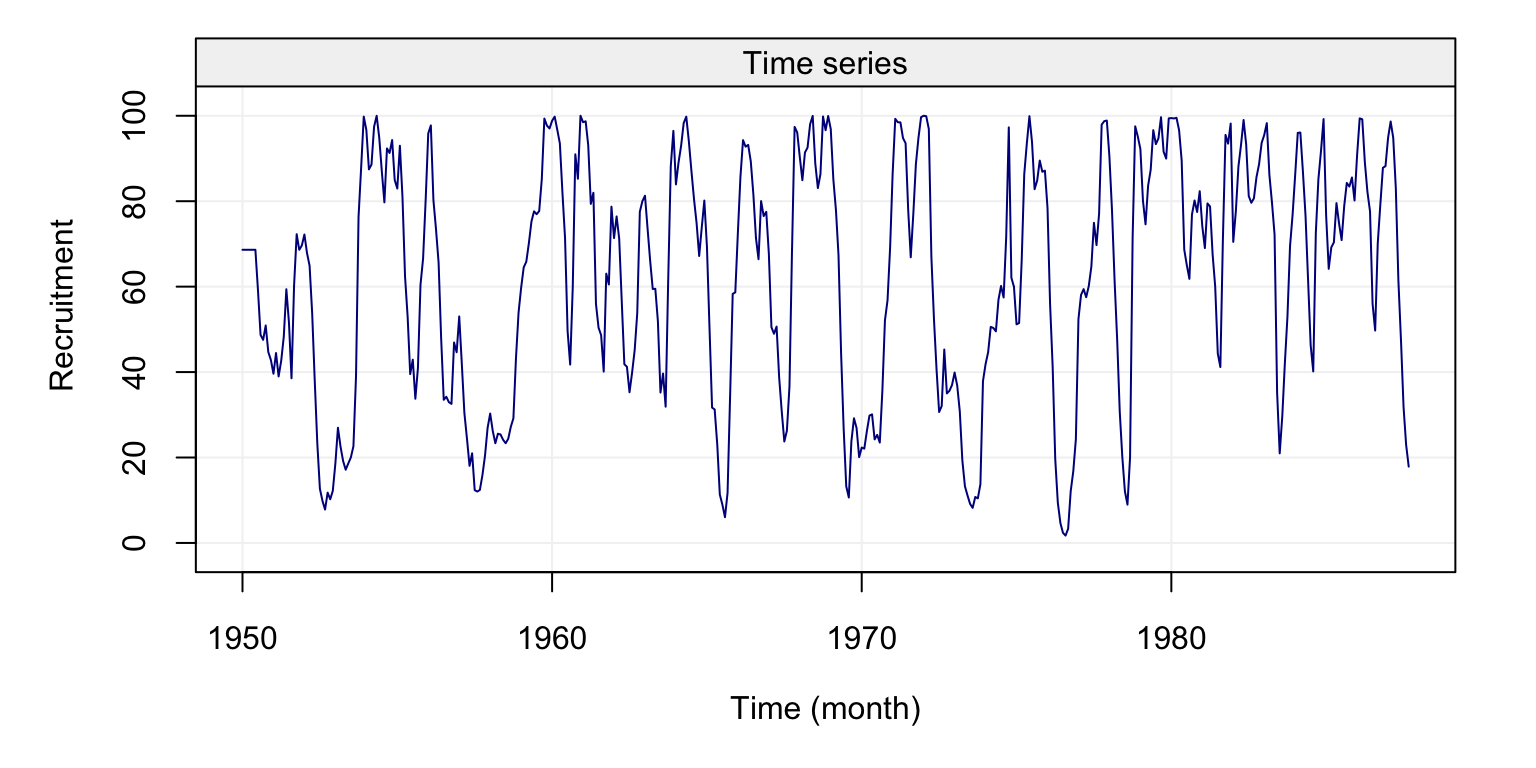

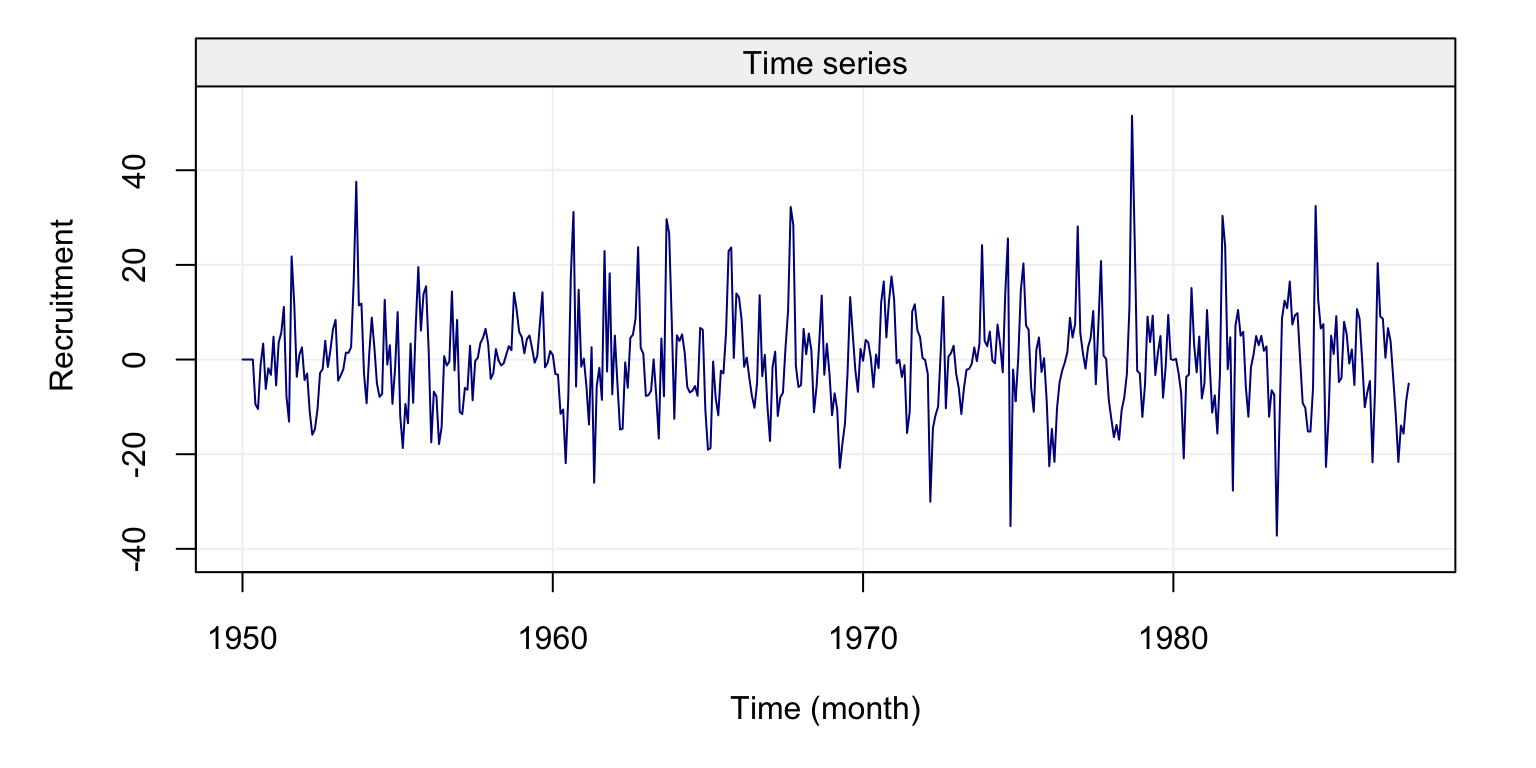

A final applied example that highlights the (potential) difference between estimators according to the type of setting is given by the “Recruitment” data set (in the astsa library). This data refers to the presence of new fish in the population of the Pacific Ocean and is often linked to the currents and temperatures passing through the ocean. As for the previous data set, let us take a look at the data itself and then analyse the standard and robust estimations of the ACF.

# Format data

fish = gts(rec, start = 1950, freq = 12, unit_time = 'month', name_ts = 'Recruitment')

# Plot data

plot(fish)

Figure 4.4: Plot of the time series on fish recruitment monthly data in the Pacific Ocean from 1950 to 1987

compare_acf(fish)

Figure 4.5: Standard (left) and robust (left) estimates of the ACF function on the monthly fish recruitment data (rec)

We can see that there appears to be a considerable dependence between the lagged variables which decays (in a similar way to the ACF of an AR(\(p\))). Also in this case we see a seasonality in the data but we won’t consider this for the purpose of this example. Given that there doesn’t appear to be any significant contamination in the data, let us consider the Yule-Walker and MLE estimators. The MLE highly depends on the assumed parametric distribution of the time series (i.e. usually Gaussian) and, if this is not respected, the resulting estimations could be unreliable. Hence, a first difference of the time series can often give an idea of the marginal distribution of the time series.

# Take first differencing of the recruitment data

diff_fish = gts(diff(rec), start = 1950, freq = 12, unit_time = 'month', name_ts = 'Recruitment')

# Plot first differencing of the recruitment data

plot(diff_fish)

Figure 4.6: Plot of the first difference of the time series on fish recruitment monthly data in the Pacific Ocean from 1950 to 1987

From the plot we can see that observations appear to be collected around a constant value and fewer appear to be further from this value (as would be the case for a normal distribution). However, various of these “more extreme” observations appear to be quite frequent suggesting that the underlying distribution may have a heavier tail compared to the normal distribution. Let us compare the standard and robust ACF estimates of this new time series.

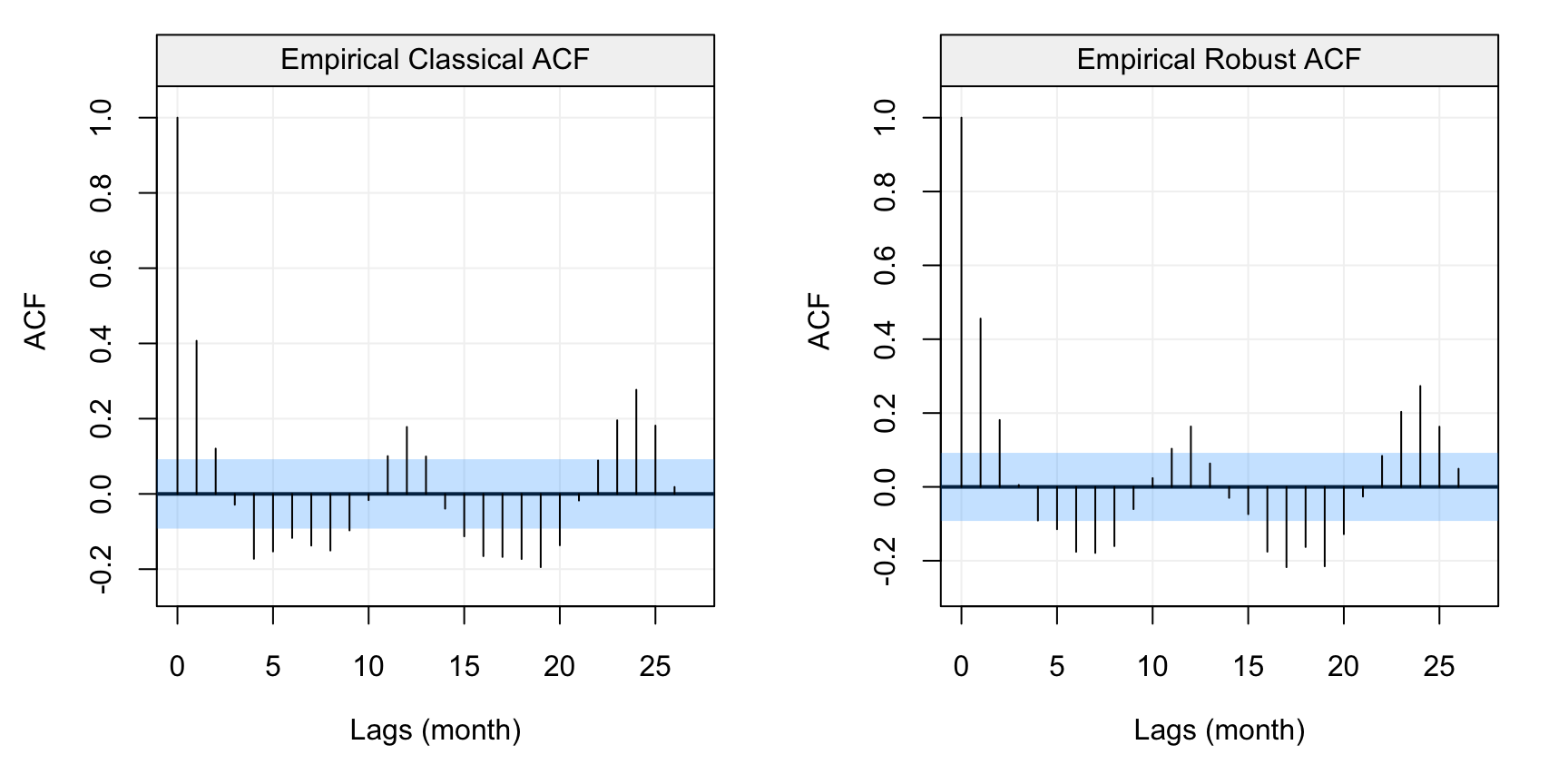

compare_acf(diff_fish)

Figure 4.7: Standard (left) and robust (left) estimates of the ACF function on the first difference of the monthly fish recruitment data (rec)

In this case we see that the patterns appear to be the same but the values between the standard and robust estimates are slightly (to moderately) different over different lags. This would suggest that there could be some contamination in the data or, in any case, that the normal assumption may not hold exactly. With this in mind, let us estimate an AR(2) model for this data using the Yule-Walker and MLE.

# MLE of Recruitment data

yw_fish = estimate(AR(2), rec, method = "yule-walker", demean = TRUE)

# MLE of Recruitment data

mle_fish = estimate(AR(2), rec, method = "mle", demean = TRUE)

# Compare estimates

# Yule-Walker Estimation

c(yw_fish$mod$coef[1], yw_fish$mod$sigma2)## ar1

## 1.354069 89.717052# MLE Estimation

c(mle_fish$mod$coef[1], mle_fish$mod$sigma2)## ar1

## 1.351218 89.334361It can be seen that, in this setting, the two estimators deliver very similar results (at least in terms of the \(\phi_1\) and \(\phi_2\) coefficients). Indeed, there doesn’t appear to be a strong need for robust estimation and the choice of a standard estimator is justified by the will to obtain efficient estimations. The only slight difference between the two estimations is in the innovation variance parameter \(\sigma^2\) and this could be (evenutally) due to the normality assumption that the MLE estimator upholds in this case. If there is therefore a doubt on the fact that the Gaussian assumption does not hold for this data, then it is probably more convenient to use the Yule-Walker estimates.

Until now we have focussed on estimation based on an assumed model. However, how do we choose a model? How can we make inference on the models and their parameters? To perform all these tasks we will need to compute residuals (as, for example, in the linear regression framework). In order to obtain residuals, we need to be able to predict (forecast) values of the time series and, consequently, the next section focuses on forecasting time series.

4.2.3 Forecasting AR(p) Models

One of the most interesting aspects of time series analysis is to predict the future unobserved values based on the values that have been observed up to now. However, this is not possible if the underlying (parametric) model is unknown, thus in this section we assume, for purpose of illustration, that the time series \((X_t)\) is stationary and its model is known. In particular, we denote forecasts by \(X^{t}_{t+j}\), where \(t\) represents the time point from which we would like to make a forecast assuming we have an observed time series (e.g. \(\mathbf{X} = (X_{1}, X_{2}, \cdots , X_{t-1}, X_t)\)) and \(j\) represents the \(j^{th}\)-ahead future value we wish to predict. So, \(X^{t}_{t+1}\) represents a one-step-ahead prediction of \(X_{t+1}\) given data \((X_{1}, X_{2}, \cdots, X_{t-1}, X_{t})\).

Let us now focus on defining a prediction operator and, for this purpose, let us define the Mean Squared Prediction Error (MSPE) as follows:

\[\mathbb{E}[(X_{t+j} - X^{t}_{t+j})^2] .\] Intuitively, the MPSE measures the square distance (i.e. always positive) between the actual future values and the corresponding predictions. Ideally, we would want this measure to be equal to zero (meaning that we don’t make any prediction errors) but, if this is not possible, we would like to define a predictor that has the smallest MPSE among all possible predictors. The MPSE is not necessarily the best measure of prediction accuracy since, for example, it can be greatly affected by outliers or doesn’t take into account importance of positive or negative misspredictions (e.g. for risks in finance or insurance). Nevertheless, it is a good overall measure of forecast accuracy and the next theorem states what the best predictor is for this measure.

Theorem 4.1 (Minimum Mean Squared Error Predictor) Let us define \[X_{t + j}^t \equiv \mathbb{E}\left[ {{X_{t + j}}|{X_t}, \cdots ,{X_1}} \right], j > 0\]

Then \[\mathbb{E}\left[ {{{\left( {{X_{t + j}} - m\left( {{X_1}, \cdots ,{X_t}} \right)} \right)}^2}} \right] \ge \mathbb{E}\left[ {{{\left( {{X_{t + j}} - X_{t + j}^t} \right)}^2}} \right]\] for any function \(m(.)\).

The proof of this theorem can be found in Appendix A.2. Although this theorem defines the best possible predictor, there can be many functional forms for this operator. We first restrict our attention to the set of linear predictors defined as

\[X_{t+j}^t = \sum_{i=1}^t \alpha_i X_i,\] where \(\alpha_i \in \mathbb{R}\). It can be noticed, for example, that the \(\alpha_i\)’s are not always the same based on the values of \(t\) and \(j\) (i.e. it depends from which time point you want to predict and how far into the future). Another aspect to notice is that, if the time series model underlying the observed time series can be expressed in the form of a linear operator (e.g. a linear process), then we can derive a linear predictor from this framework.

Let us consider some examples to understand how these linear predictors can be delivered based on the AR(p) models studied this far. For this purpose, let us start with an AR(1) process.

Example 4.8 (Forecasting with an AR(1) model) The AR(1) model is defined as follows:

\[{X_t} = \phi {X_{t - 1}} + {W_t},\]

where \(W_t \sim WN(0, \sigma^2)\).

From here, the conditional expected mean and variance are given by

\[\begin{aligned} \mathbb{E}\left[ X_{t + j} | \Omega_t \right] &= \mathbb{E}[\phi X_{t+j-1} + W_{t+j}\,| \, \Omega_t ] \\ &= ... = {\phi ^j}{X_t} \\ \operatorname{var}\left[ {{X_{t + j}}} | \Omega_t \right] &= \left( {1 + {\phi ^2} + {\phi ^4} + \cdots + {\phi ^{2\left( {j - 1} \right)}}} \right){\sigma ^2} \\ \end{aligned} \]

Within this derivation, it is important to remember that

\[\begin{aligned} \mathop {\lim }\limits_{j \to \infty } \mathbb{E}\left[ {{X_{t + j}}} | \Omega_t \right] &= \mathbb{E}\left[ {{X_t}} \right] = 0 \\ \mathop {\lim }\limits_{j \to \infty } \operatorname{var}_t\left[ {{X_{t + j}}} | \Omega_t \right] &= \operatorname{var} \left( {{X_t}} \right) = \frac{{{\sigma ^2}}}{{1 - {\phi ^2}}} \end{aligned} \]

From these last results we see that the forecast for an AR(1) process is “mean-reverting” since in general we have that the AR(1) can be written with respect to the mean \(\mu\) as follows

\[(X_t - \mu) = \phi (X_{t-1} - \mu) + W_t,\]

which gives

\[X_t = \mu + \phi (X_{t-1} - \mu) + W_t,\]

and consequently

\[\begin{aligned} \mathbb{E}[X_{t+j} | \Omega_t] &= \mu + \mathbb{E}[(X_{t+j} - \mu) | \Omega_t] \\ &= \mu + \phi^j(X_t - \mu), \end{aligned} \]

which tends to \(\mu\) as \(j \to \infty\)Let us now consider a more complicated model, i.e. an AR(2) model.

Example 4.9 (Forecasting with an AR(2) model) Consider an AR(2) process defined as follows:

\[{X_t} = {\phi _1}{X_{t - 1}} + {\phi _2}{X_{t - 2}} + {W_t},\]

where \(W_t \sim WN(0, \sigma^2)\).

Based on this process we are able to find the predictor for each j-step ahead prediction using the following approach:

\[\begin{aligned} \mathbb{E}\left[ {{X_{t + 1}}} | \Omega_t \right] &= {\phi _1}{X_t} + {\phi _2}{X_{t - 1}} \\ \mathbb{E}\left[ {{X_{t + 2}}} | \Omega_t \right] &= \mathbb{E}\left[ {{\phi _1}{X_{t + 1}} + {\phi _2}{X_t}} | \Omega_t \right] = {\phi _1} \mathbb{E}\left[ {{X_{t + 1}}} | \Omega_t \right] + {\phi _2}{X_t} \\ &= {\phi _1}\left( {{\phi _1}{X_t} + {\phi _2}{X_{t - 1}}} \right) + {\phi _2}{X_t} \\ &= \left( {\phi _1^2 + {\phi _2}} \right){X_t} + {\phi _1}{\phi _2}{X_{t - 1}}. \\ \end{aligned} \]

Having seen how to build a forecast for an AR(1), let us now generalise this method to an AR(p) using matrix notation.

Example 4.10 (Forecasting with an AR(p) model) Consider AR(p) process defined as:

\[{X_t} = {\phi _1}{X_{t - 1}} + {\phi _2}{X_{t - 2}} + \cdots + {\phi _p}{X_{t - p}} + {W_t},\]

where \(W_t \sim WN(0, \sigma^2_W)\).

The process can be rearranged into matrix form as follows:

\[\begin{aligned} \underbrace {\left[ {\begin{array}{*{20}{c}} {{X_t}} \\ \vdots \\ \vdots \\ {{X_{t - p + 1}}} \end{array}} \right]}_{{Y_t}} &= \underbrace {\left[ {\begin{array}{*{20}{c}} {{\phi _1}}& \cdots &{}&{{\phi _p}} \\ {}&{}&{}&0 \\ {}&{{I_{p - 1}}}&{}& \vdots \\ {}&{}&{}&0 \end{array}} \right]}_A\underbrace {\left[ {\begin{array}{*{20}{c}} {{X_{t - 1}}} \\ \vdots \\ \vdots \\ {{X_{t - p}}} \end{array}} \right]}_{{Y_{t - 1}}} + \underbrace {\left[ {\begin{array}{*{20}{c}} 1 \\ 0 \\ \vdots \\ 0 \end{array}} \right]}_C{W_t} \\ {Y_t} &= A{Y_{t - 1}} + C{W_t} \end{aligned}\]

From here, the conditional expectation and variance can be computed as follows:

\[\begin{aligned} {E}\left[ {{Y_{t + j}}} | \Omega_t \right] &= {E}\left[ {A{Y_{t + j - 1}} + C{W_{t + j}}} | \Omega_t\right] = {E}\left[ {A{Y_{t + j - 1}}} | \Omega_t \right] + \underbrace {{E}\left[ {C{W_{t + j}}} | \Omega_t \right]}_{ = 0} \\ &= {E}\left[ {A\left( {A{Y_{t + j - 2}} + C{W_{t + j - 1}}} \right)} | \Omega_t \right] = {E}\left[ {{A^2}{Y_{t + j - 2}}} | \Omega_t\right] = {A^j}{Y_t} \\ {\operatorname{var}}\left[ {{Y_{t + j}}} | | \Omega_t\right] &= {\operatorname{var}}\left[ {A{Y_{t + j - 1}} + C{W_{t + j}}} | \Omega_t \right] \\ &= {\sigma ^2}C{C^T} + {\operatorname{var}}\left[ {A{Y_{t + j - 1}}} | \Omega_t \right] = {\sigma ^2}A{\operatorname{var}}\left[ {{Y_{t + j - 1}}} | \Omega_t \right]{A^T} \\ &= {\sigma ^2}C{C^T} + {\sigma ^2}AC{C^T}A + {\sigma ^2}{A^2}{\operatorname{var}}\left[ {{Y_{t + j - 2}}} | \Omega_t \right]{\left( {{A^2}} \right)^T} \\ &= {\sigma ^2}\sum\limits_{k = 0}^{j - 1} {{A^k}C{C^T}{{\left( {{A^K}} \right)}^T}} \\ \end{aligned} \]

Considering the recursive pattern coming from the expressions of the conditional expectation and variance, the predictions can be obtained via the following recursive formulation: \[\begin{aligned} {E}\left[ {{Y_{t + j}}} | \Omega_t \right] &= A{E}\left[ {{Y_{t + j - 1}}} | \Omega_t\right] \\ {\operatorname{var}}\left[ {{Y_{t + j}}} | \Omega_t \right] &= {\sigma ^2}C{C^T} + A{\operatorname{var}}\left[ {{Y_{t + j - 1}}} | \Omega_t \right]{A^T} \\ \end{aligned} \]With this recursive expression we can now compute the conditional expectation and variance of different AR(p) models. Let us therefore revisit the previous AR(2) example using this recursive formula.

Example 4.11 (Forecasting with an AR(2) in Matrix Form) We can rewrite our previous example of the predictions for an AR(2) process as follows

\[\begin{aligned} \underbrace {\left[ {\begin{array}{*{20}{c}} {{X_t}} \\ {{X_{t - 1}}} \end{array}} \right]}_{{Y_t}} &= \underbrace {\left[ {\begin{array}{*{20}{c}} {{\phi _1}}&{{\phi _2}} \\ 1&0 \end{array}} \right]}_A\underbrace {\left[ {\begin{array}{*{20}{c}} {{X_{t - 1}}} \\ {{X_{t - 2}}} \end{array}} \right]}_{{Y_{t - 1}}} + \underbrace {\left[ {\begin{array}{*{20}{c}} 1 \\ 0 \end{array}} \right]}_C{W_t} \\ {Y_t} &= A{Y_{t - 1}} + C{W_t} \end{aligned}\]

Then, we are able to calculate the prediction as

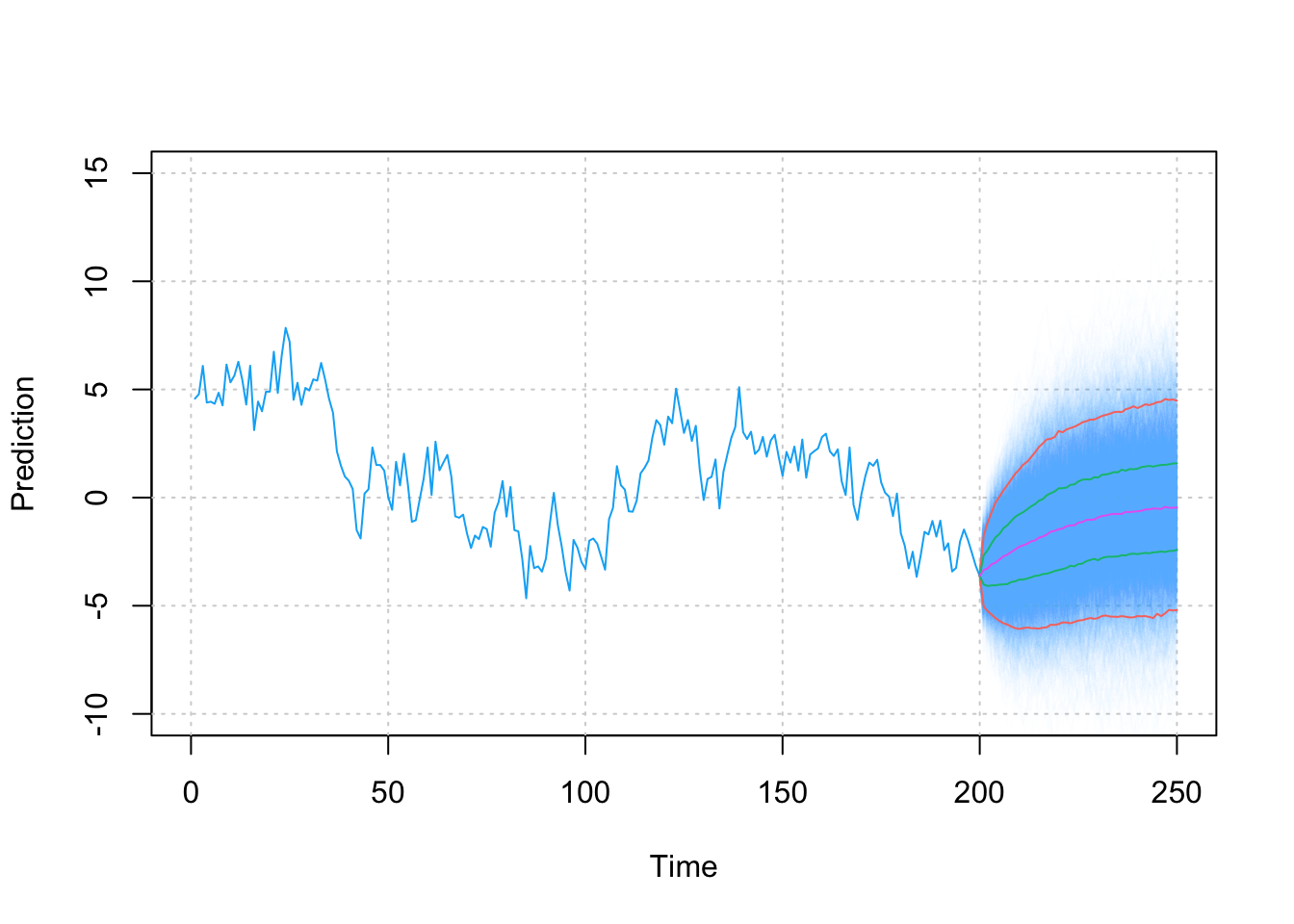

\[\begin{aligned} {E}\left[ {{Y_{t + 2}}} | \Omega_t \right] &= {A^2}{Y_t} = \left[ {\begin{array}{*{20}{c}} {{\phi _1}}&{{\phi _2}} \\ 1&0 \end{array}} \right]\left[ {\begin{array}{*{20}{c}} {{\phi _1}}&{{\phi _2}} \\ 1&0 \end{array}} \right]\left[ {\begin{array}{*{20}{c}} {{X_t}} \\ {{X_{t - 1}}} \end{array}} \right] \hfill \\ &= \left[ {\begin{array}{*{20}{c}} {\phi _1^2 + {\phi _2}}&{{\phi _1}{\phi _2}} \\ {{\phi _1}}&{{\phi _2}} \end{array}} \right]\left[ {\begin{array}{*{20}{c}} {{X_t}} \\ {{X_{t - 1}}} \end{array}} \right] = \left[ {\begin{array}{*{20}{c}} {\left( {\phi _1^2 + {\phi _2}} \right){X_t} + {\phi _1}{\phi _2}{X_{t - 1}}} \\ {{\phi _1}{X_t} + {\phi _2}{X_{t - 1}}} \end{array}} \right] \hfill \\ \end{aligned} \]The above examples have given insight as to how to compute predictors for the AR(p) models that we have studied this far. Let us now observe the consequences of using such an approach to predict future values from these models. For this purpose, using the recursive formulation seen in the examples above we perform a simulation study where, for a fixed set of parameters, we make 5000 predictions from an observed time series and predict 50 values ahead into the future. The known parameters for the AR(2) process we use for the simulation study are \(\phi _1 = 0.75\), \(\phi _2 = 0.2\) and \(\sigma^2 = 1\). The figure below shows the distribution of these predictions starting from the last observation \(T = 200\).

Figure 4.8: Values of the AR(2) predictions with the pink line being the median prediction, red and green lines are respectively 95% and 75% Confidence intervals.

It can be observed that, as hinted by the expressions for the variance of the predictions (in the examples above), the variability of the predictions increases as we try to predict further into the future.

Having now defined the basic concepts for forecasting of time series we can now start another topic of considerable importance for time series analysis. Indeed, the whole discussion on prediction is not only important to derive forecasts for future values of the phenomena one may be interested in, but also in understanding how well a model explains (and predicts) an observed time series. In fact, predictions allow to deliver residuals within the time series setting and, based on these residuals, we can obtain different inference and diagnostic tools.

Given the knowledge on predictions seen above, residuals can be computed as

\[r_{t+j} = X_{t+j} - \hat{X}_{t+j},\] where \(\hat{X}_{t+j}\) represents an estimator of the conditional expectation \(E[X_{t+j} | \Omega_{t}]\). The latter quantity will depend on the model underlying the time series and, assuming we know the true model, we could use the true parameters to obtain a value for this expectation. However, in an applied setting it is improbable that we know the true parameters and therefore \(\hat{X}_{t+j}\) can be obtained by estimating the parameters (as seen in the previous sections) and then plugging thmem into the expression of \(E[X_{t+j} | \Omega_{t}]\).

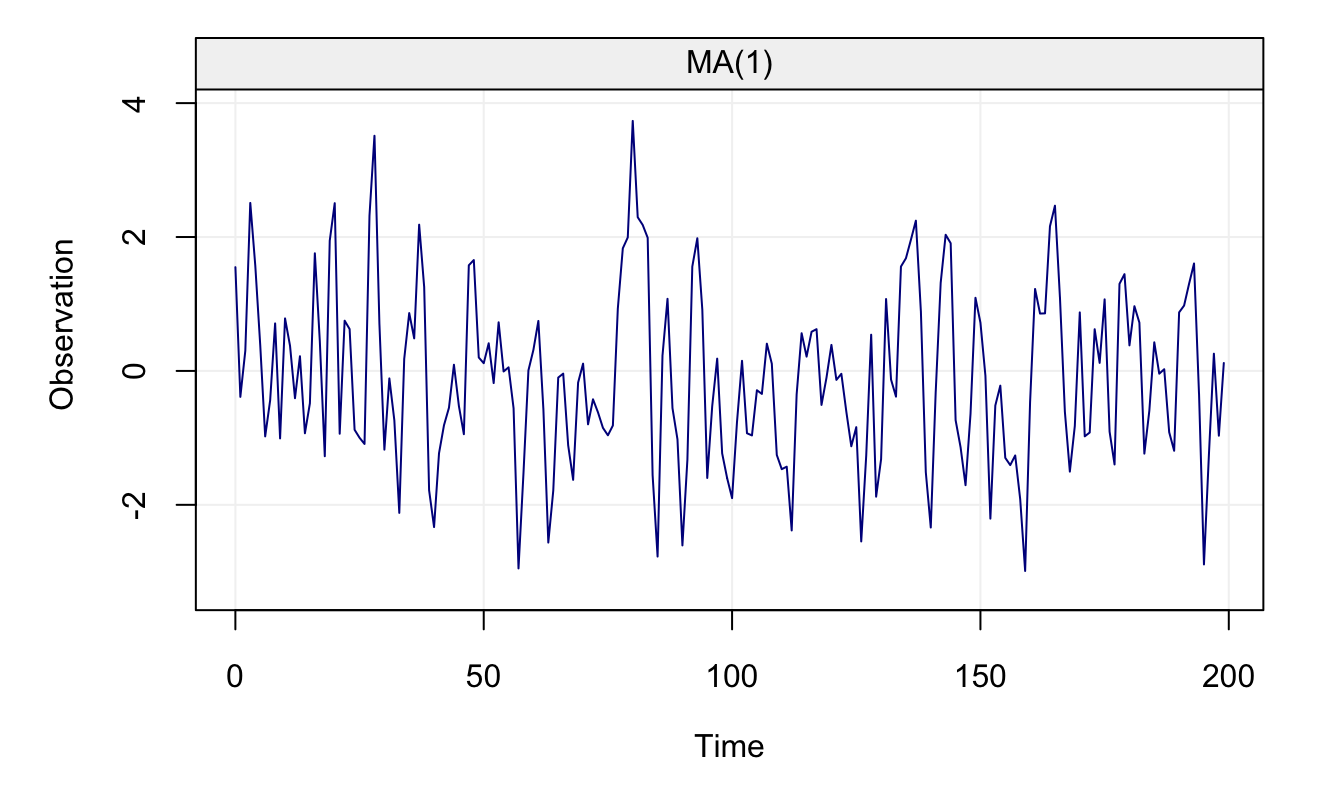

4.3 Diagnostic Tools for Time Series

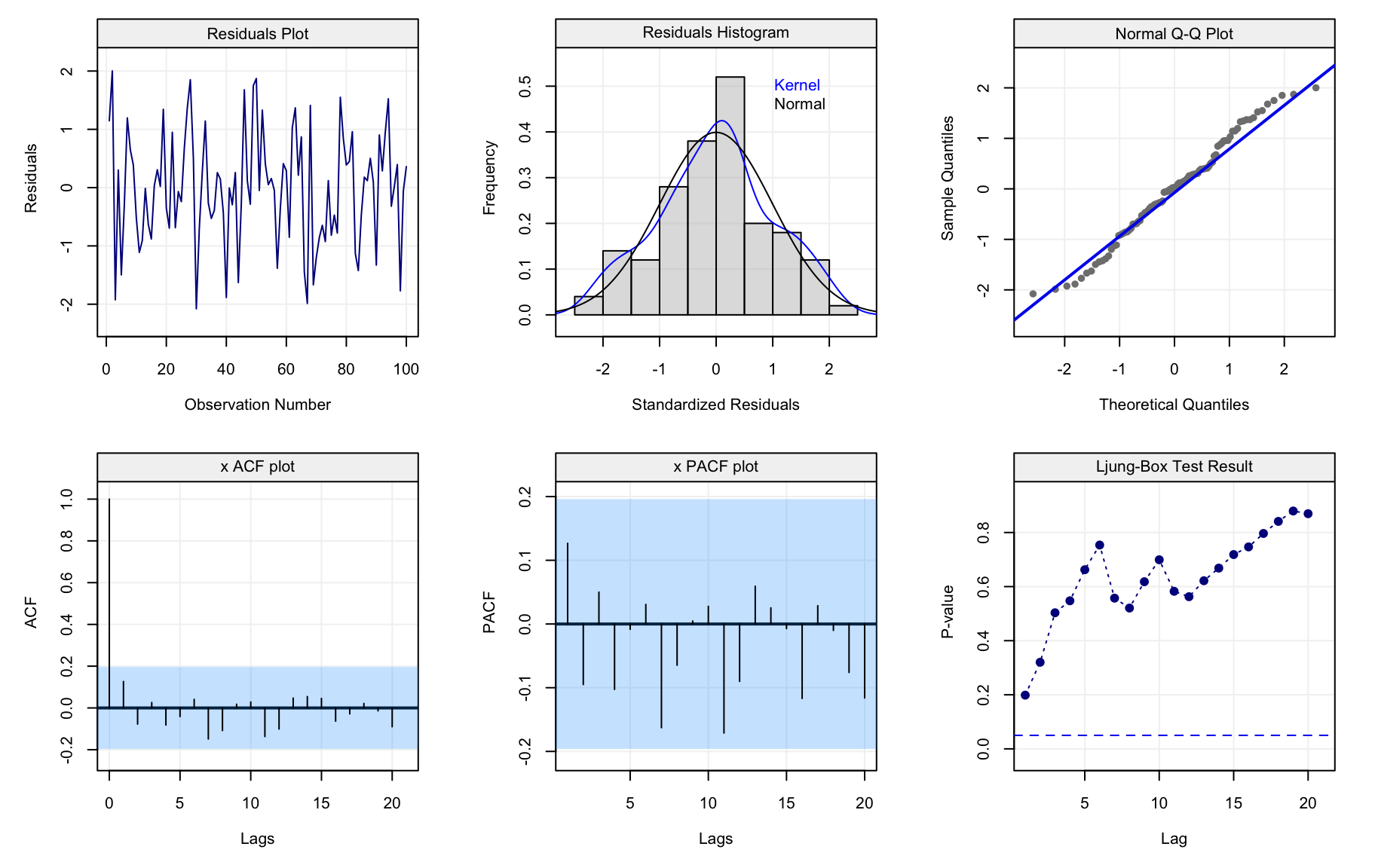

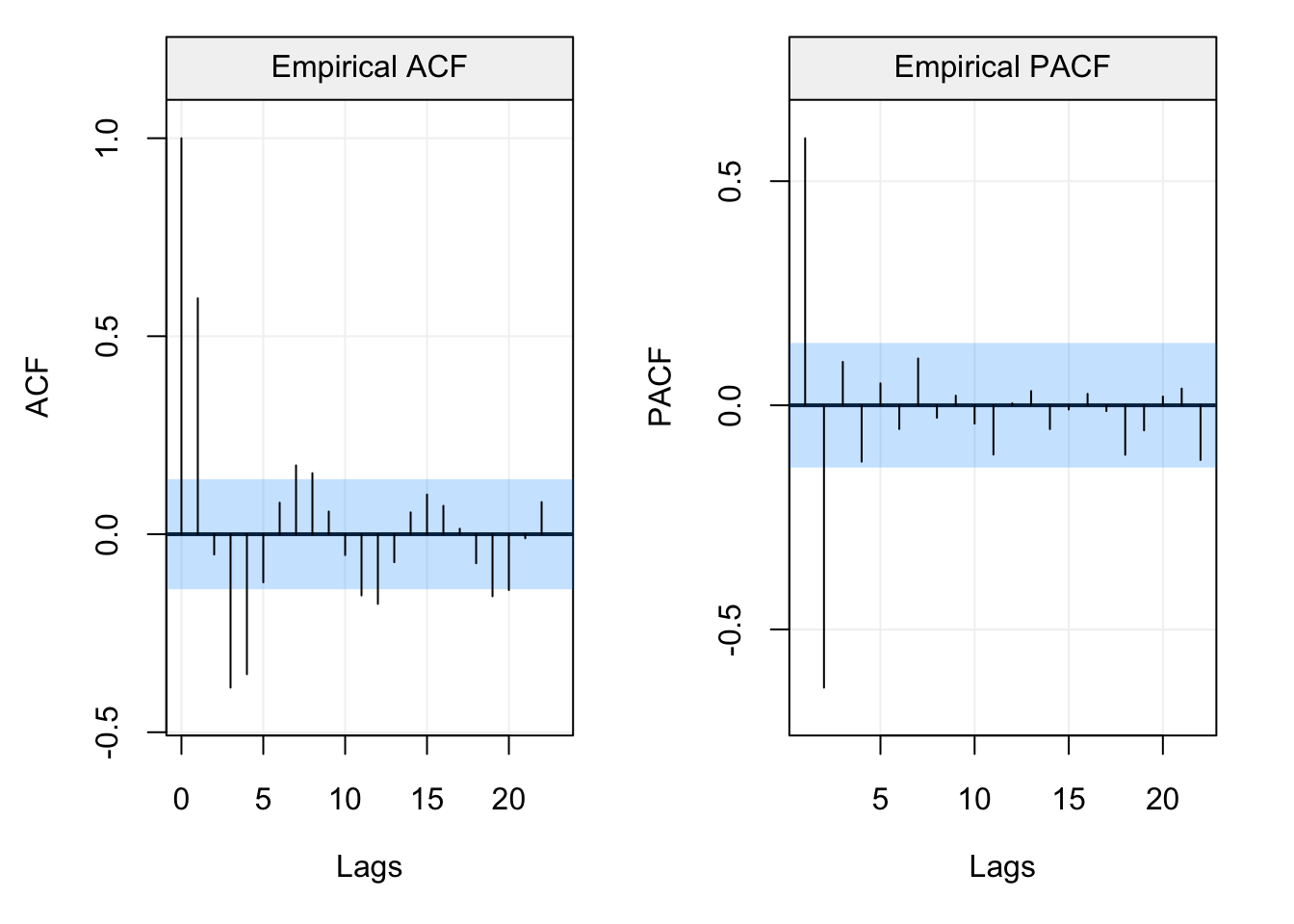

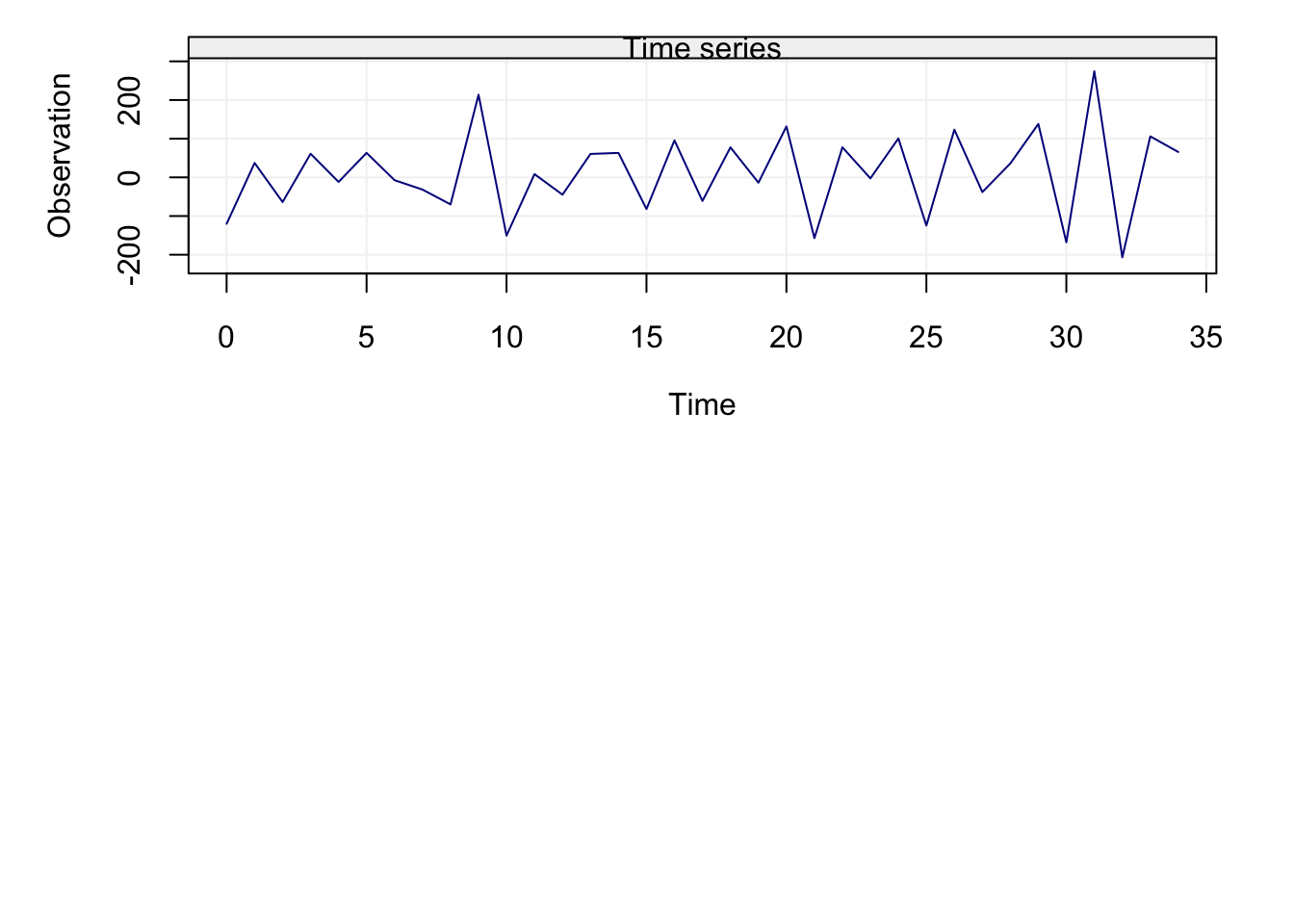

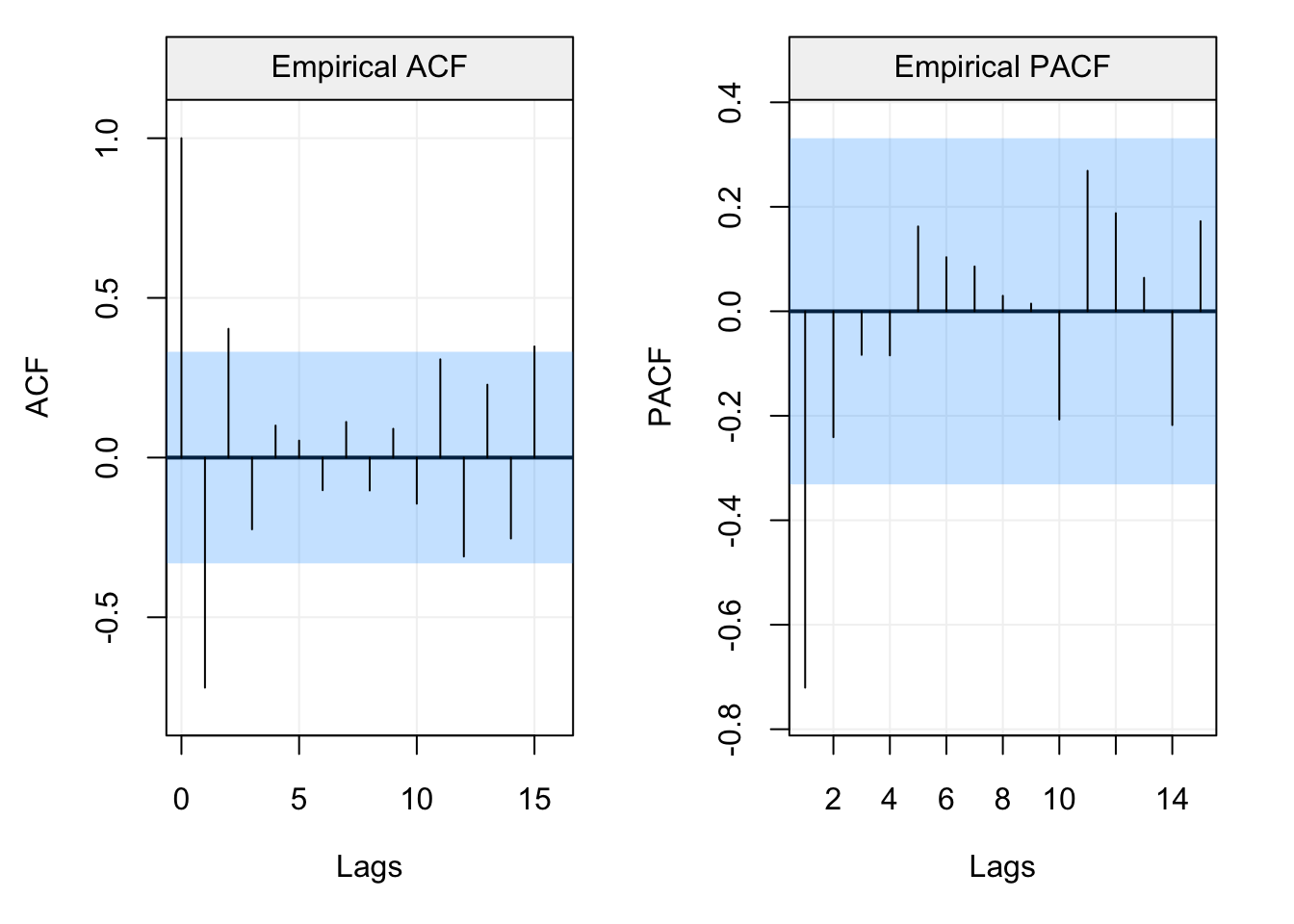

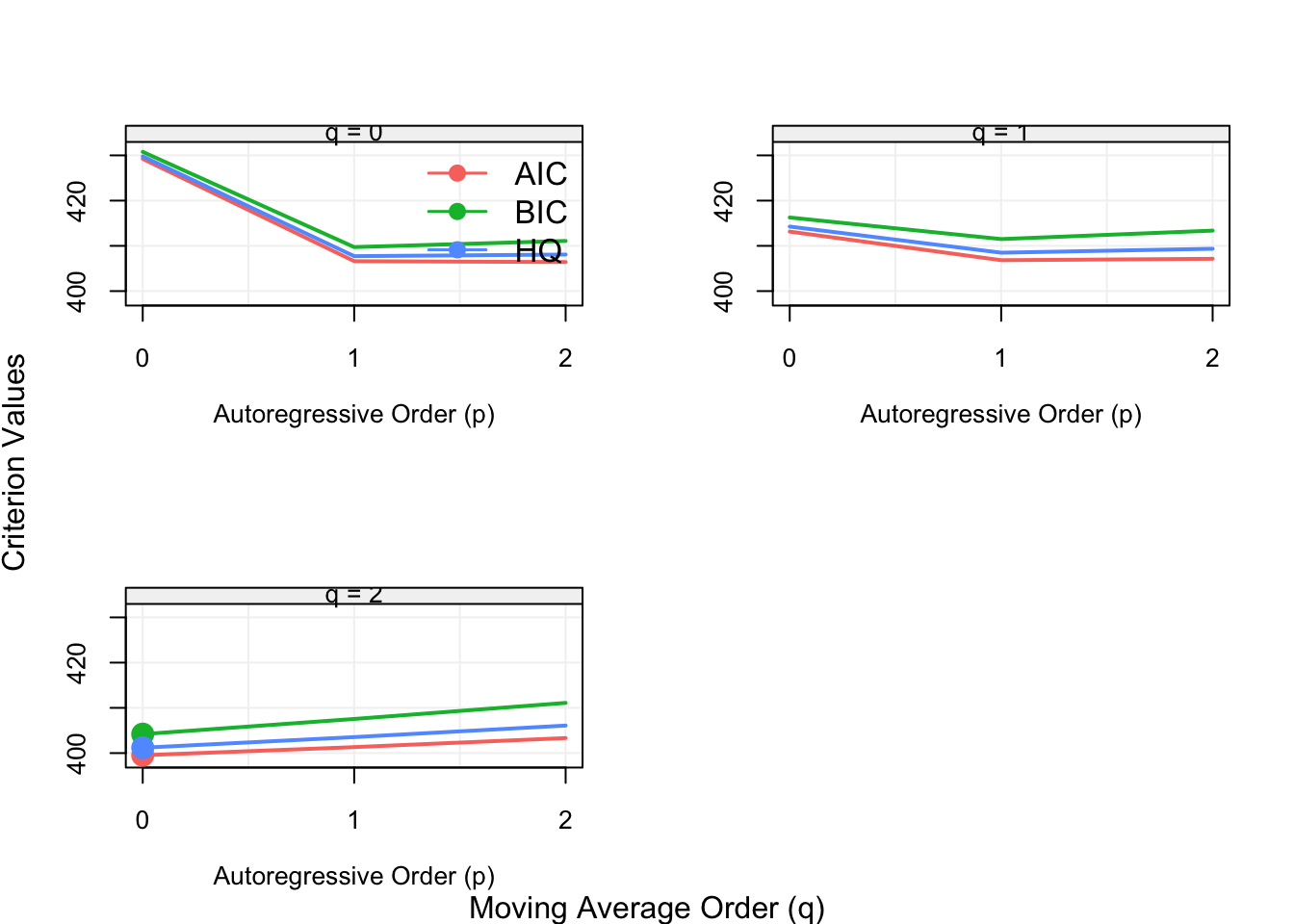

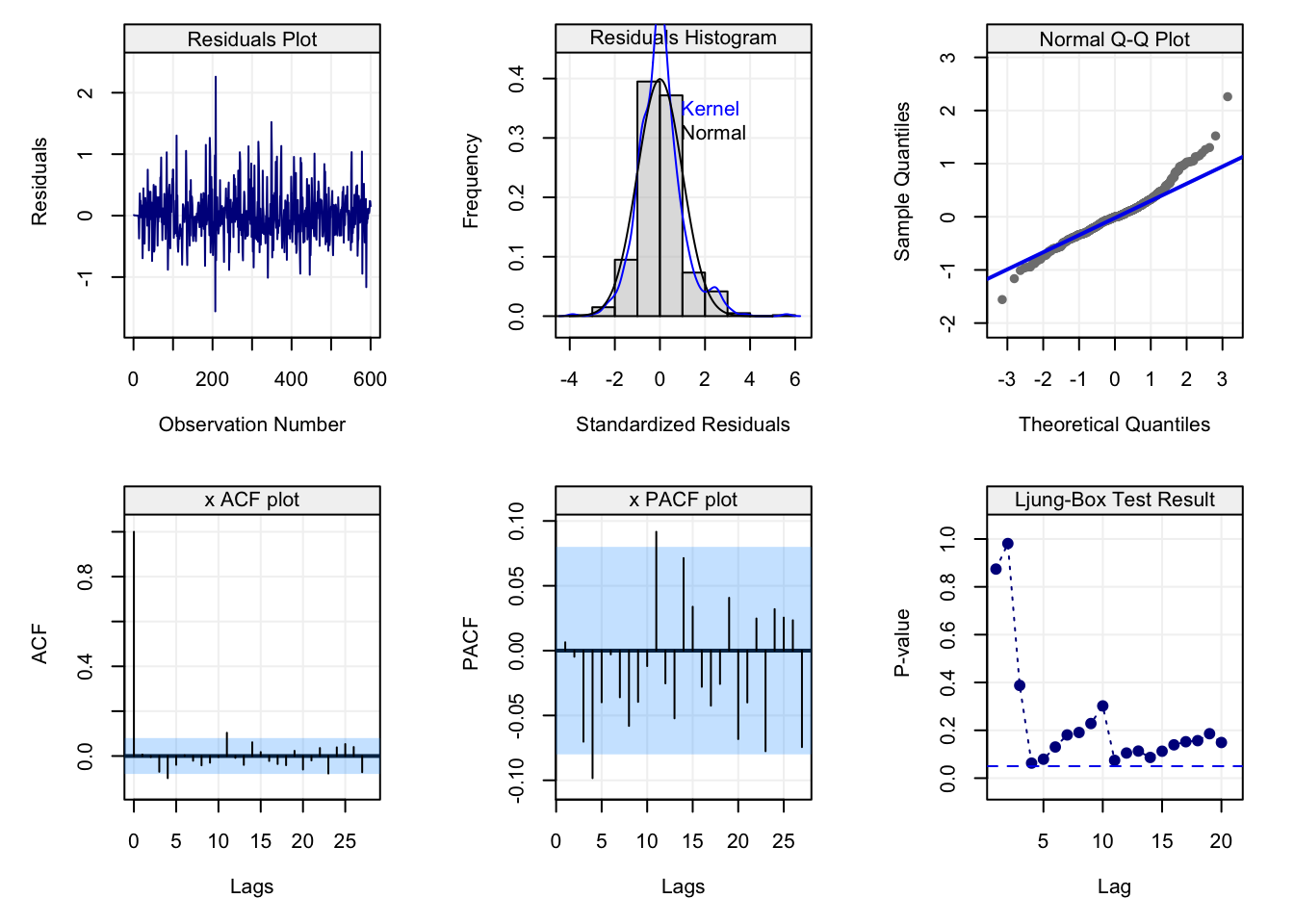

The standard approach to understanding how well a model fits the data is analysing the residuals (e.g. linear regression). The same approach can be taken for time series analysis where, given our knowledge on forecasts from the previous section, we can now deliver residuals. The first step (and possibly the most important) is to use visual tools to check the residuals and also the original time series. Below is a figure that collects different diagnostic tools for time series analysis and is applied to a simulated AR(1) process of length \(T = 100\).

set.seed(333)

true_model = AR(phi = 0.8, sigma2 = 1)

Xt = gen_gts(n = 100, model = true_model)

model = estimate(AR(1), Xt, demean = FALSE)

check(model = model)

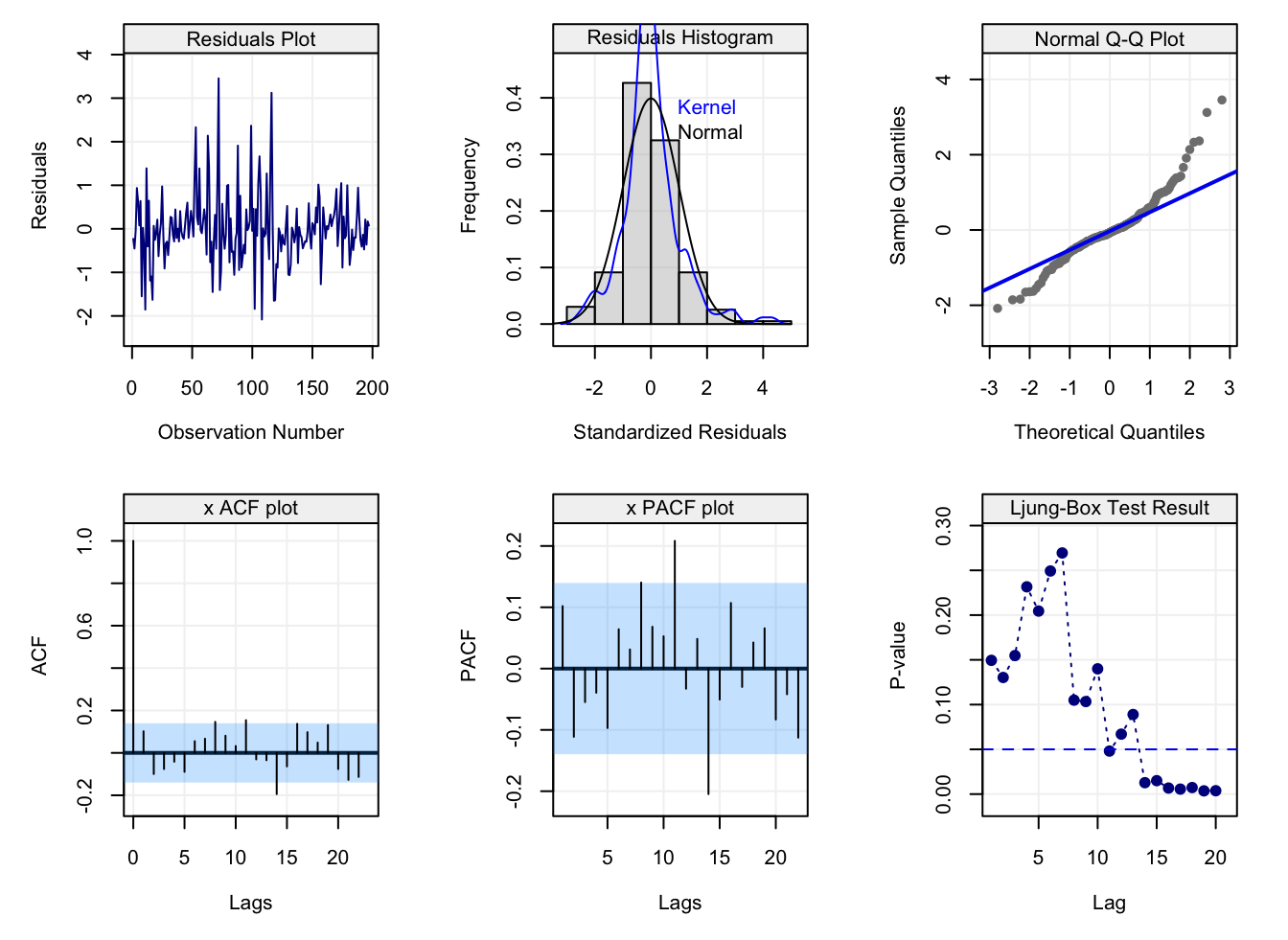

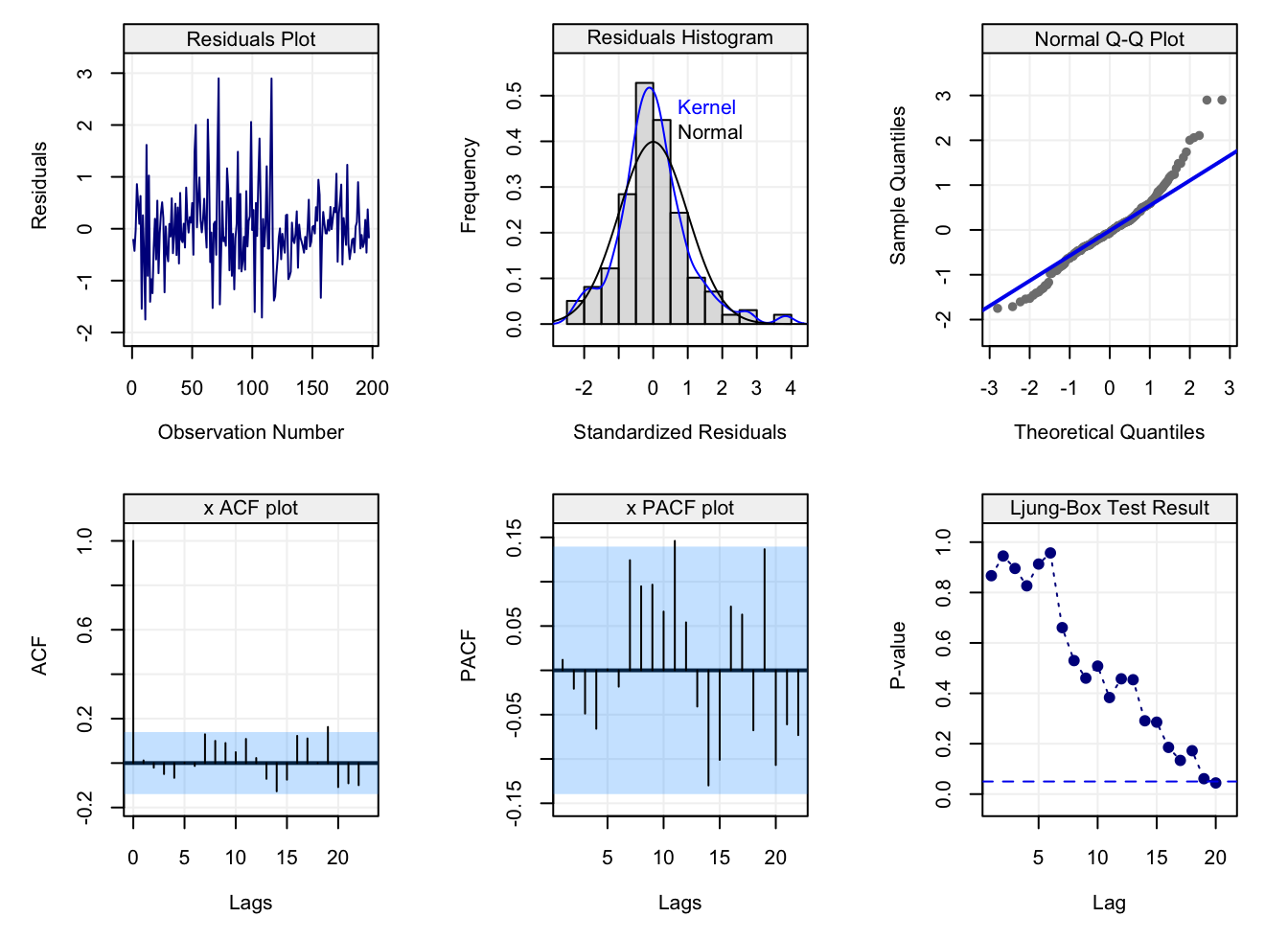

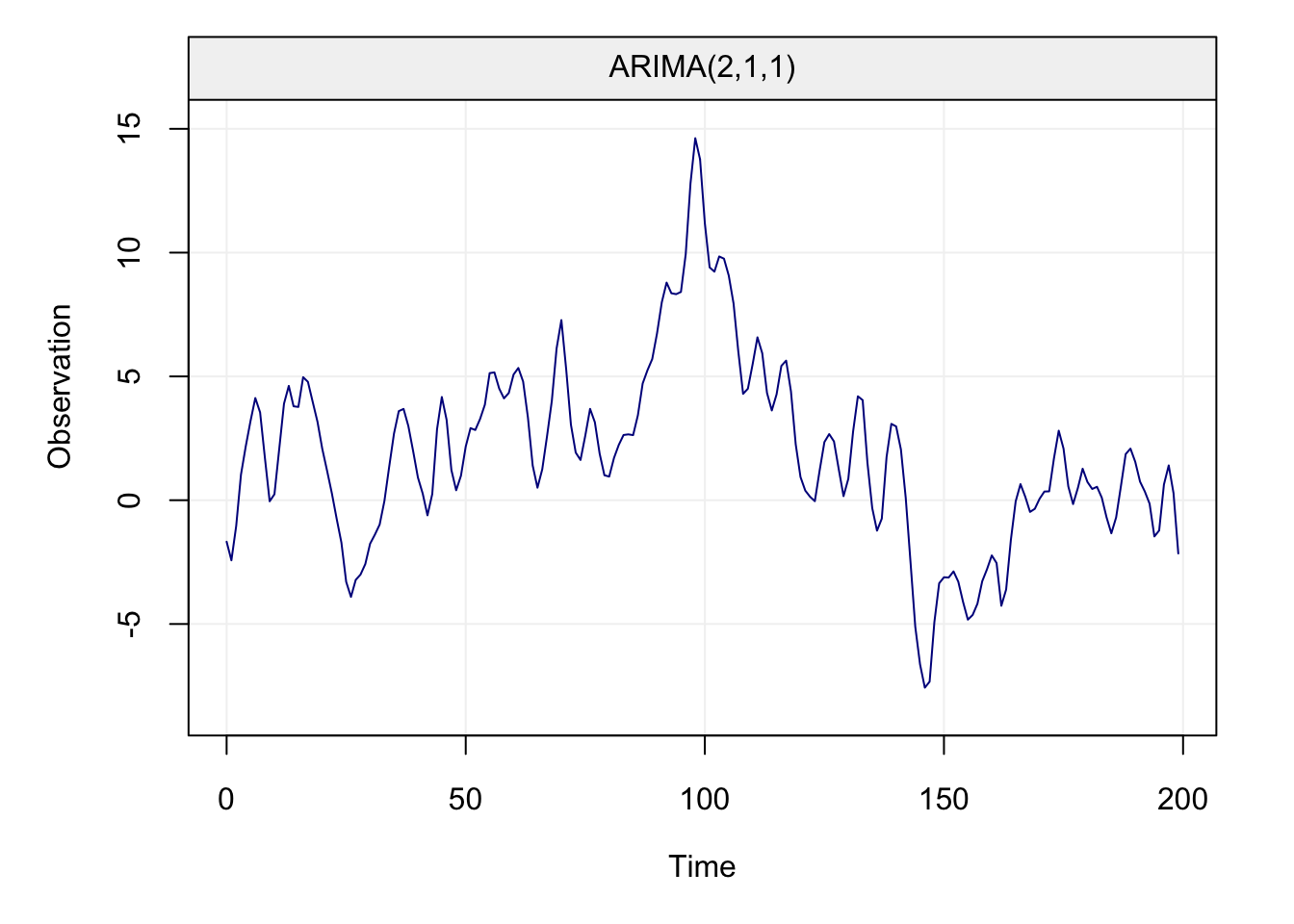

(#fig:diagnostic_plot)Ljung-Box test p-values for the residuals of the fitted AR(1) model over lags \(h = 1, ...., 20\).

All plots refer to the residuals of the model-fit and aim at visually assessing whether the fitted time series model captures the dependence structure in the data. The first row contains plots that can be interpreted in the same manner as the residuals from a linear regression. The first plot simply represents the residuals over time and should show if there is any presence of trends, seasonality or heteroskedasticity. The second plot gives a histogram (and smoothed histrogram called a kernel) of the residuals which should be centered in zero and (possibly) have an approximate normal distribution. The latter hypothesis can also be checked using the third plot which consists in a Normal quantile-quantile plot and the residuals should lie on (or close to) the diagonal line representing correspondance between the distributions.

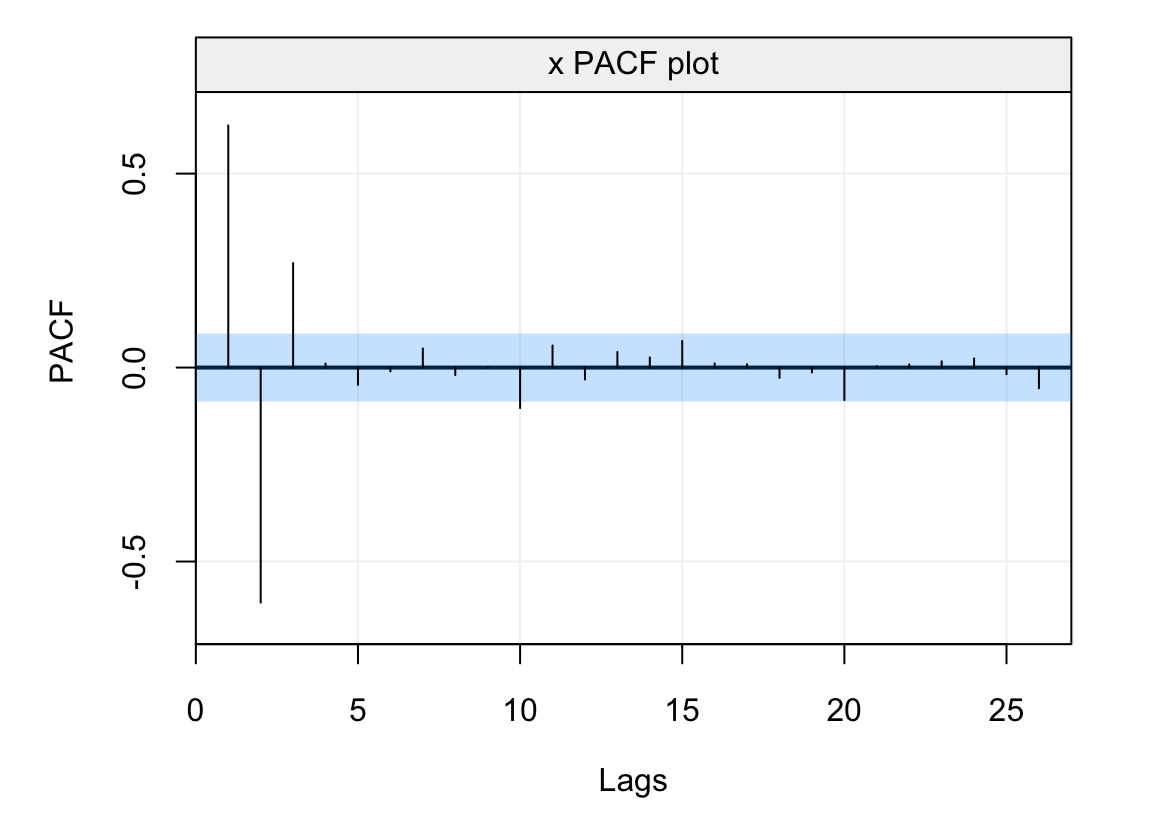

The first plot in the second row in the above figureshows the estimated ACF. It can be seen how the estimated autocorrelations all lie within the blue shaded area representing the confidence intervals. The second plot represents the Partial AutoCorrelation Function (PACF) and is another tool that will be investigated further on. In the meantime, suffice it to say that this tool can be interpreted as a different version of the ACF which measures autocorrelation conditionally on the previous lags (in some sense, it measures the direct impact of \(X_t\) on \(X{t+h}\) removing the influence of all observations inbetween). In this case, we see that also the estimated PACF lies within the confidence intervals and, along with the intepretation of the ACF, we can state that the model appears to fit the time series reasonably well since the residuals can be considered as following a white noise process. Finally, the last plot visualises the “Ljung-Box Test” (which is a type of Portmanteau test) that tests whether the autocorrelation of the residuals at a set of lags is different from zero. The plot represents the p-value of this test at the different lags and also shows a dashed line representing the common significance level of \(\alpha = 0.05\). If the modelling has done a good job, all p-values should be larger than this level. In this case, using the latter level, we can see that the autocorrelations for the residuals appear to be non-significant and therefore fitting an AR(1) model to the time series (which is the true model in this example) appears to do a reasonably good job.

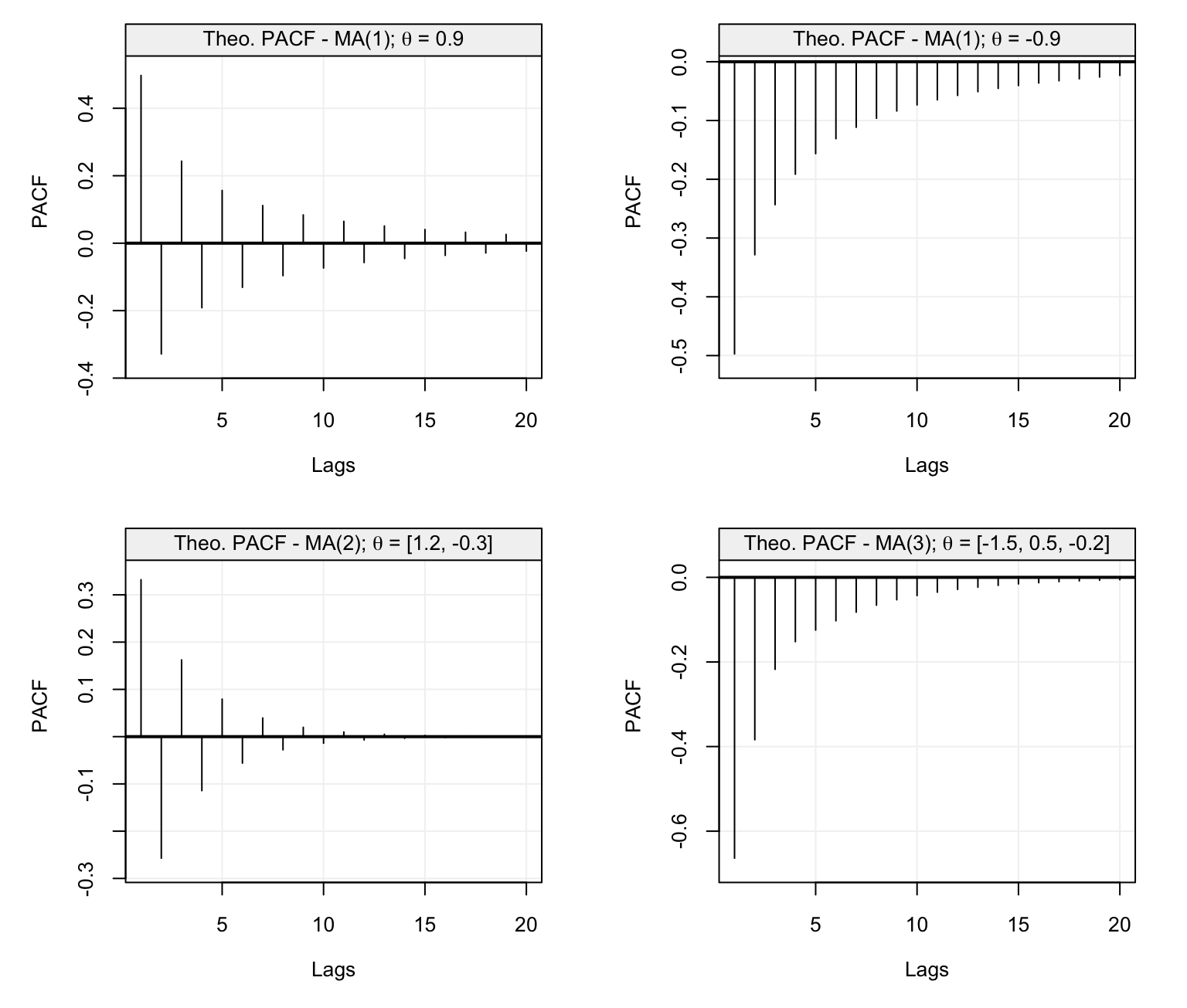

4.3.1 The Partial AutoCorrelation Function (PACF)

Before further discussing these diagnostic tools, let us focus a little more on the PACF mentioned earlier. As briefly highlighted above, this function measures the degree of linear correlation between two lagged observations by removing the effect of the observations in the intermediate lags. For example, let us assume we have three correlated variables \(X\), \(Y\) and \(Z\) and we wish to find the direct correlation between \(X\) and \(Y\): we can apply a linear regression of \(X\) on \(Z\) (to obtain \(\hat{X}\)) and \(Y\) on \(Z\) (to obtain \(\hat{Y}\)) and thereby compute \(corr(\hat{X}, \hat{Y})\) which represents the partial autocorrelation. In this manner, the effect of \(Z\) on the correlation between \(X\) and \(Y\) is removed. Considering the variables as being indexed by time (i.e. a time series), we can therefore define the following quantities \[\hat{X}_{t+h} = \beta_1 X_{t+h-1} + \beta_2 X_{t+h-2} + ... + \beta_{h-1} X_{t+1},\] and

\[\hat{X}_{t} = \beta_1 X_{t+1} + \beta_2 X_{t+2} + ... + \beta_{h-1} X_{t+h-1},\] which represent the regressions of \(X_{t+h}\) and \(X_t\) on all intermediate observations. With these defintions, let us more formally define the PACF.

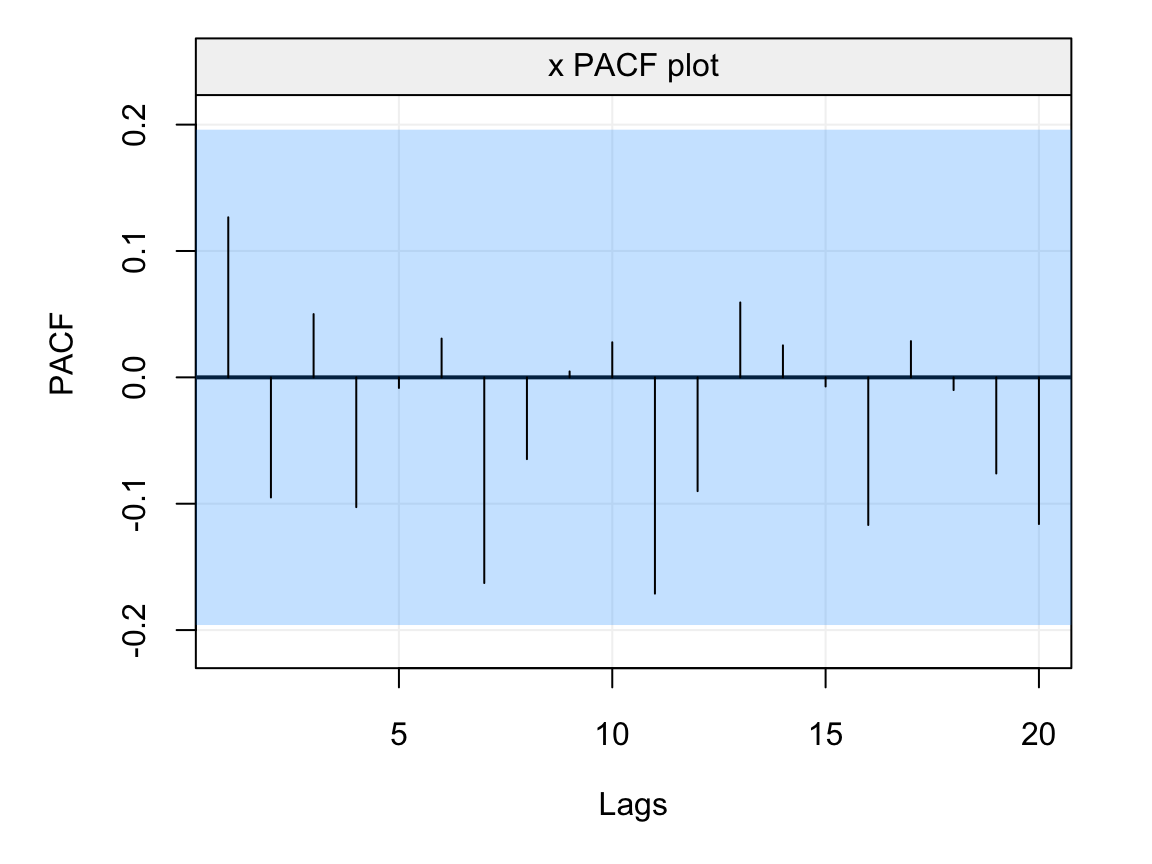

Therefore, the PACF is not defined at lag zero while it is the same as the ACF at the first lag since there are no intermediate observations. The defintion of this function is important for different reasons. Firstly, as mentioned earlier, we have an asymptotic distribution for the estimator of the PACF which allows us to deliver confidence intervals as for the ACF. Indeed, representing the empirical PACF along with its confidence intervals can provide a further tool to make sure that the residuals of a model fit can be considered as being white noise. The latter is a reasonable hypothesis if both the empirical ACF and PACF lie within the confidence intervals indicating that there is no significant correlation between lagged variables. Let us focus on the empirical PACF of the residuals we saw earlier in the diagnostic plot.

residuals = model$mod$residuals

plot(auto_corr(residuals, pacf = TRUE))

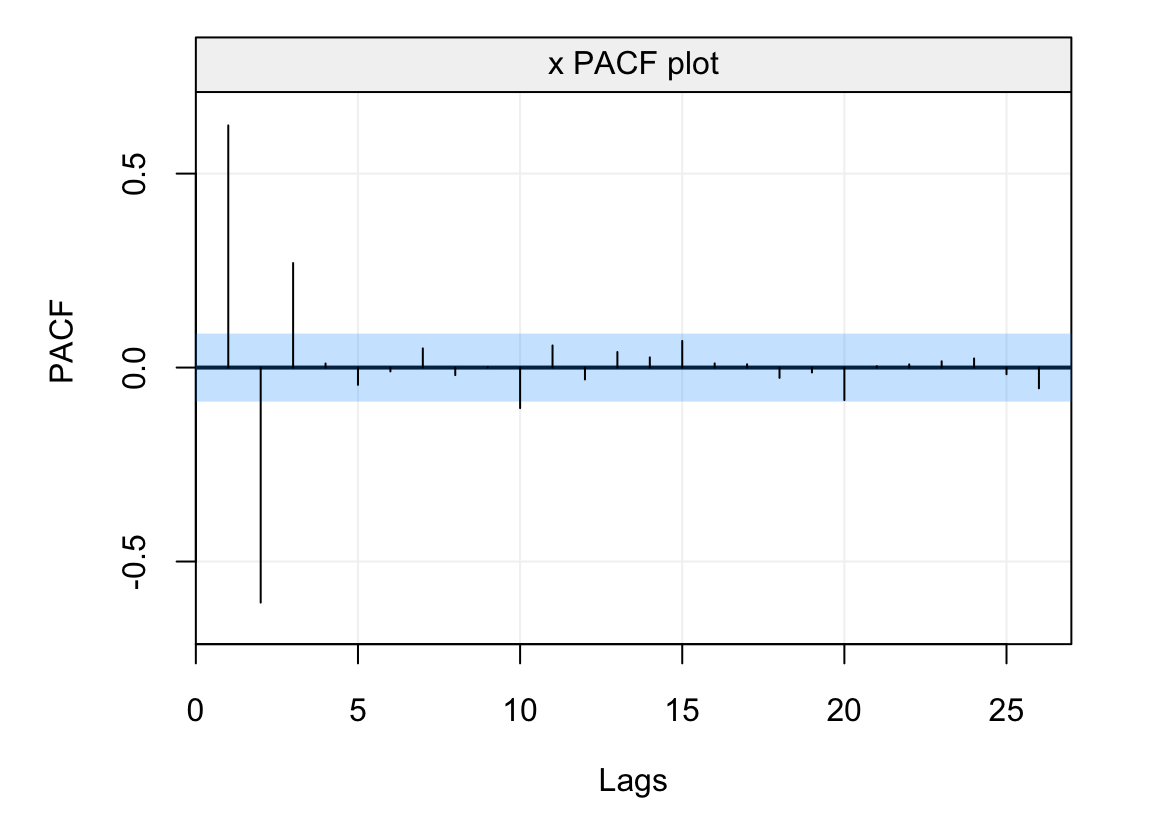

Figure 4.9: Empirical PACF on the residuals of the AR(1) model fitted to the simulated time series generated from an AR(1) process.

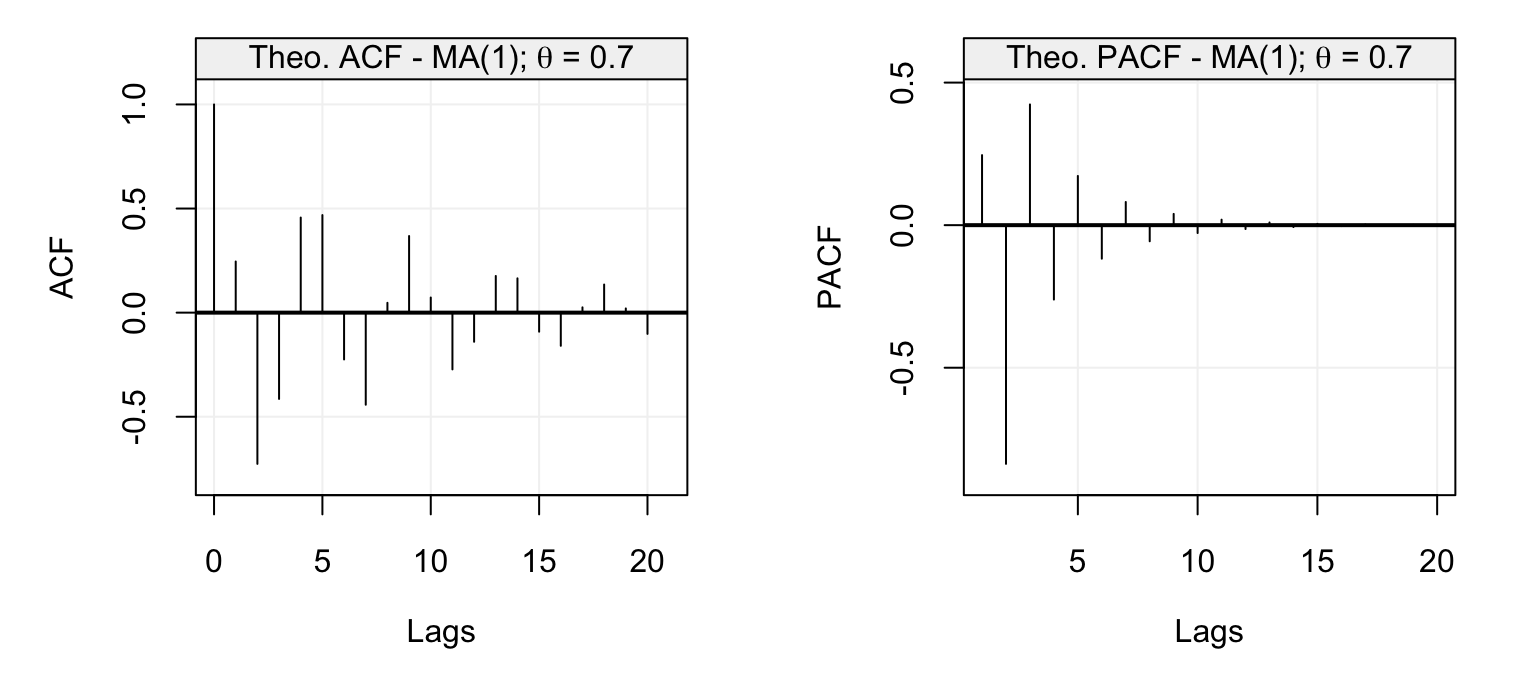

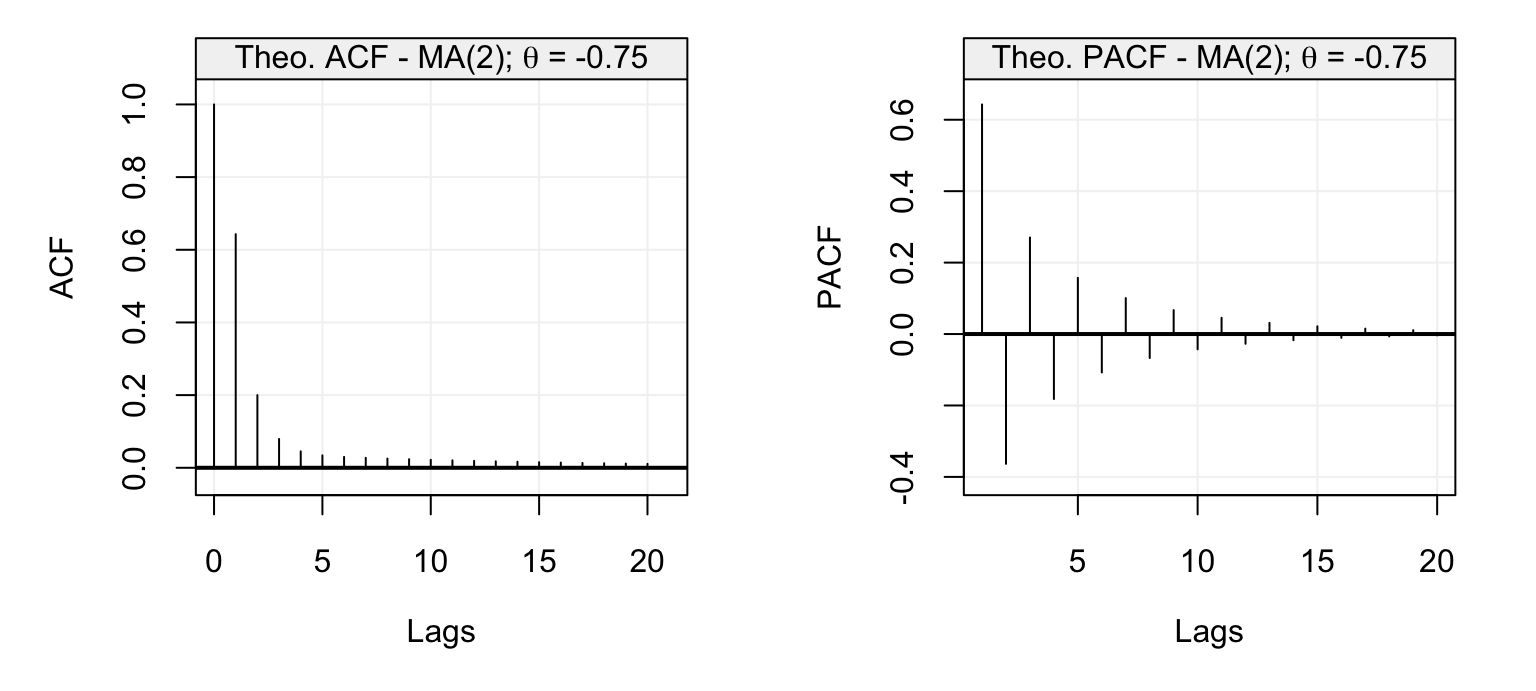

From the plot we see that the estimated PACF at all lags lies within the blue shaded area representing the 95% confidence intervals indicating that the residuals don’t appear to have any form of direct autocorrelation over lags and, hence, that they can be considered as white noise. In addition to delivering a tool to analyse the residuals of a model fit, the PACF is especially useful for diagnostic purposes in order to detect the possible models that have generated an observed time series. With respect to the class of models studied this far (i.e. AR(\(p\)) models), the PACF can give important insight to the order of the AR(\(p\)) model, more specifically the value of \(p\). Indeed, the ACF of an AR(\(p\)) model tends to decrease in a sinusoidal fashion and exponetially fast to zero as the lag \(h\) increases thereby giving a hint that the underlying model belongs to the AR(\(p\)) family. However, aside from hinting to the fact that the model belongs to the family of AR(\(p\)) models, the latter function tends to be less informative with respect to which AR(\(p\)) model (i.e. which value of \(p\)) is best suited for the observed time series. The PACF on the other hand is non-zero for the first \(p\) lags and then becomes zero for \(h > p\), therefore it can give an important indication with respect to which order should be considered to best model the time series.

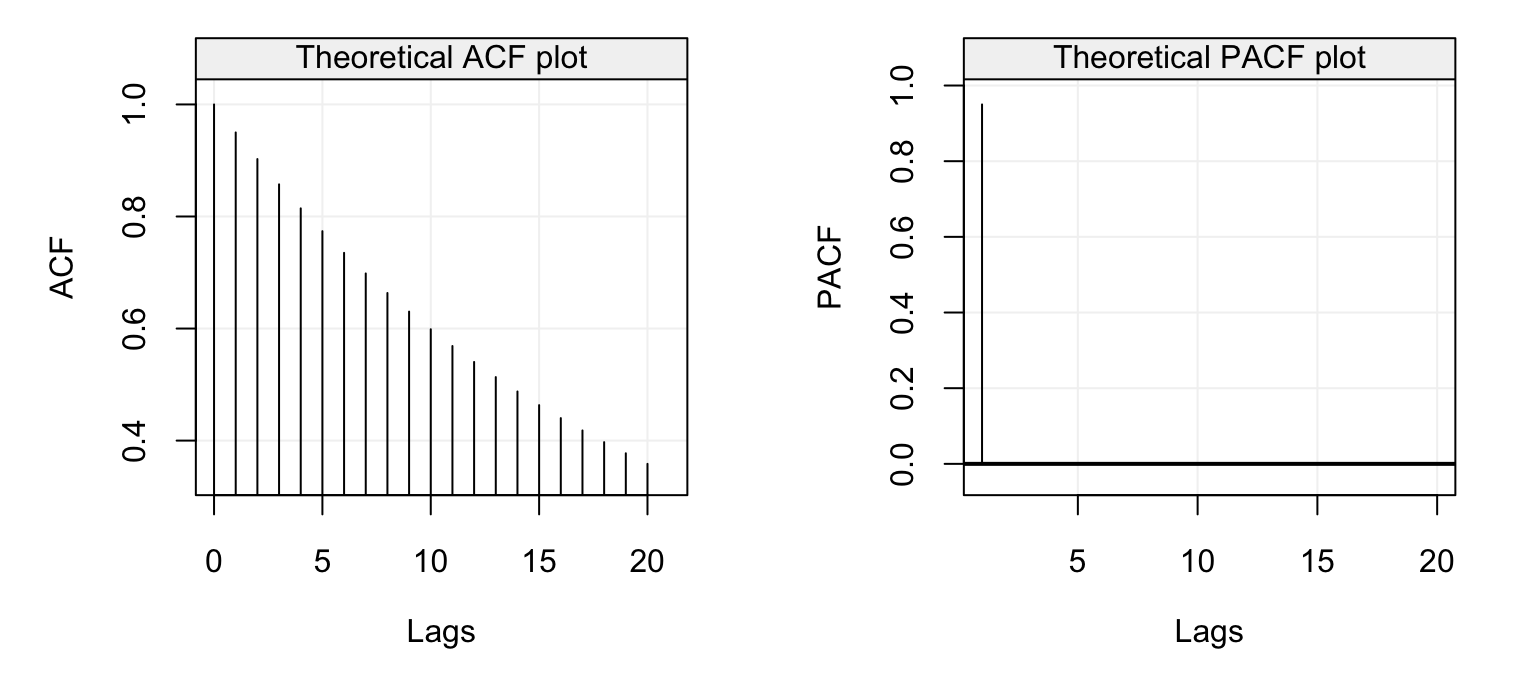

With the above discussion in mind, let us consider some examples where we study the theoretical ACF and PACF of different AR(\(p\)) models. The first is an AR(1) model with parameter \(\phi = 0.95\) and its ACF and PACF are shown below.

par(mfrow = c(1, 2))

plot(theo_acf(ar = 0.95, ma = NULL))

plot(theo_pacf(ar = 0.95, ma = NULL))

Figure 4.10: Theoretical ACF (left) and PACF (right) of an AR(1) model with parameter \(\phi = 0.95\).

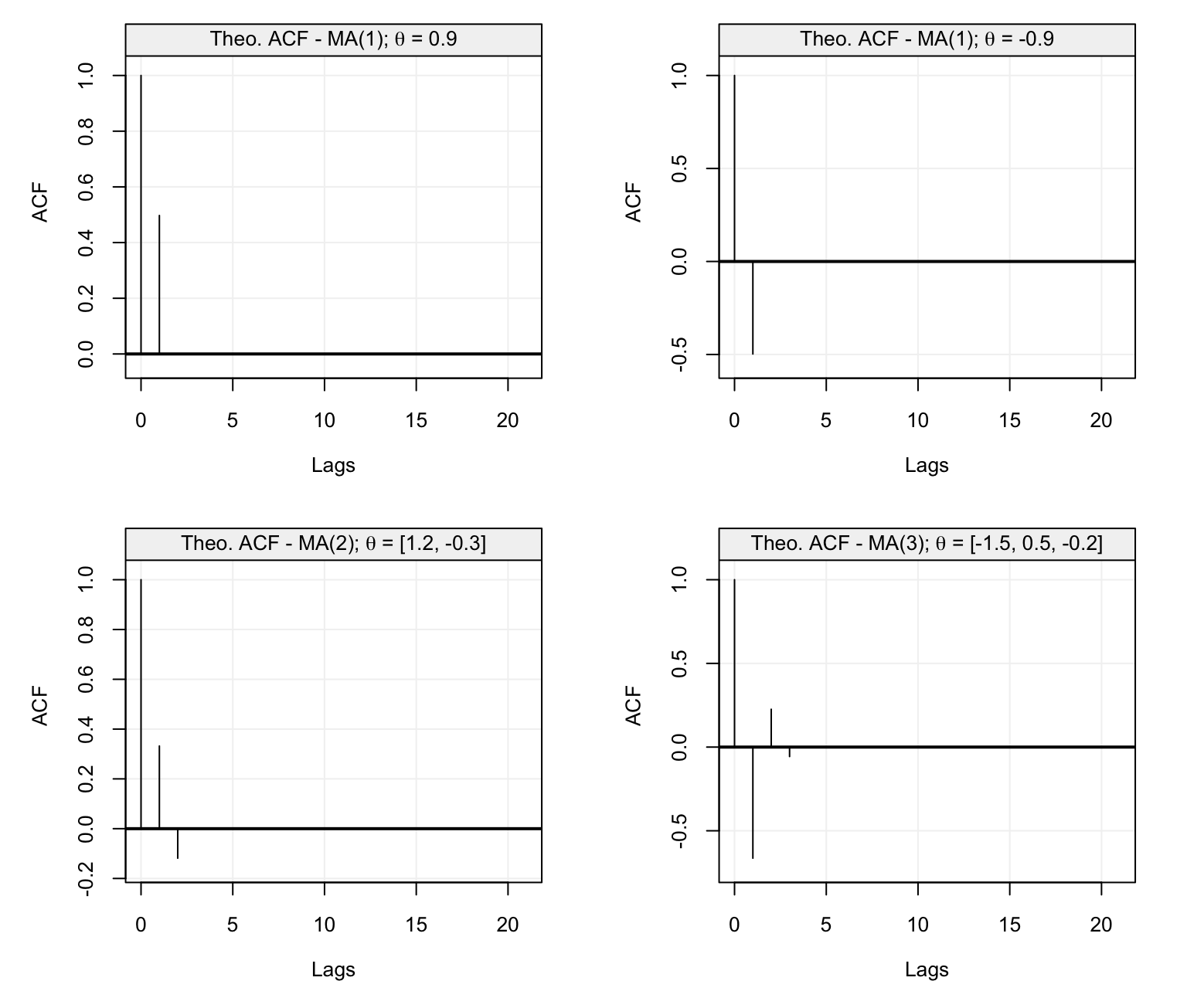

We can see that the ACF decreases exponentially fast as we would expect for an AR(p) model while the PACF has the same values of the ACF at lag 1 (which is always the case) and is zero for \(h > 1\). The latter therefore indicates the order \(p\) of the AR(\(p\)) model which in this case is \(p=1\). Let us now consider the following models as further examples:

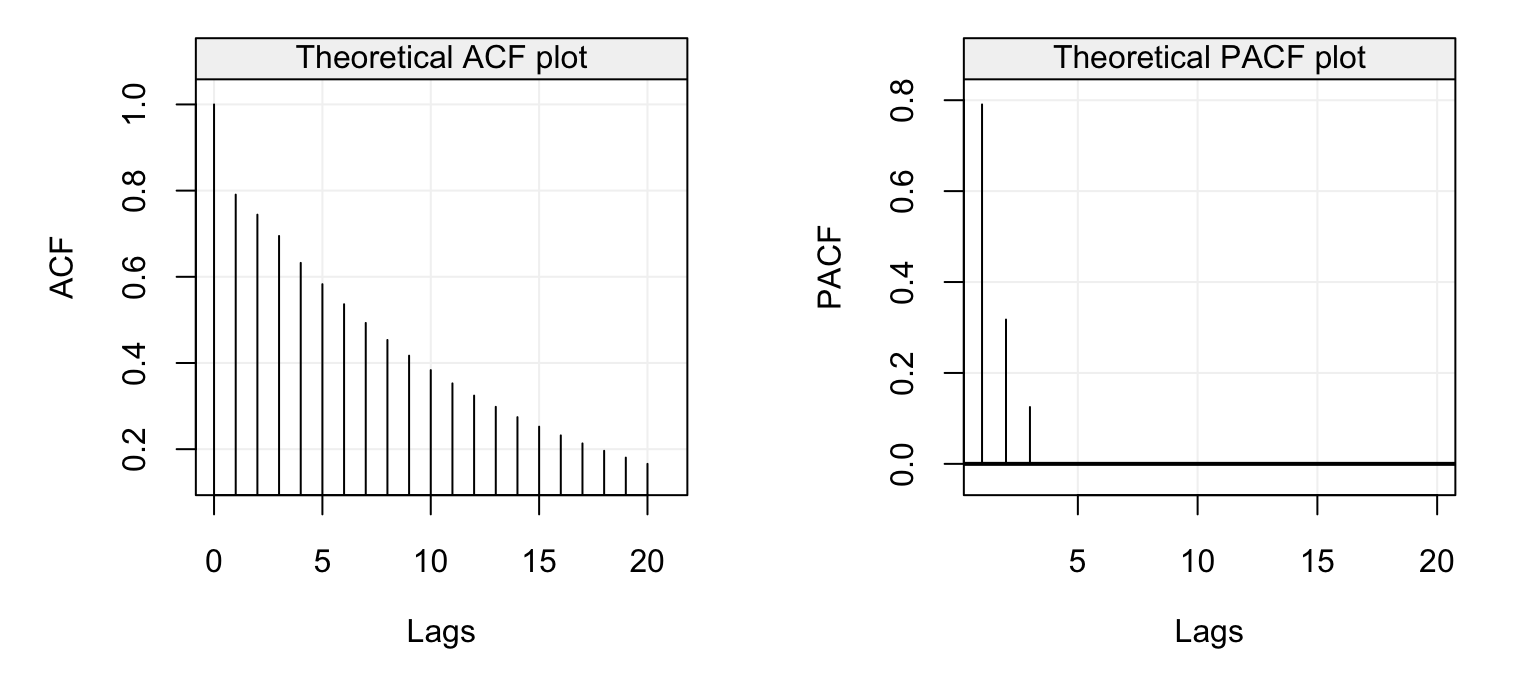

\[X_t = 0.5 X_{t-1} + 0.25 X_{t-2} + 0.125 X_{t-3} + W_t\]

and

\[X_t = -0.1 X_{t-2} -0.1 X_{t-4} + 0.6 X_{t-5} + W_t.\]

The first model is an AR(3) model with positive coefficients while the second is an AR(5) model where \(\phi_1 = \phi_3 = 0\). The ACF and PACF of the first AR(3) model is shown below.

par(mfrow = c(1, 2))

plot(theo_acf(ar = c(0.5, 0.25, 0.125), ma = NULL))

plot(theo_pacf(ar = c(0.5, 0.25, 0.125), ma = NULL))

Figure 4.11: Theoretical ACF (left) and PACF (right) of an AR(3) model with parameters \(\phi_1 = 0.95\), \(\phi_2 = 0.25\) and \(\phi_3 = 0.125\).

Again, we see that the ACF decreases exponentially and also the PACF plot shows a steady decrease until it becomes zero for \(h > 3\). This is because the values of \(\phi_i\) are all positive and decreasing as well. Let us now consider the second model (the AR(5) model) whose ACF and PACF plots are represented below.

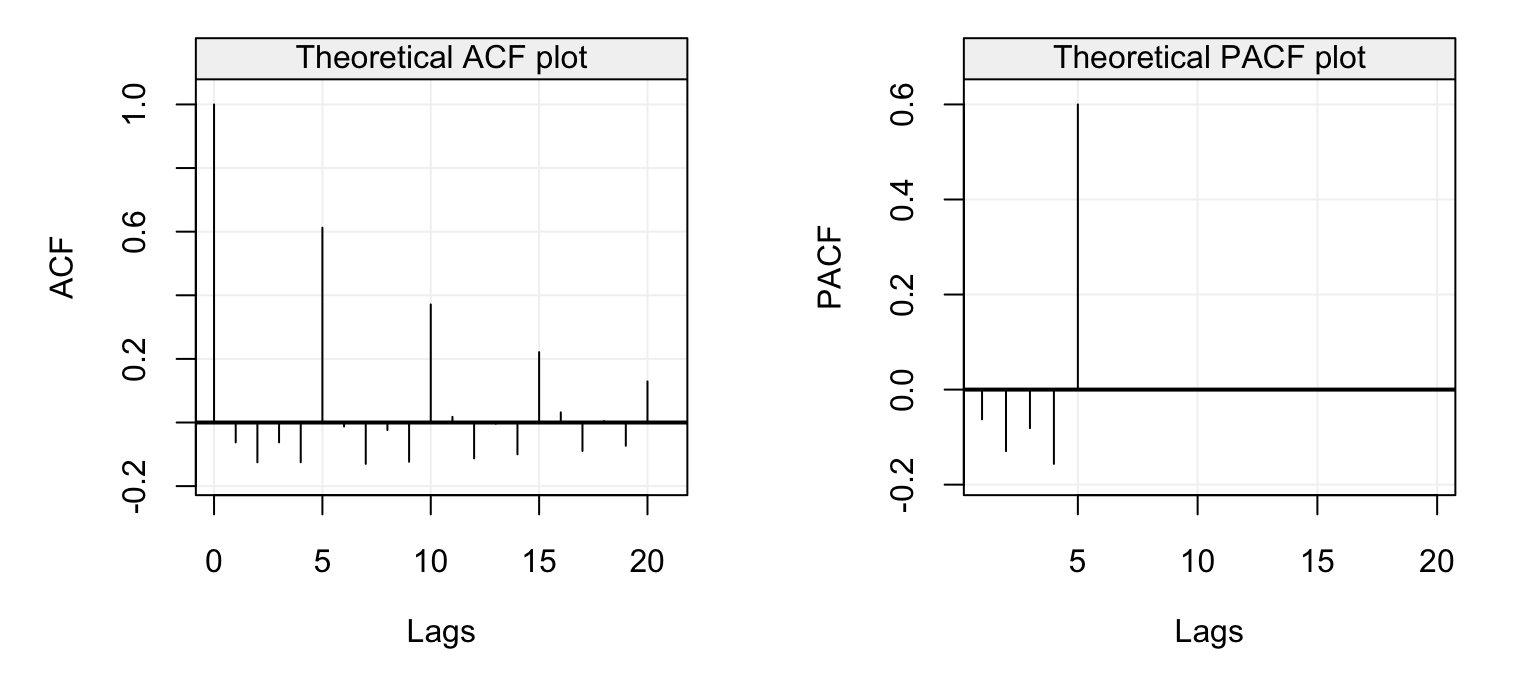

par(mfrow = c(1, 2))

plot(theo_acf(ar = c(0, -0.1, 0, -0.1, 0.6), ma = NULL))

plot(theo_pacf(ar = c(0, -0.1, 0, -0.1, 0.6), ma = NULL))

Figure 4.12: Theoretical ACF (left) and PACF (right) of an AR(4) model with parameters \(\phi_1 = 0\), \(\phi_2 = -0.1\), \(\phi_3 = 0\) and \(\phi_4 = 0\).

In this case we see that the ACF has a sinusoidal-like behaviour where the values of \(\phi_1 = \phi_3 = 0\) and the negative \(\phi_2 = \phi_4 = -0.1\) values deliver this alternating ACF form which nevertheless decreases as \(h\) increases. These values deliver the PACF plot on the right which is negative for the first lags and is greatly positive at \(h=5\) only to become zero for \(h > 5\).

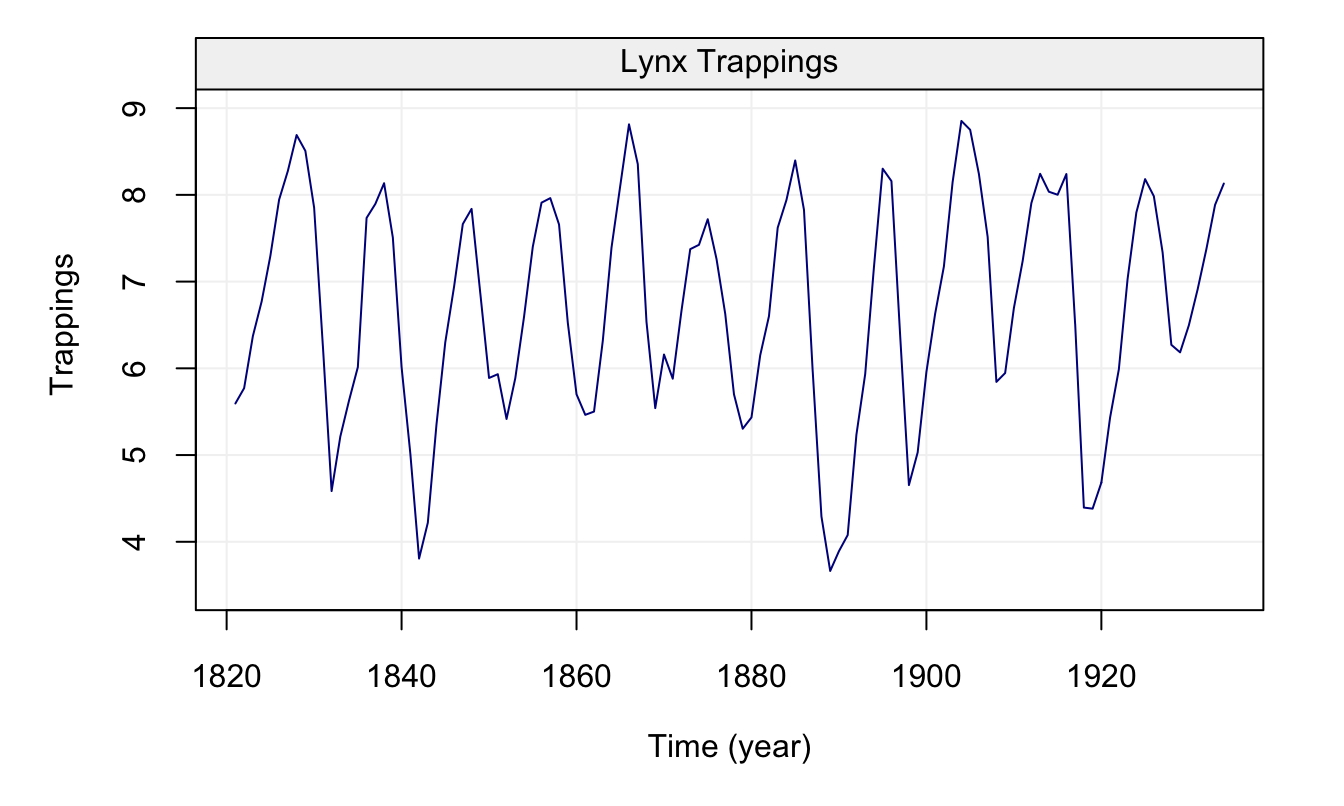

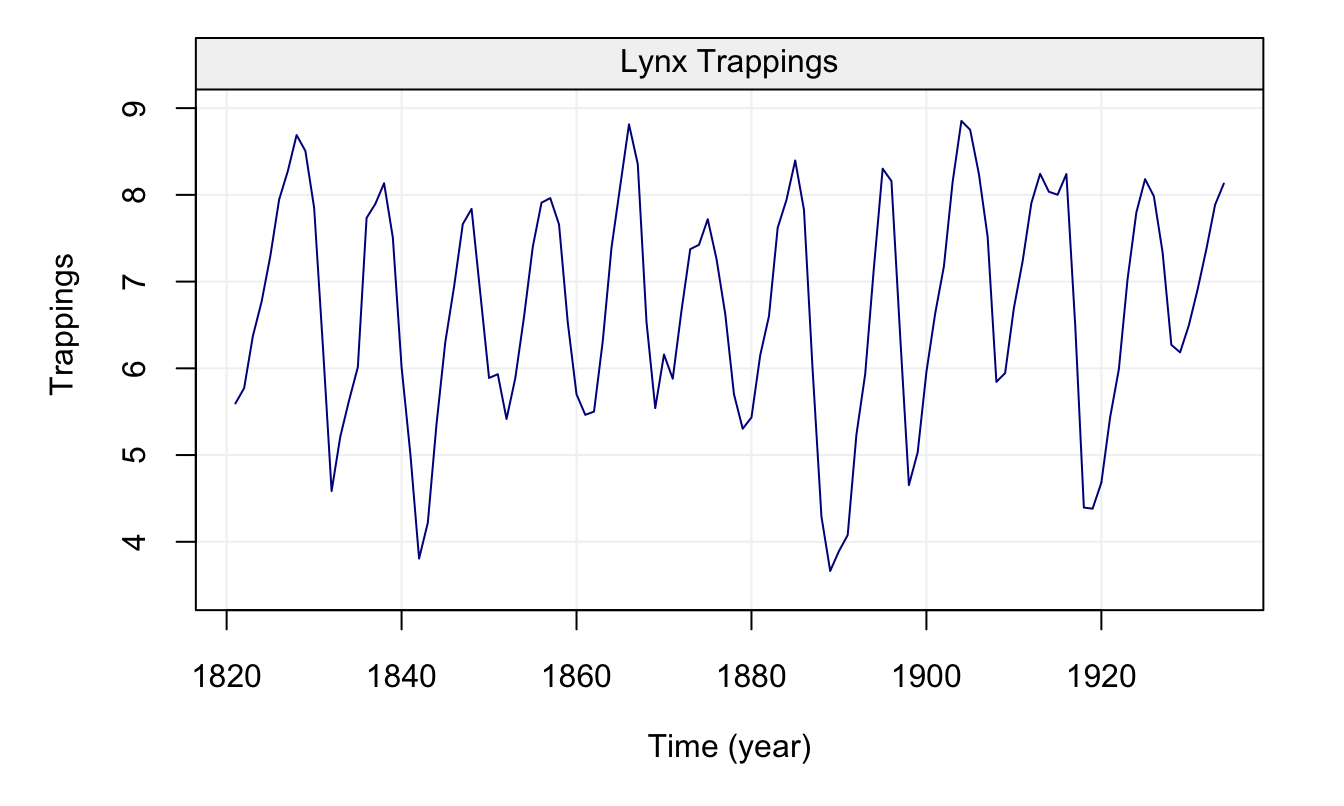

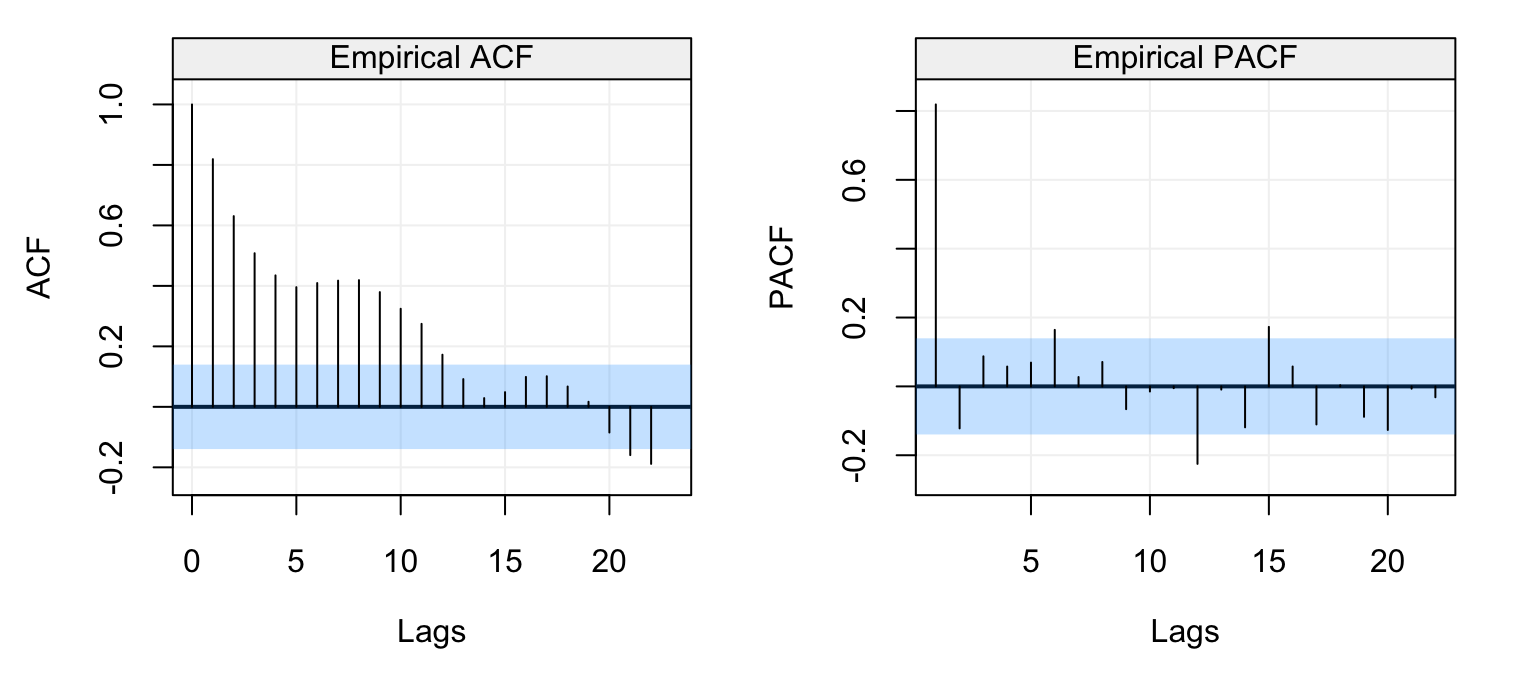

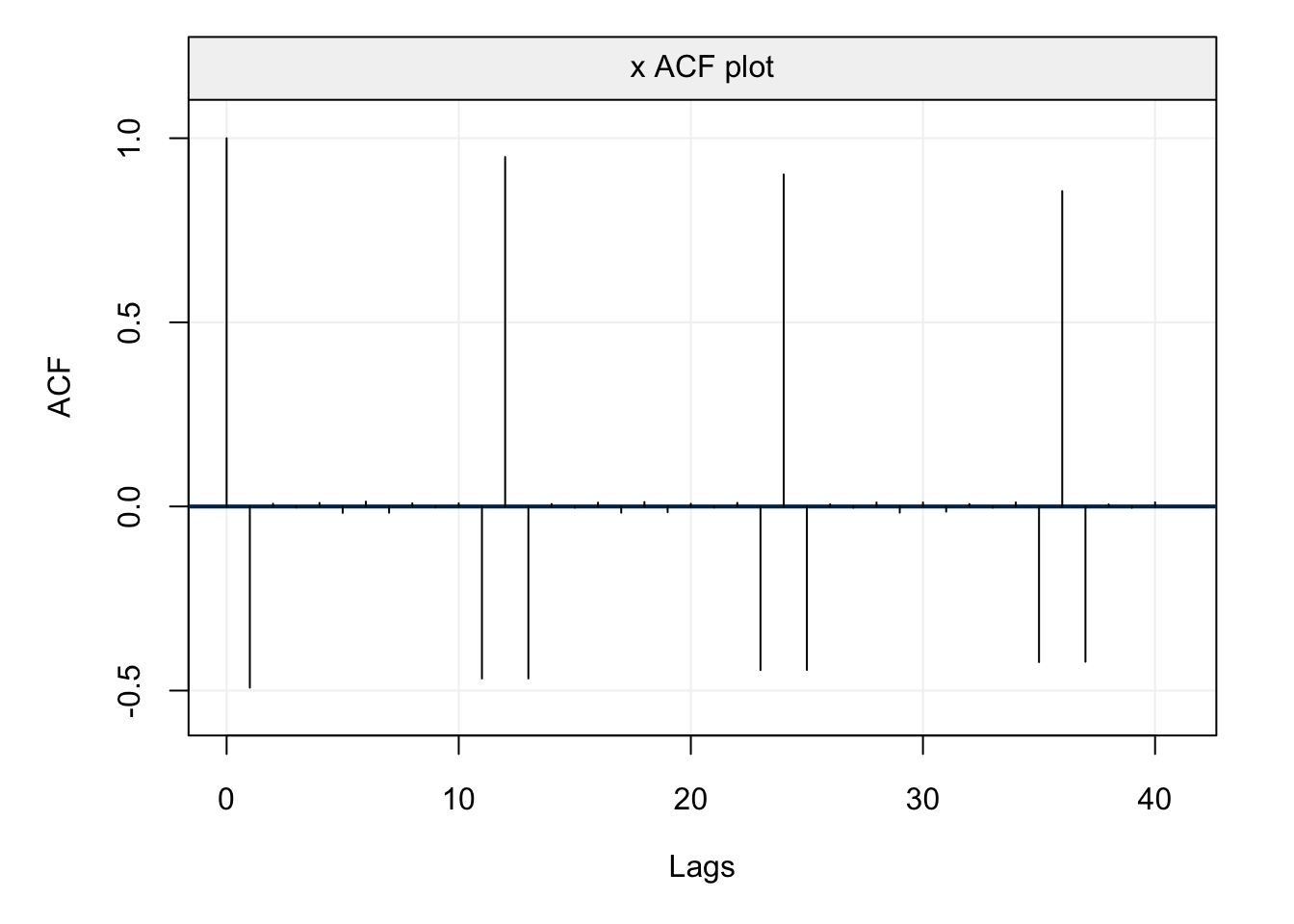

These examples therefore give us further insight as to how to interpret the empirical (or estimated) versions of these functions. For this reason, let us study the empirical ACF and PACF of some real time series, the first of which is the data representing the natural logarithm of the annual number of Lynx trappings in Canada between 1821 and 1934. The time series is represented below.

lynx_gts = gts(log(lynx), start = 1821, data_name = "Lynx Trappings", unit_time = "year", name_ts = "Trappings")

plot(lynx_gts)

Figure 4.13: Time series of annual number of lynx data trappings in Canada between 1821 and 1934.

We can see that there appears to be a seasonal trend within the data but let us ignore this for the moment and check the ACF and PACF plots below.

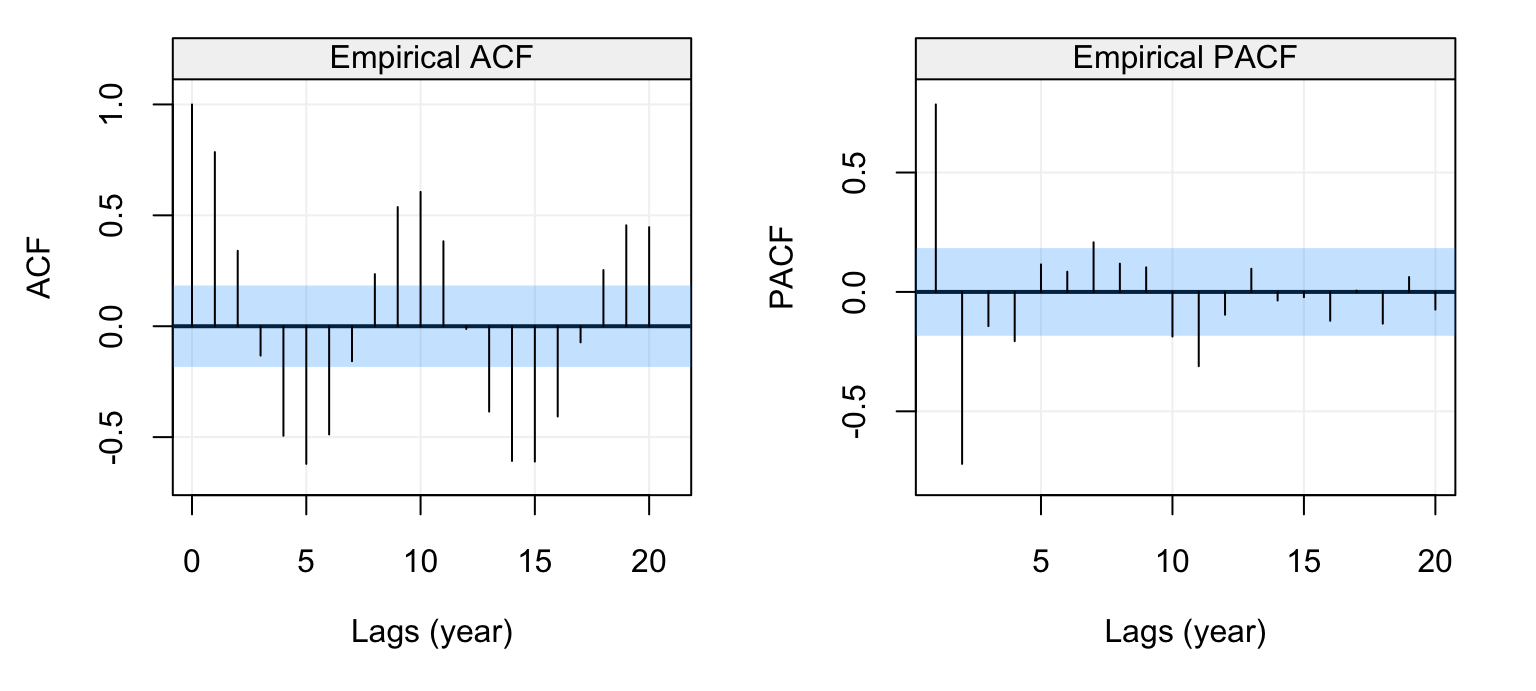

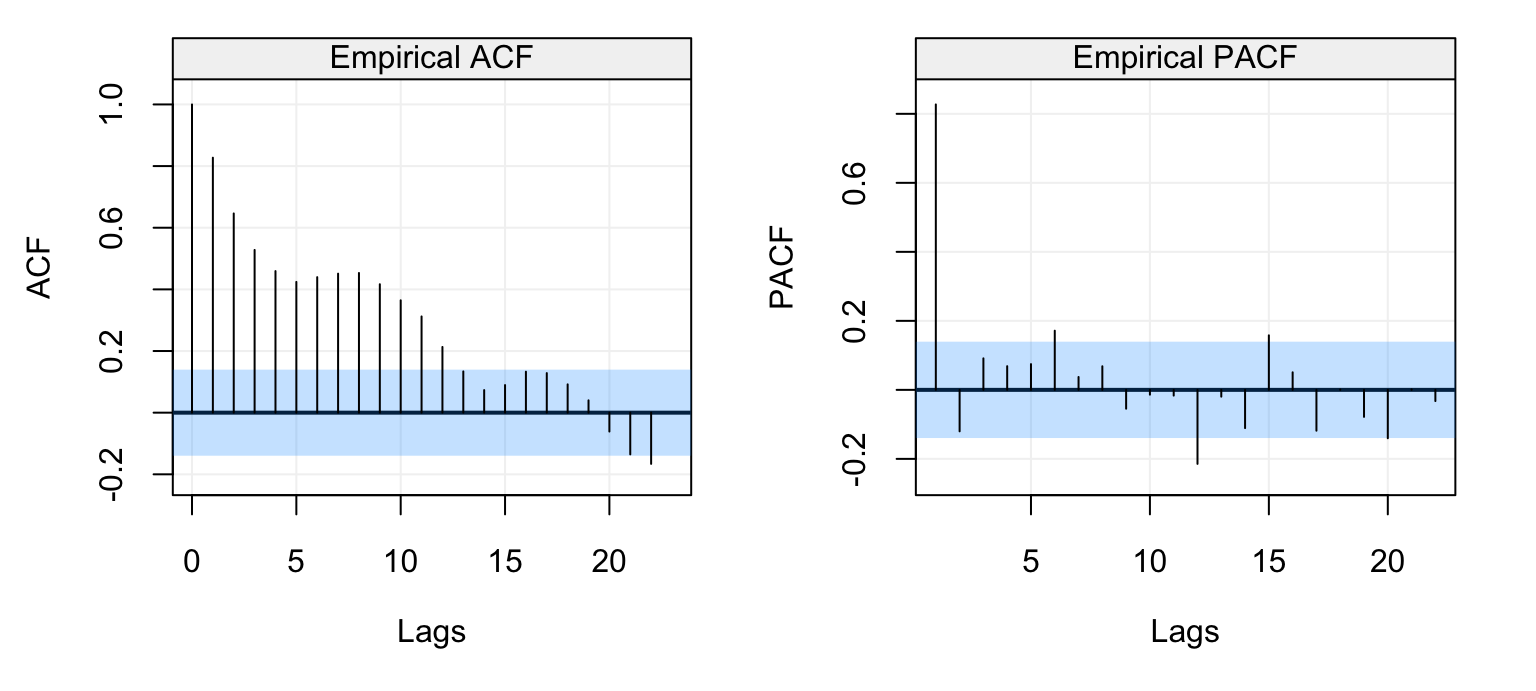

corr_analysis(lynx_gts)

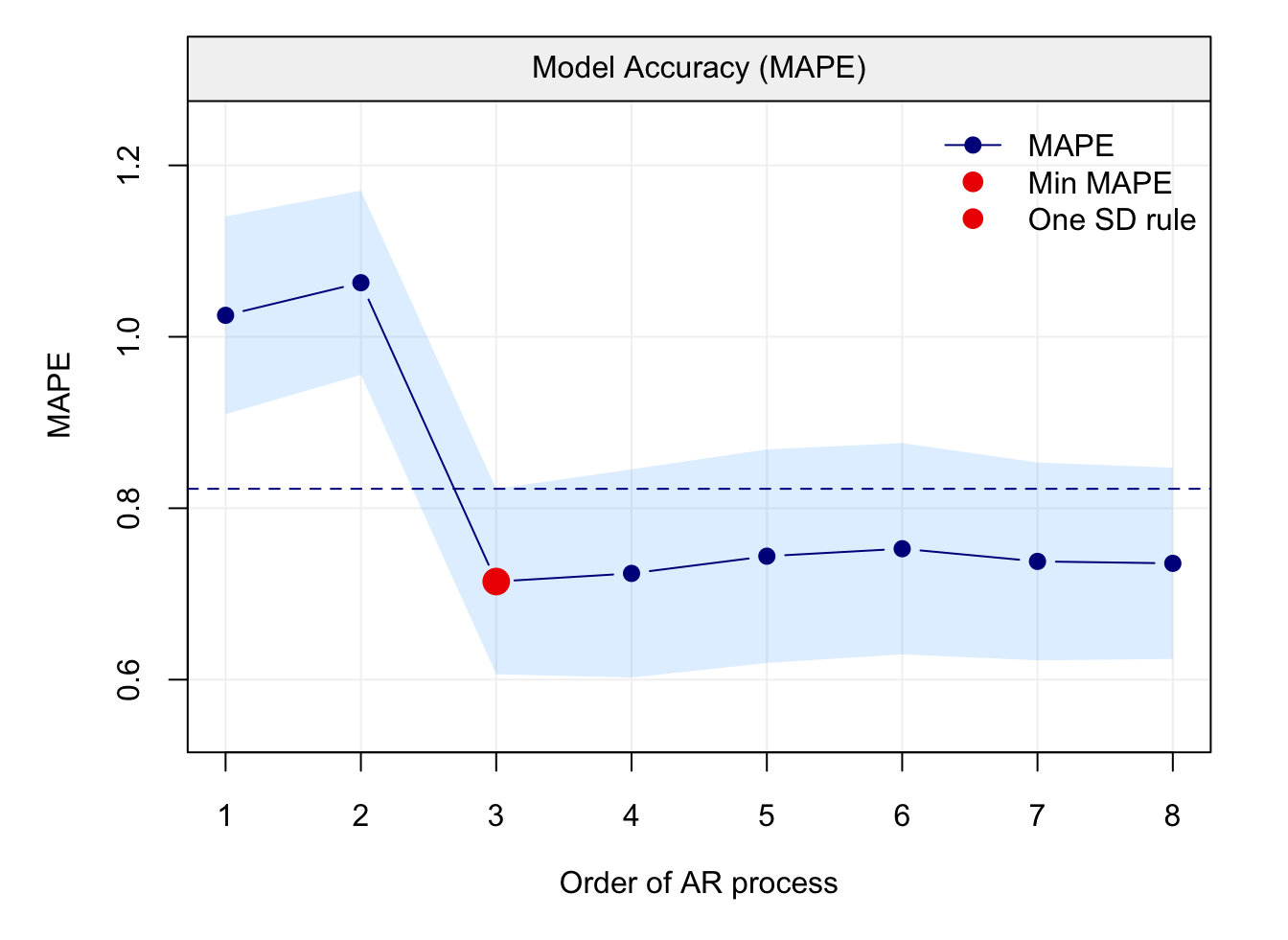

Figure 4.14: Empirical ACF (left) and PACF (right) of the lynx time series data.

The seasonal behaviour also appears in the ACF plot but we see that it decreases in a sinusoidal fashion as the lag increases hinting that an AR(p) model could be a potential candidate for the time series. Looking at the PACF plot on the right we can see that a few partial autocorrelations appear to be significant up to lag \(h=11\). Therefore an AR(11) model could be a possibly good candidate to explain (and predict) this time series.

4.3.2 Portmanteau Tests

In a similar manner to using the ACF and PACF confidence intervals to understand if the residuals can be considered as white noise, other statistical tests exist to determine if the autocorrelations can be considered as being significant or not. Indeed, the 95% confidence intervals can be extremely useful in detecting significant (partial) autocorrelations but they cannot be considered as an overall test for white noise since (aside from being asymptotic and therefore approximate) they do not consider the multiple testing hypothesis implied by them (i.e. if we’re testing all lags, the actual level of significance should be modified in order to bound type I errors). For this reason, Portmanteau tests have been proposed in order to deliver an overall test that jointly considers a set of lags and determines whether their autocorrelation is significantly different from zero (i.e. whether the residuals can be considered white noise or not).

One of the most popular Portmanteau tests is the Ljung-Box test whose statistic is defined as follows:

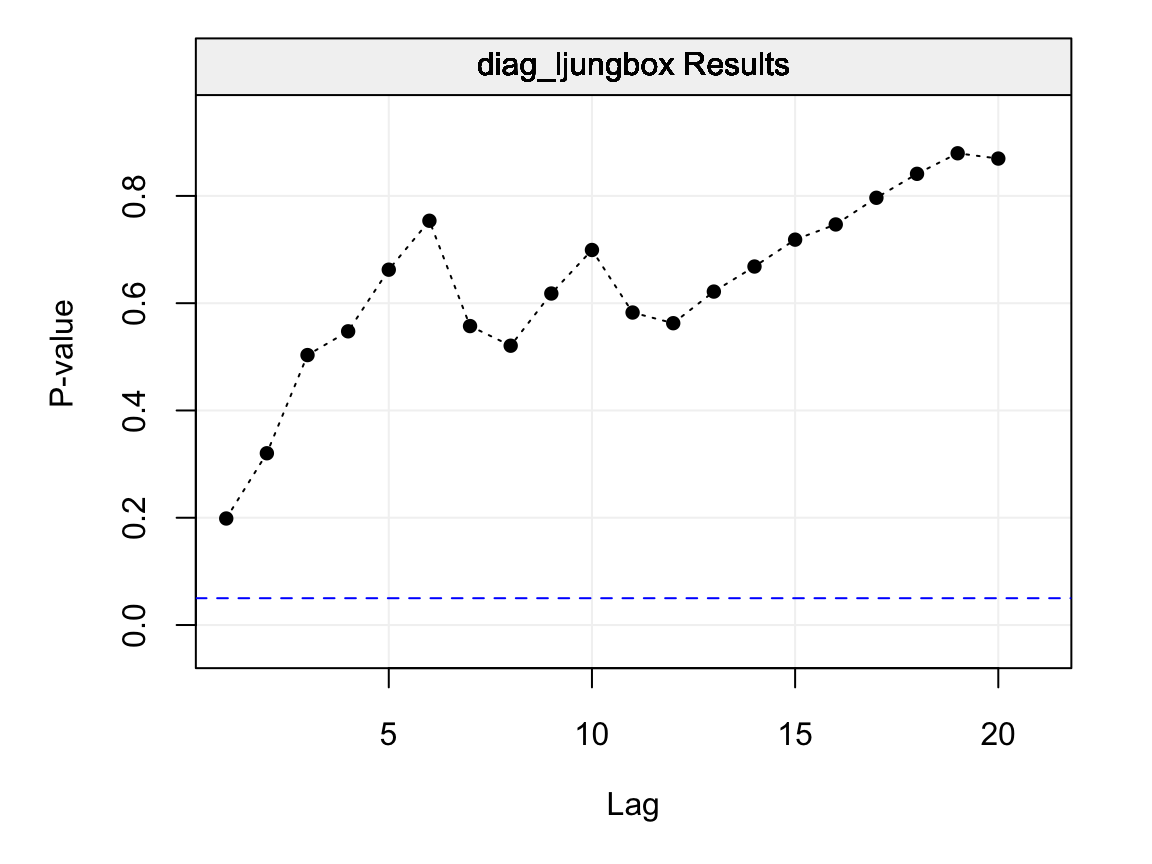

\[Q_h = T(T+2) \sum_{j=1}^{h} \frac{\hat{\rho}_j^2}{T - j},\] where \(h\) represents the maximum lag of the set of lags for which we wish to test for serial autocorrelation (i.e. from lag 1 to lag \(h\)). Under the null hypothesis \(Q_h \sim \chi_h^2\) since asymptotically \(\hat{\rho}_j \sim \mathcal{N}\left(0, \frac{1}{T}\right)\) under the null and therefore the statistic is proportional to the sum of squared standard normal variables. The last plot of Figure @ref(fig:diagnostic_plot) can therefore be better interpreted under this framework and is reproduced below.

lb_test = diag_ljungbox(as.numeric(residuals), order = 0, stop_lag = 20, stdres = FALSE, plot = TRUE)

Figure 4.15: Ljung-Box p-values for lags \(h = 1,...,20\) on the residuals of the fitted AR(1) model.

Each p-value represented in the plot above is therefore the p-value for the considered maximum lag. Therefore the first p-value (at lag 1) is the p-value for the null hypothesis that all lags up to lag 1 are equal to zero, the second is for the null hypothesis that all lags up to lag 2 are equal to zero and so on. Hence, each p-value represents a global test on the autocorrelations up to the considered maximum lag \(h\) and delivers additional information regarding the dependence structure within the residuals. In this case, as interpreted earlier, all p-values are larger than the \(\alpha = 0.05\) level and is an additional indication that the residuals follow a white noise process (and that the fitted model appears to well describe the observed time series).

There are other Portmanteau tests with different advantages (and disadvantages) over the Ljung-Box test but these are beyond the scope of this textbook.

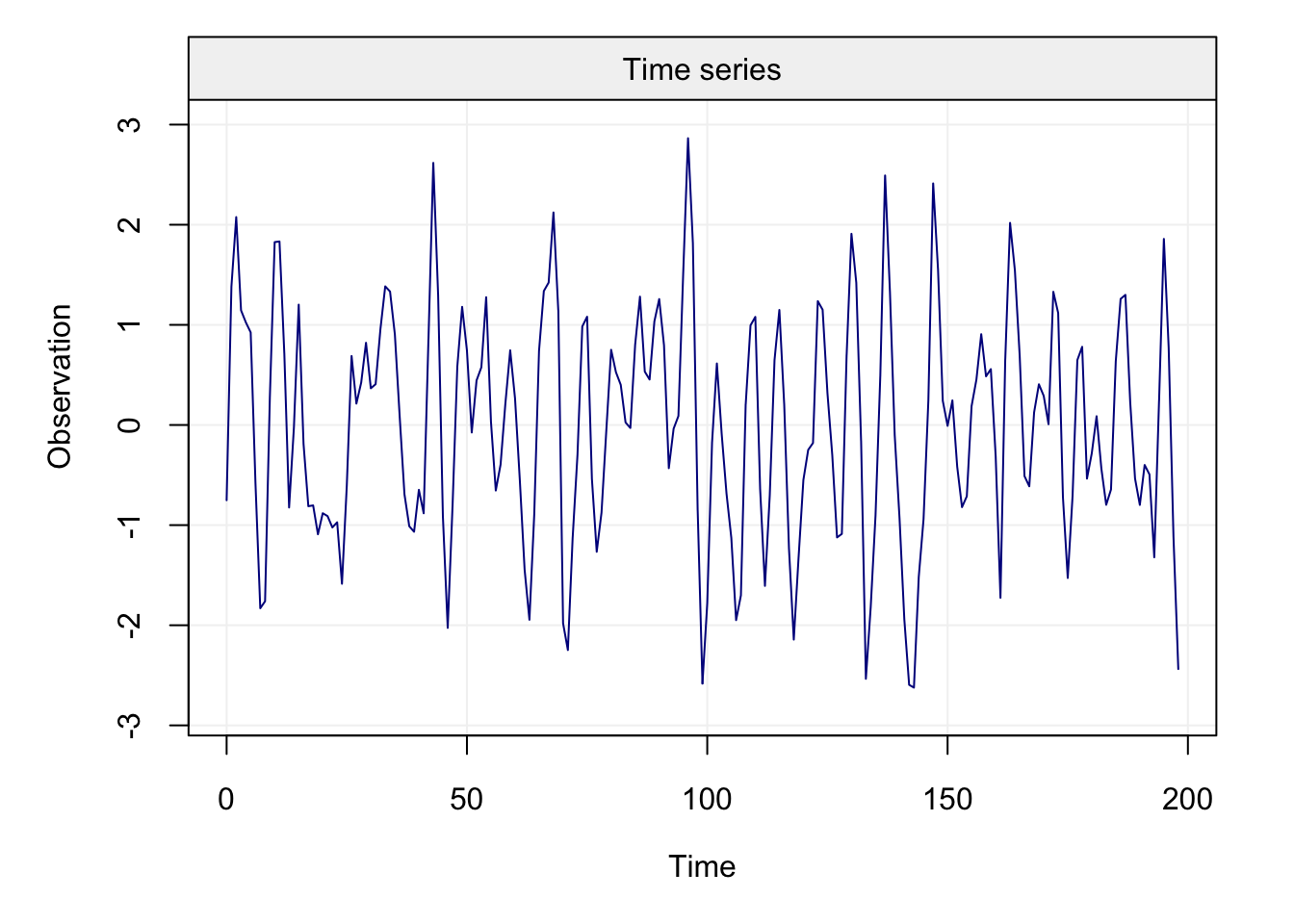

4.4 Inference for AR(p) Models

For all the above methods, it would be necessary to understand how “precise” their estimates are. To do so we would need to obtain confidence intervals for these estimates and this can be done mainly in two manners:

- using the asymptotic distribution of the parameter estimates;

- using parametric bootstrap.

The first approach consists in using the asymptotic distribution of the estimators presented earlier to deliver approximations of the confidence intervals which get better as the length of the observed time series increases. Hence, if for example we wanted to find a 95% confidence interval for the parameter \(\phi\), we would use the quantiles of the normal distribution (given that all methods presented earlier present this asymptotic distribution). However, this approach can present some drawbacks, one of which its behaviour when the parameters are close to the boundaries of the parameter space. Suppose we consider a realization of length \(T = 100\) of the following AR(1) model:

\[X_t = 0.96 X_{t-1} + W_t, \;\;\;\; W_t \sim \mathcal{N}(0,1),\]

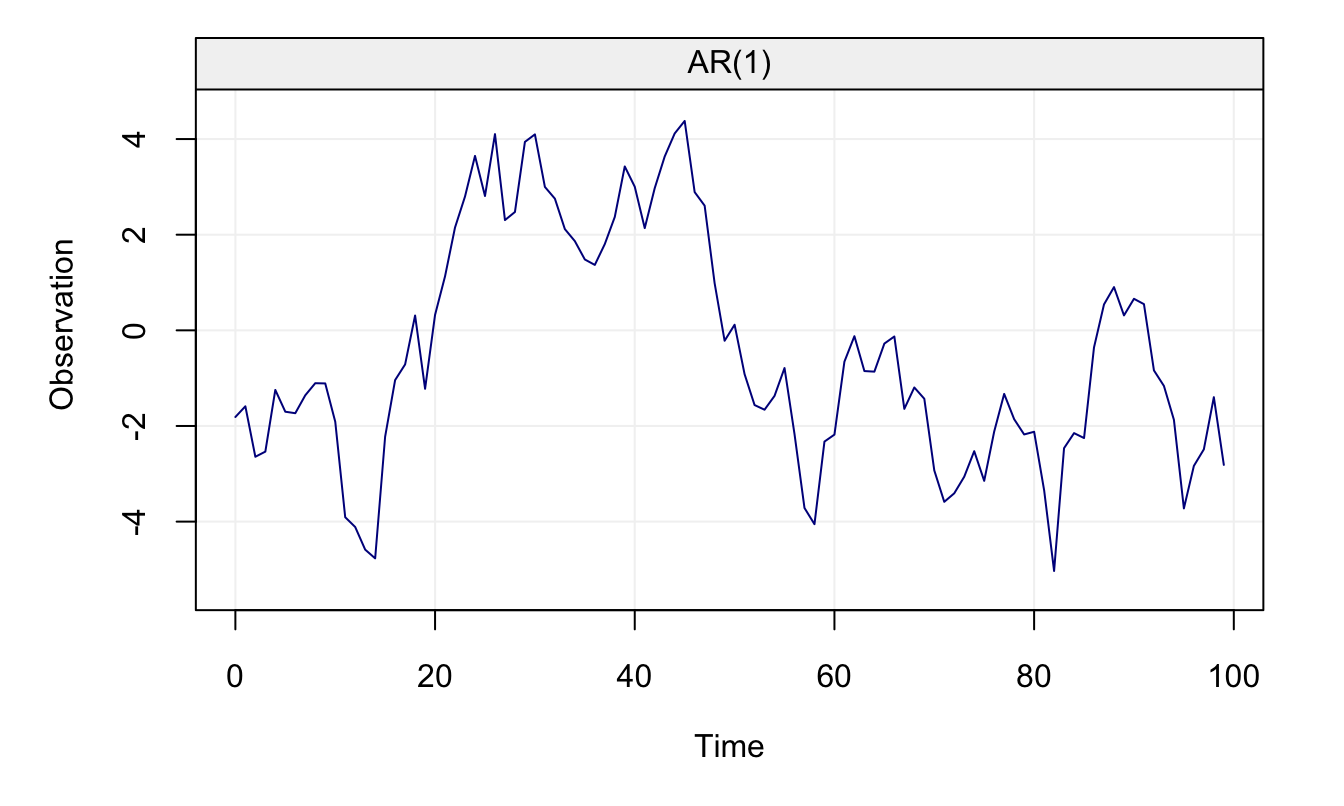

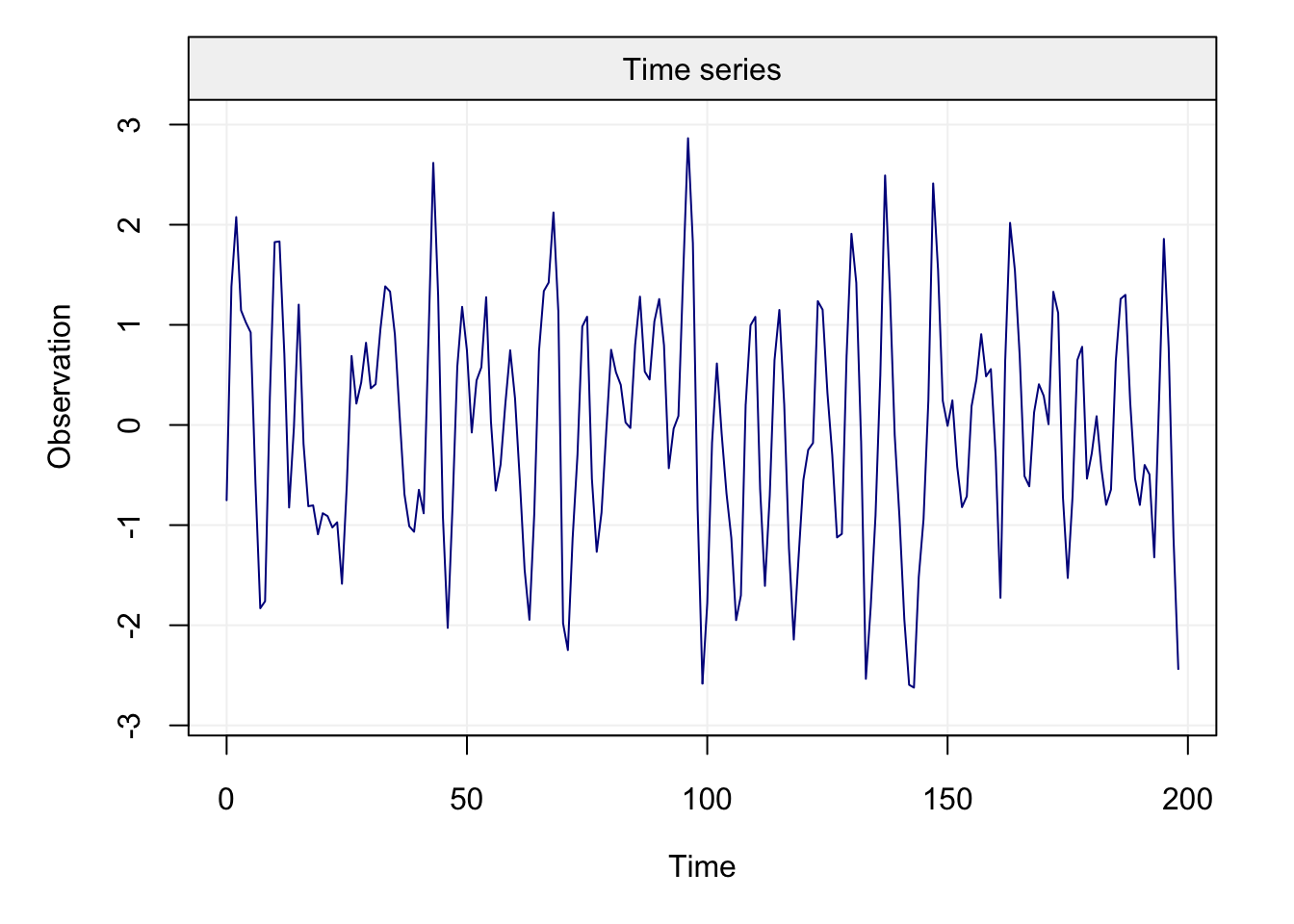

which is represented in Figure 4.16

set.seed(55)

x = gen_gts(n = 100, AR1(0.96, 1))

plot(x)

Figure 4.16: AR(1) with \(\phi\) close to parameter bound

It can be seen that the parameter \(\phi = 0.96\) respects the condition for stationarity (i.e.\(\left| \phi \right| < 1\)) but is very close to its boundary. Using the MLE, we first estimate the parameters and then compute confidence intervals for \(\phi\) using the asymptotic normal distribution.

# Compute the parameter estimates using MLE

fit.ML = estimate(AR(1), x, demean = FALSE)

c("phi" = fit.ML$mod$coef[1], "sigma2" = sqrt(fit.ML$mod$sigma2))## phi.ar1 sigma2

## 0.9740770 0.8538777# Construct asymptotic confidence interval for phi

fit.ML$mod$coef[1] + c(-1,1)*1.96*as.numeric(sqrt(fit.ML$mod$var.coef))## [1] 0.9393414 1.0088127## Fitted model: AR(1)

##

## Estimated parameters:

## Model Information:

## Estimates

## AR 0.9864031

## SIGMA2 0.7644610

##

## * The initial values of the parameters used in the minimization of the GMWM objective function

## were generated by the program underneath seed: 1337.

##

## 95 % confidence intervals:

## Estimates CI Low CI High SE

## AR 0.9864031 0.8688122 1.054986 0.05659269

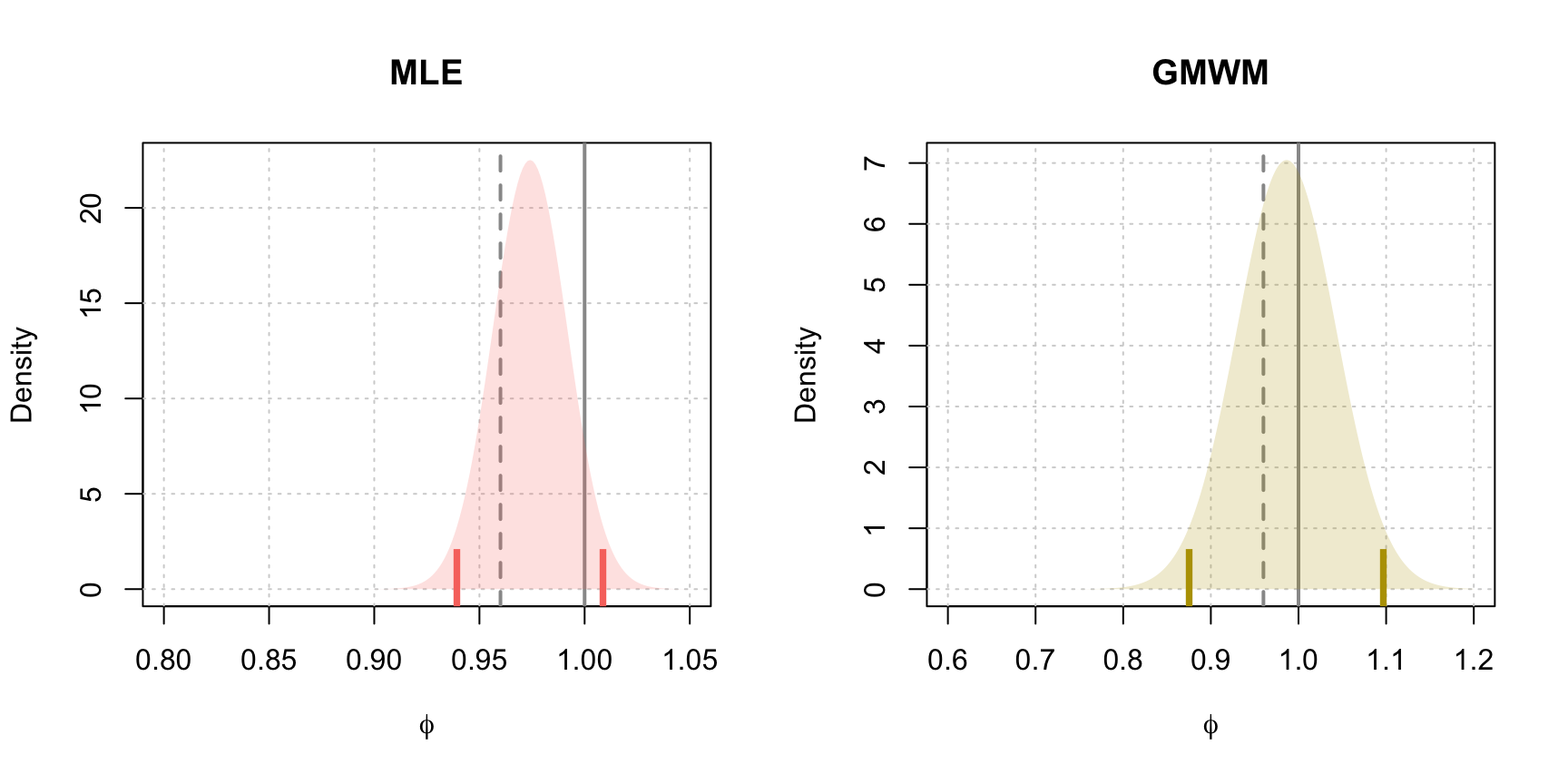

## SIGMA2 0.7644610 0.5565939 0.926104 0.11232308From the estimation summary, we can notice that the MLE confidence intervals contain values that would make the AR(1) non-stationary (i.e. values of \(\phi\) larger than 1). However, these confidence intervals are based on the (asymptotic) distributions of \(\hat{\phi}\) which are shown in Figure 4.17 along with those based on the GMWM estimator.

Figure 4.17: Estimated asymptotic distribution of \(\hat{\phi}\) for MLE and GMWM parameter estimates. The dashed vertical line represents the true value of \(\phi\), the solid line denotes the upper bound of the parameter space for \(\phi\) and the vertical ticks represent the limits of the 95% confidence intervals for both methods.

Therefore, if we estimate a stationary AR(1) model, it would be convenient to have more “realistic” confidence intervals that give limits for a stationary AR(1) model. A viable solution for this purpose is to use parametric bootstrap. Indeed, parametric bootstrap takes the estimated parameter values and uses them in order to simulate from the assumed model (an AR(1) process in this case). For each simulation, the parameters are estimated and stored in order to obtain an empirical (finite sample) distribution of the estimators. Based on this distribution it is consequently possible to find the \(\alpha/2\) and \(1-\alpha/2\) empirical quantiles thereby delivering confidence intervals that should not suffer from boundary problems (since the estimation procedure looks for solutions within the admissable regions). The code below gives an example of how this confidence interval is built based on the same estimation procedure but using parametric bootstrap (using \(B = 10,000\) bootstrap replicates).

# Number of Iterations

B = 10000

# Set up storage for results

est.phi.gmwm = rep(NA,B)

est.phi.ML = rep(NA,B)

# Model generation statements

model.gmwm = AR(phi = c(fit.gmwm$mod$estimate[1]), sigma2 = c(fit.gmwm$mod$estimate[2]))

model.mle = AR(phi = c(fit.ML$mod$coef), sigma2 = c(fit.ML$mod$sigma2))

# Begin bootstrap

for(i in seq_len(B)){

# Set seed for reproducibility

set.seed(B + i)

# Generate process under MLE parameter estimate

x.star = gen_gts(100, model.mle)

# Attempt to estimate phi by employing a try

est.phi.ML[i] = tryCatch(estimate(AR(1), x.star, demean = FALSE)$mod$coef,

error = function(e) NA)

# Generate process under GMWM parameter estimate

x.star = gen_gts(100, model.gmwm)

est.phi.gmwm[i] = estimate(AR(1), x.star, method = "gmwm")$mod$estimate[1]

}

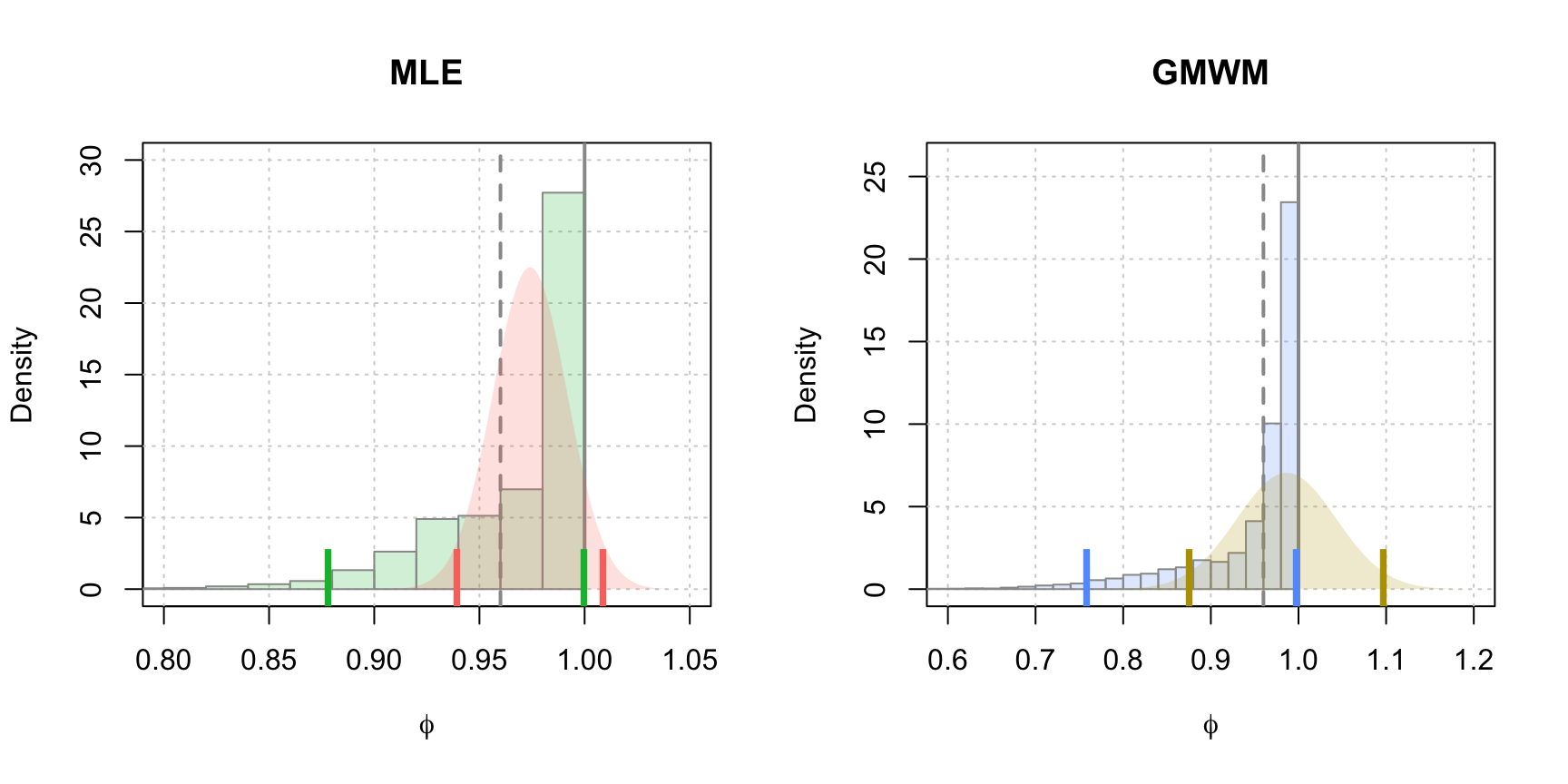

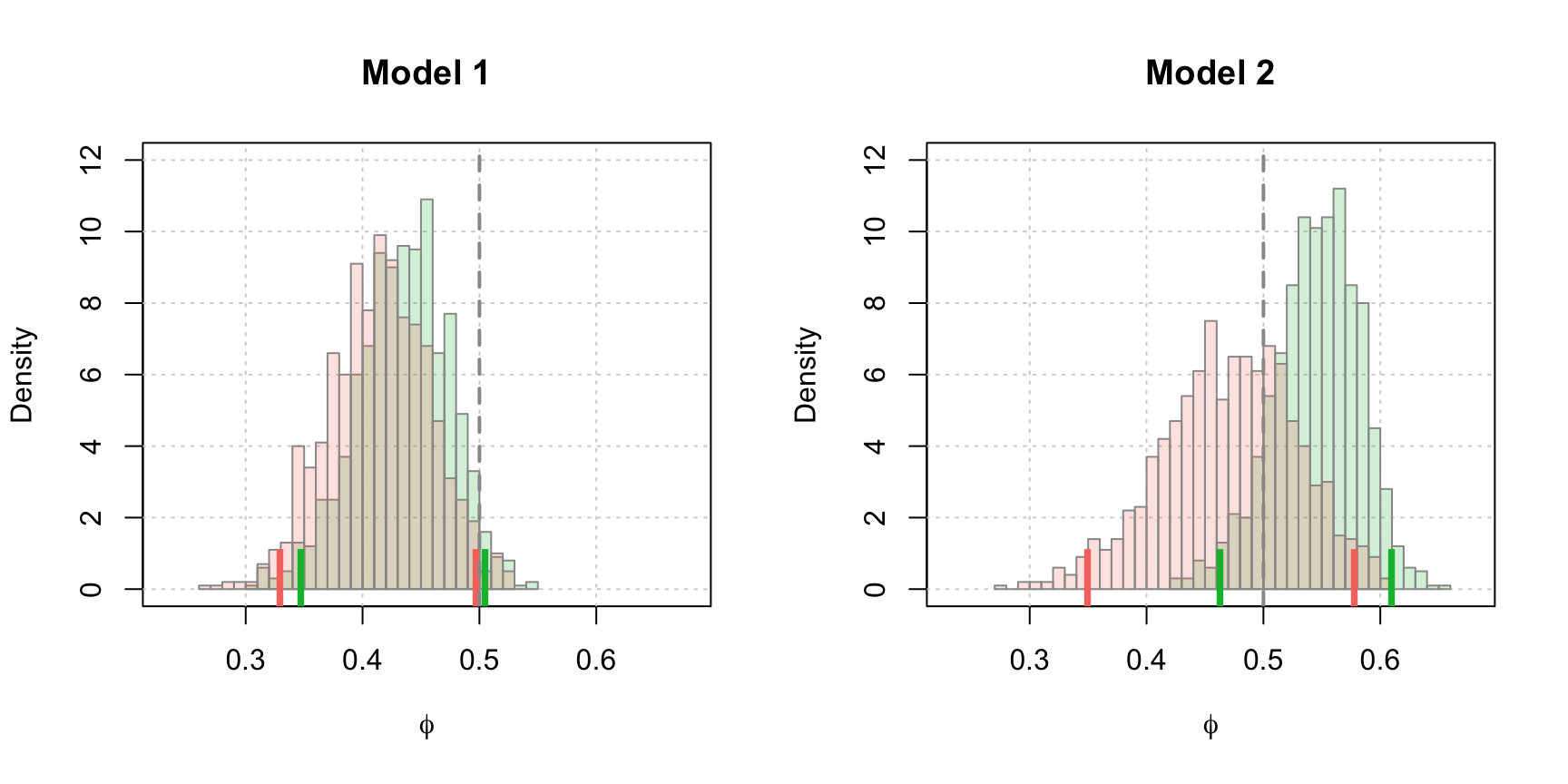

Figure 4.18: Parametric bootstrap distributions of \(\hat{\phi}\) for the MLE and GMWM parameter estimates. The histograms represent the empirical distributions resulting from the parametric bootstrap while the continuous densities represent the estimated asymptotic distributions. The vertical line represents the true value of \(\phi\), the dark solid lines represent the upper bound of the parameter space for \(\phi\) and the vertical ticks represent the limits of the 95% confidence intervals for the asymptotic distributions (red and gold) and the empirical distributions (green and blue).

In Figure 4.18, we compare the estimated densities for \(\hat{\phi}\) using asymptotic results and bootstrap techniques for the MLE and the GMWM estimators. It can be observed that the issue that arose previously with “non-stationary” confidence intervals does not occur with the parametric bootstrap approach since the interval regions of the latter lie entirely within the boundaries of the admissable parameter space.

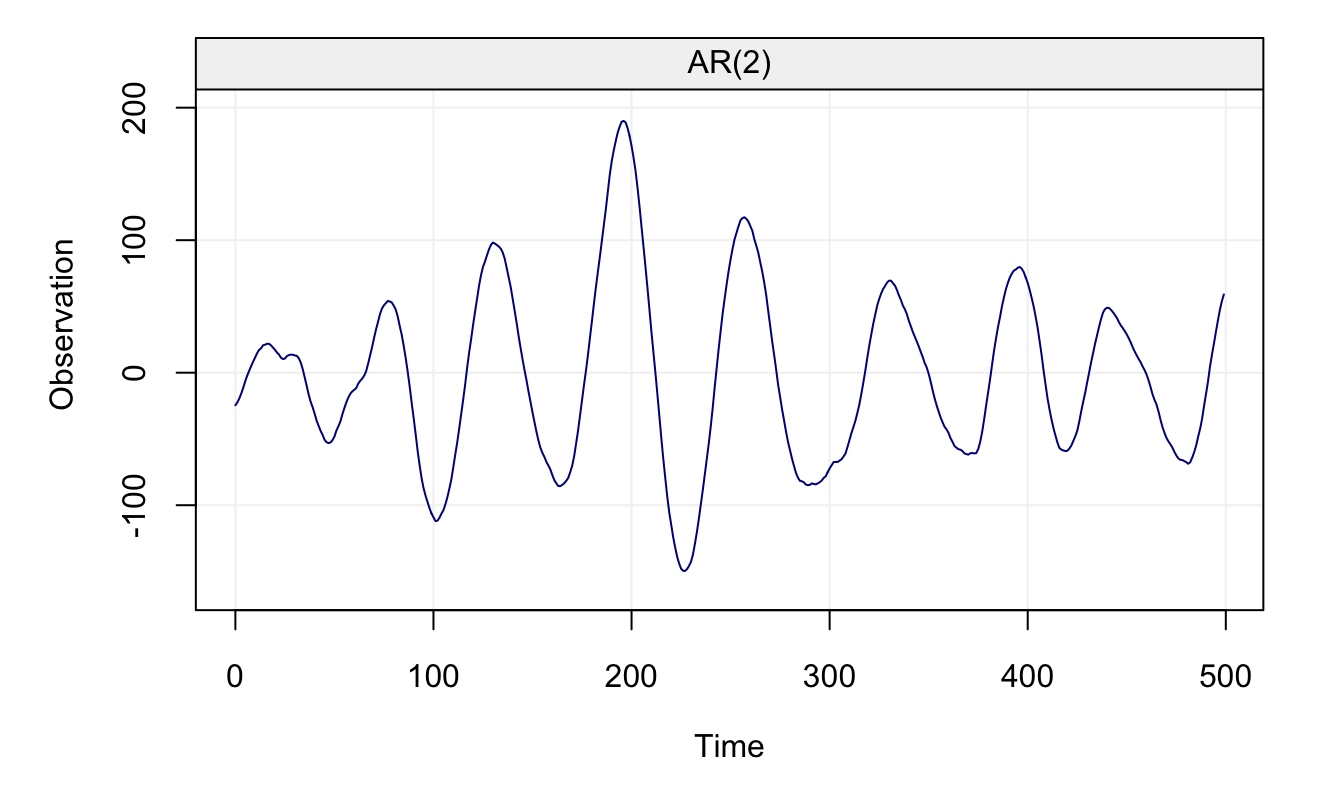

To further emphasize the advantages of parametric bootstrap, let us consider one additonal example of an AR(2) process. For this example, discussion will focus solely on the behaviour of the MLE. Having said this, the considered AR(2) process is defined as follows:

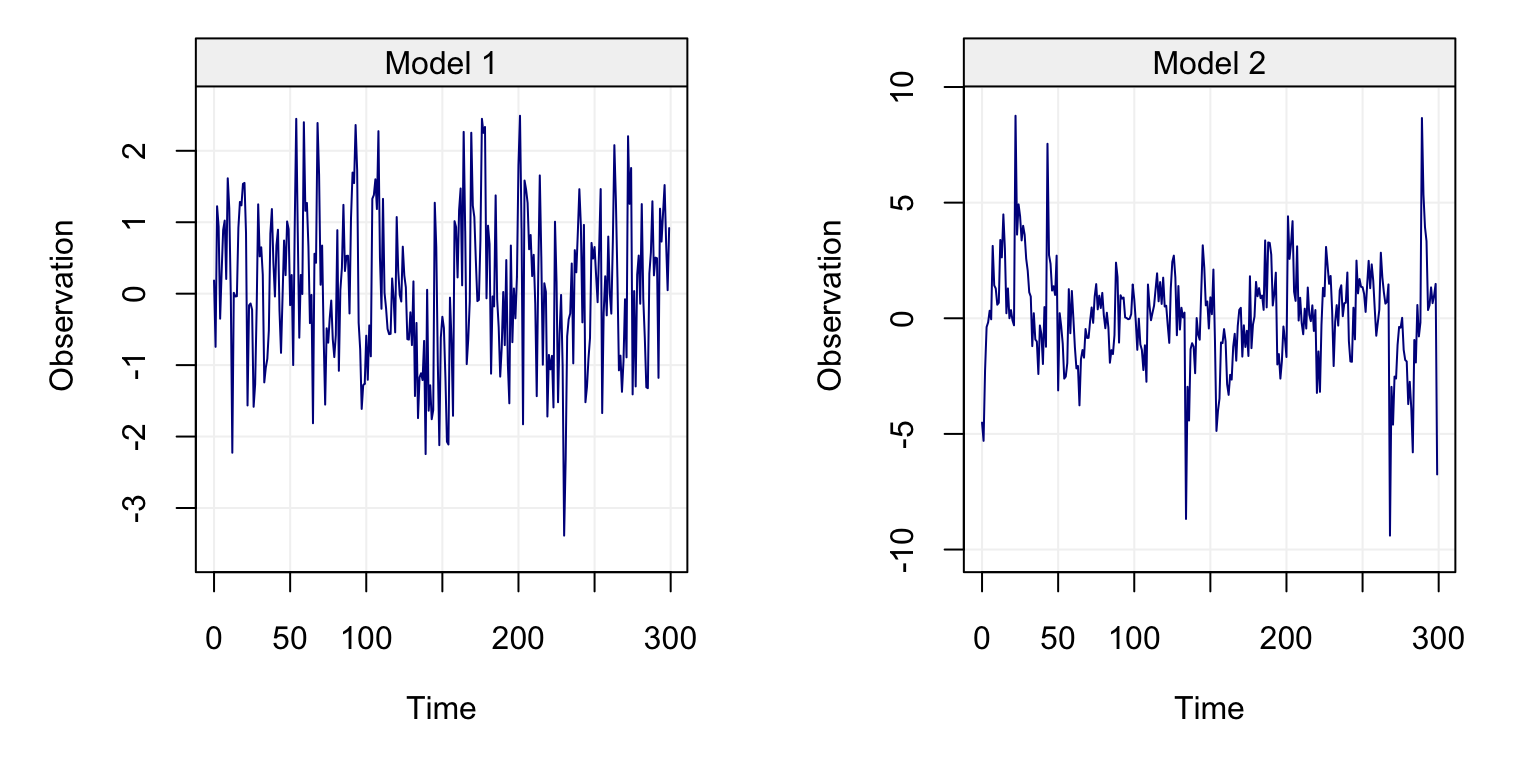

\[\begin{equation} {X_t} = {1.98}{X_{t - 1}} - {0.99}{X_{t - 2}} + {W_t} \tag{4.2} \end{equation}\]where \(W_t \sim \mathcal{N}(0, 1)\). The generated process is displayed in Figure 4.19.

set.seed(432)

Xt = gen_gts(500, AR(phi = c(1.98, -0.99), sigma2 = 1))

plot(Xt)

Figure 4.19: Generated AR(2) Process

fit = estimate(AR(2), Xt, demean = FALSE)

fit## Fitted model: AR(2)

##

## Estimated parameters:

##

## Call:

## arima(x = as.numeric(Xt), order = c(p, intergrated, q), seasonal = list(order = c(P,

## seasonal_intergrated, Q), period = s), include.mean = demean, method = meth)

##

## Coefficients:

## ar1 ar2

## 1.9787 -0.9892

## s.e. 0.0053 0.0052

##

## sigma^2 estimated as 0.8419: log likelihood = -672.58, aic = 1351.15Having estimated the model’s coefficients, we can again perform the parametric bootstrap. It must be noticed that in these simulations there can be various cases where a method may fail to estimate due to numerical issues (e.g. numerically singular covariance matrices, etc.) and therefore we add a check for each estimation to ensure that we preserve those estimations that have succeeded.

B = 500

est.phi.ML = matrix(NA, B, 2)

model = AR(phi = fit$mod$coef, sigma2 = fit$mod$sigma2)

for(i in seq_len(B)) {

set.seed(B + i) # Set Seed for reproducibilty

x.star = gen_gts(500, model) # Simulate the process

# Obtain parameter estimate with protection

# that defaults to NA if unable to estimate

est.phi.ML[i,] = tryCatch(estimate(AR(2), x.star, demean = FALSE)$mod$coef,

error = function(e) NA)

}Once this is done, we can compare the bivariate distributions (asymptotic and bootsrapped) of the two estimated parameters \(\hat{\phi}_1\) and \(\hat{\phi}_2\).

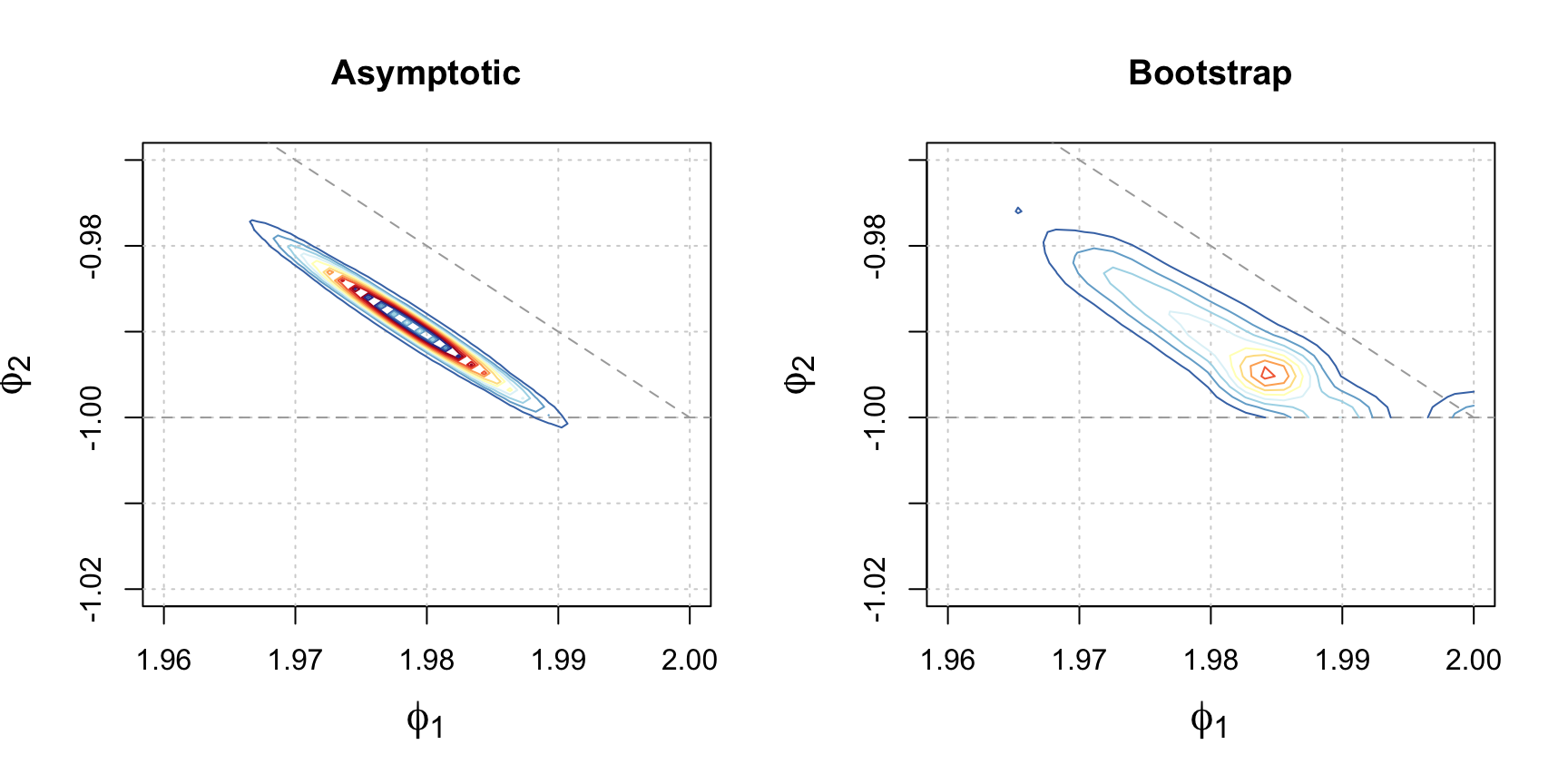

Figure 4.20: Estimated distributions of \(\hat{\phi_1}\) and \(\hat{\phi_2}\) based on the MLE using asymptotic and parametric bootstrap techniques. The colored contours represent the density of the distributions and the dark grey lines represent the boundary constraints of \(\left|\phi_2\right|<1\) and \(\phi_2 = 1 - \phi_1\).

As for any other estimation and inference procedure, these confidence intervals rely on the assumption that the chosen model (AR(2) in this case) is the true model underlying the observed time series. However, if this assumption were not correct, then the derived confidence intervals would not be correct and this could possibly lead to bad conclusions. For this reason, one could decide to use a non-parametric approach to computing these confidence intervals, such as the traditional bootstrap that consists in a simple random sampling with replacement from the original data. However, this approach would not be appropriate in the presence of dependence between observations since this procedure would break the dependence structure and therefore deliver unreliable conclusions. Therefore, to preserve the dependence structure of the original data one option would be to use block bootstrapping, which is a particular form of non-parametric bootstrap. There are many different kinds of block-bootstrap procedures for time series which are all related to the Moving Block Bootstrap (MBB) which is defined as follows.

Definition 4.4 Suppose that \(\left(X_t\right)\) is a weakly stationary time series with \(T\) observations. The procedure is as follows:

- Divide the time series into overlapping blocks \(\left\{S_1, \ldots, S_M\right\}\) of length \(\ell\), \(1 \le \ell < N\), resulting in \(M = N - \ell + 1\) blocks structured as:

\[\begin{aligned} {S_1}& = & ({X_1}, &{X_2}, \cdots , {X_\ell}) & && \\ {S_2}& = & &( {X_2}, {X_3}, \cdots , {X_{\ell + 1}}) & && \\ & \cdots & & {} & \cdots && \\ {S_M} & = & & & {} &&( {X_{N-\ell+1}}, {X_{N-\ell+2}}, \cdots , {X_{N}}) \end{aligned}\]

- Draw \(M = \left\lfloor {\frac{N}{\ell}} \right\rfloor\) blocks with replacement from these \(\left\{S_1, \ldots, S_M\right\}\) blocks and place them in order to form \((X_t^*)\).

- Compute the statistic of interest on the simulated sample \((X_t^*)\).

- Repeat Steps 2 and 3 \(B\) times where \(B\) is sufficiently “large” (typically \(100 \leq B \leq 10,000\)).

- Compute the empirical mean and variance on the statistic of interest based on the \(B\) independent replications.

The approach taken by MBB ensures that within each block the dependency between observations is preserved. However, one particular issue that now arises is that some inaccuracy is introduced as a result of successive blocks potentially being independent from each other. In reality, this is one of the trade-offs of the MBB approach that can be mitigated by selecting an optimal \(\ell\) (to improve the accuracy of the procedure one should choose \(\ell = T^{1/3}\) as \(T \to \infty\) as being the optimal choice). An earlier variant of MBB is called the Nonoverlapping Block Bootstrap (NBB) which doesn’t allow the blocks to share common data points and is presented below.

Definition 4.5 Suppose that \(\left(X_t\right)\) is weakly stationary time series with \(T\) observations.

- Divide time series into nonoverlapping blocks \(\left\{S_1, \ldots, S_M\right\}\) of length \(\ell\), \(1 \le \ell < N\), resulting in \(M = \left\lfloor {\frac{N}{\ell}} \right\rfloor\) blocks structured as:

\[\begin{aligned} {S_1}& = ({X_1}, {X_2}, \cdots , {X_\ell})& & && \\ {S_2}& = &( {X_{\ell+1}}, {X_{\ell+2}}, \cdots , {X_{2\ell}}) & && \\ & \cdots & {} & \cdots && \\ {S_K} & = & & {} &&( {X_{\left({N-\ell+1}\right)}}, {X_{N-\ell+2}}, \cdots , {X_{N}}) \end{aligned}\]

- Draw \(M\) blocks with replacement from these \(\left\{S_1, \ldots, S_M\right\}\) blocks and place them in order to form \((X_t^*)\).

- Compute the statistic of interest on the simulated sample \((X_t^*)\).

- Repeat Steps 2 and 3 \(B\) times where \(B\) is sufficiently “large” (typically \(100 \leq B \leq 10,000\)).

- Compute the empirical mean and variance on the statistic of interest based on the \(B\) independent replications.

Alternatively, depending on the case one can also use modifications of the MBB that seeks to change how the beginning and end of the time series is weighted such as a Circular Block-Bootstrap (CBB) or a Stationary Bootstrap (SB). The code below implements the MMB to obtain a bootrstrap distribution for the estimated parameters of an AR(1) model.

ar1_blockboot = function(Xt, block_len = 10, B = 500) {

n = length(Xt) # Length of Time Series

res = rep(NA, B) # Bootstrapped Statistics

m = floor(n/block_len) # Amount of Blocks

for (i in seq_len(B)) { # Begin MMB

set.seed(i + 1199) # Set seed for reproducibility

x_star = rep(NA, n) # Setup storage for new TS

for (j in seq_len(m)) { # Simulate new time series

index = sample(m, 1) # Randomize block starting points

# Place block into time series

x_star[(block_len*(j - 1) + 1):(block_len*j)] =

Xt[(block_len*(index - 1) + 1):(block_len*index)]

}

# Calculate parameters with protection

res[i] = tryCatch(estimate(AR(1), x_star, demean = FALSE)$mod$coef,

error = function(e) NA)

}

na.omit(res) # Deliver results

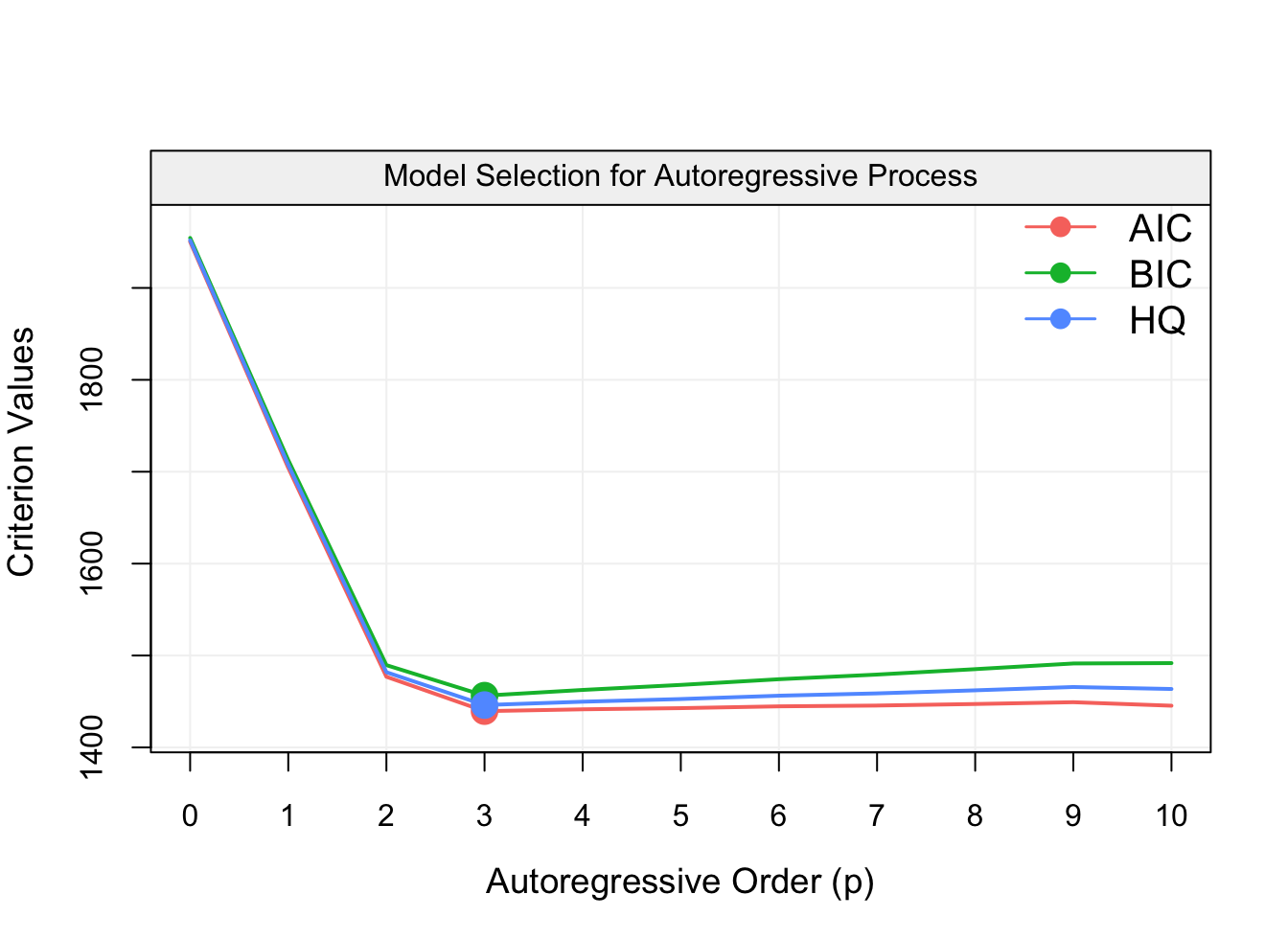

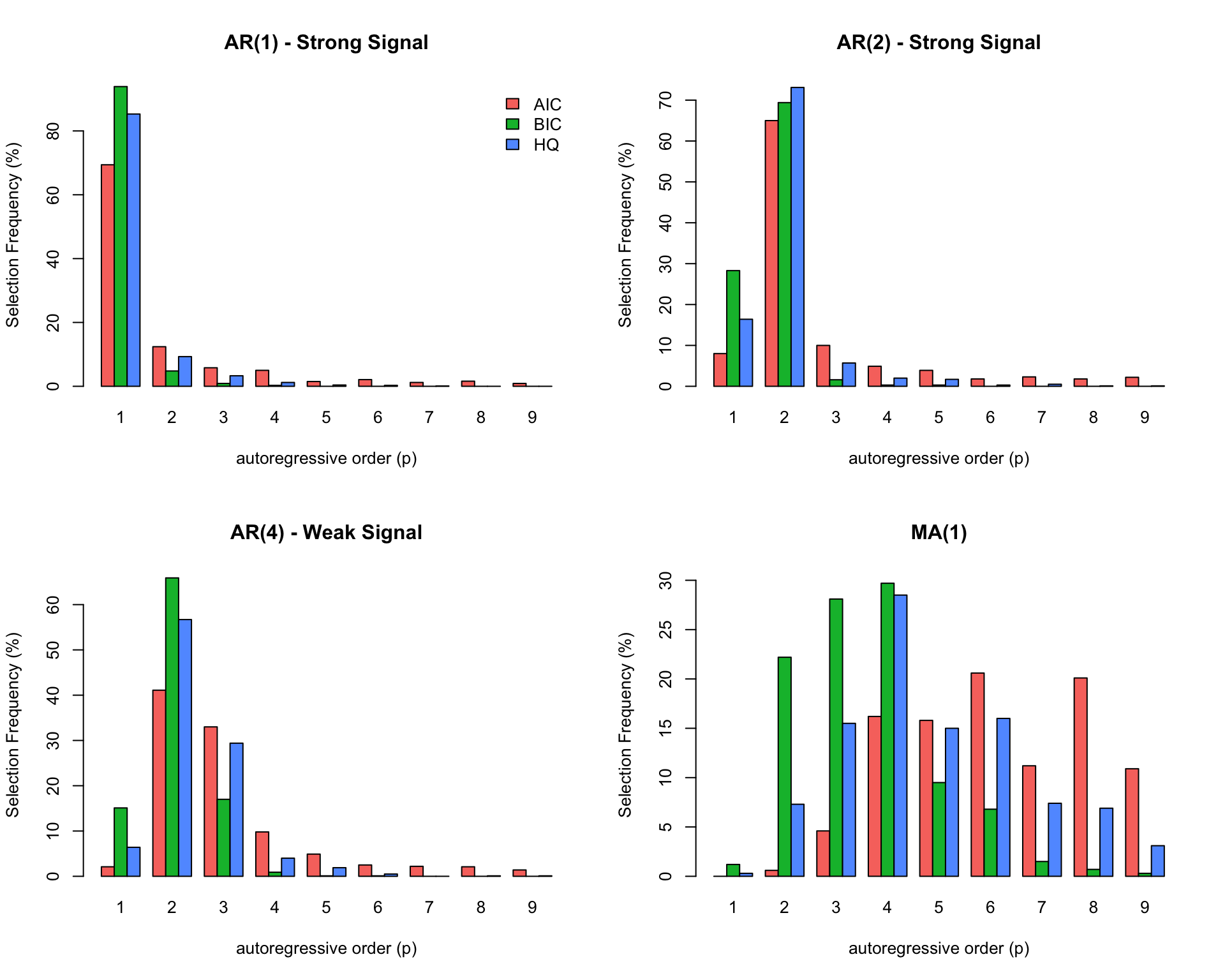

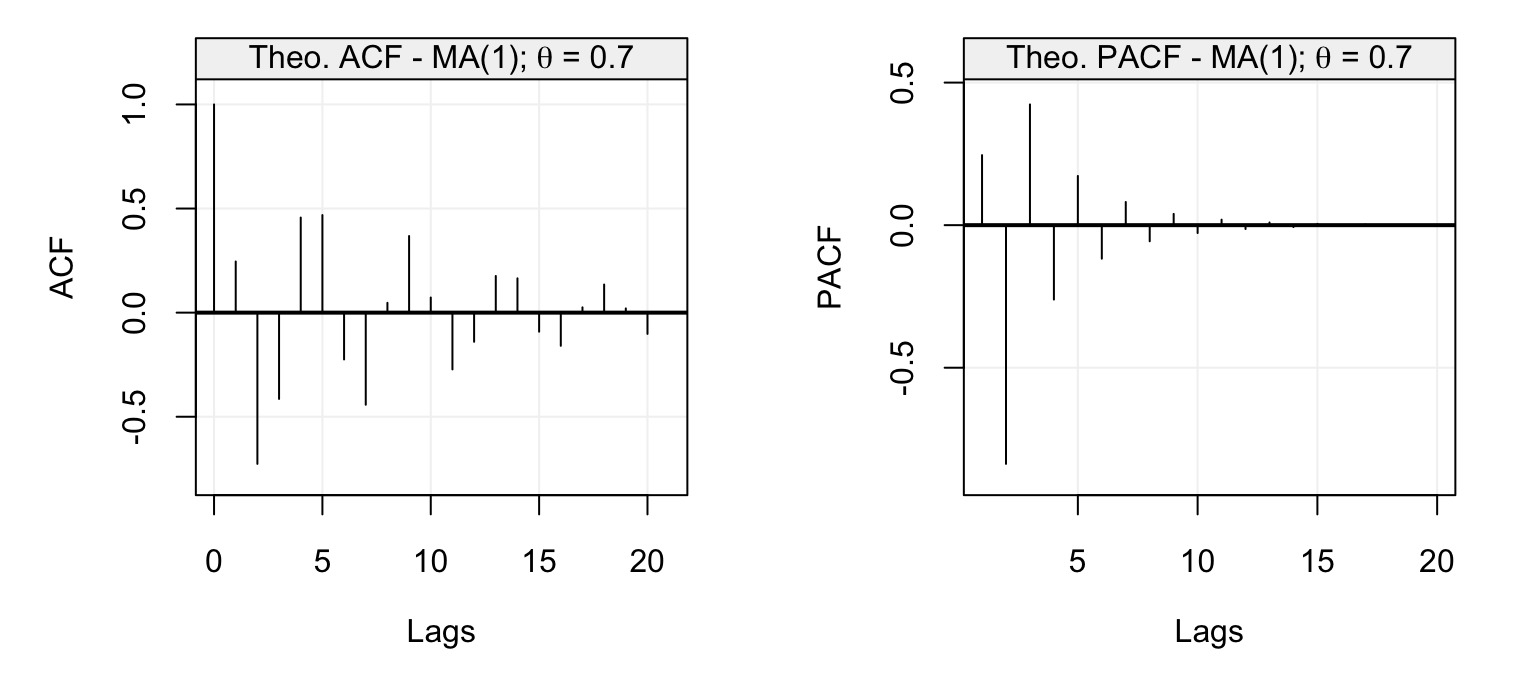

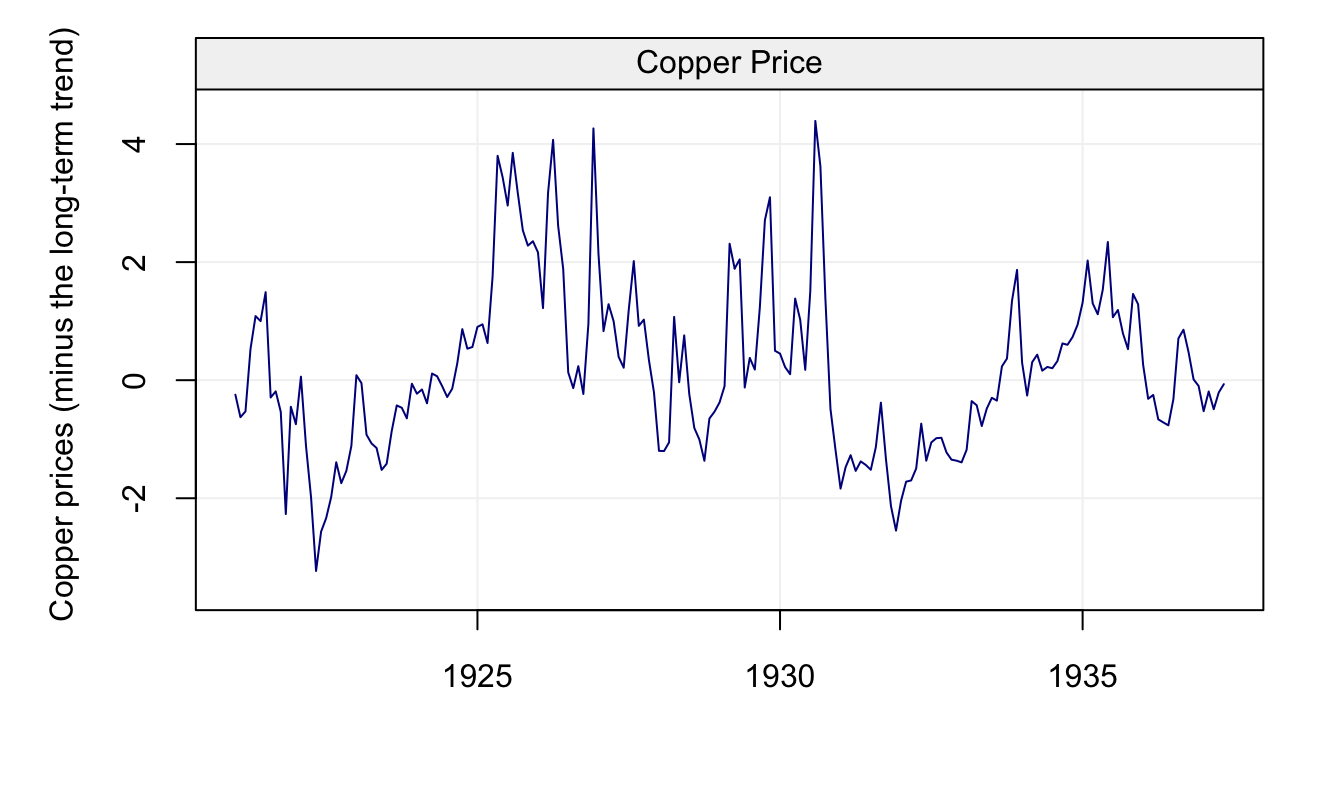

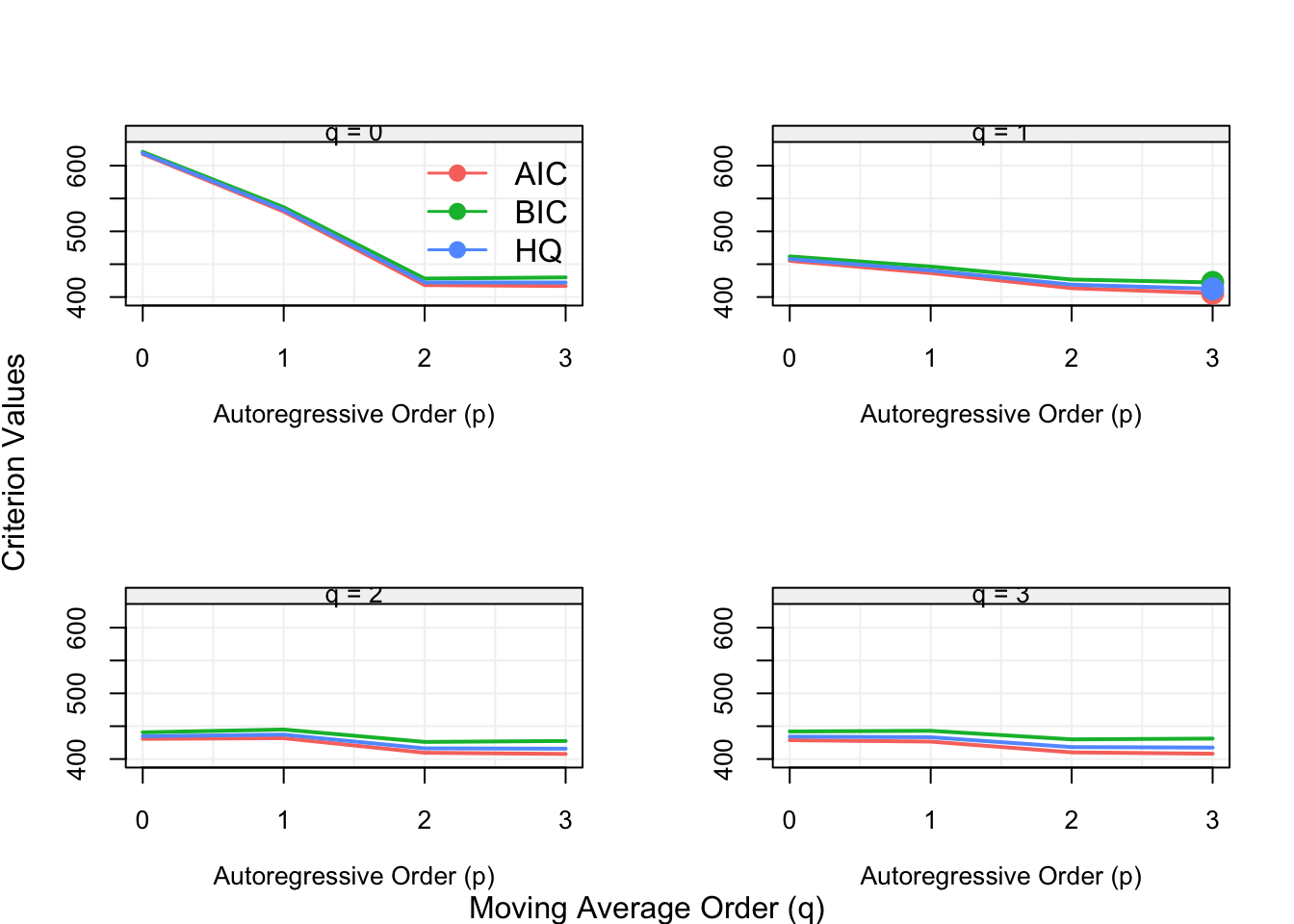

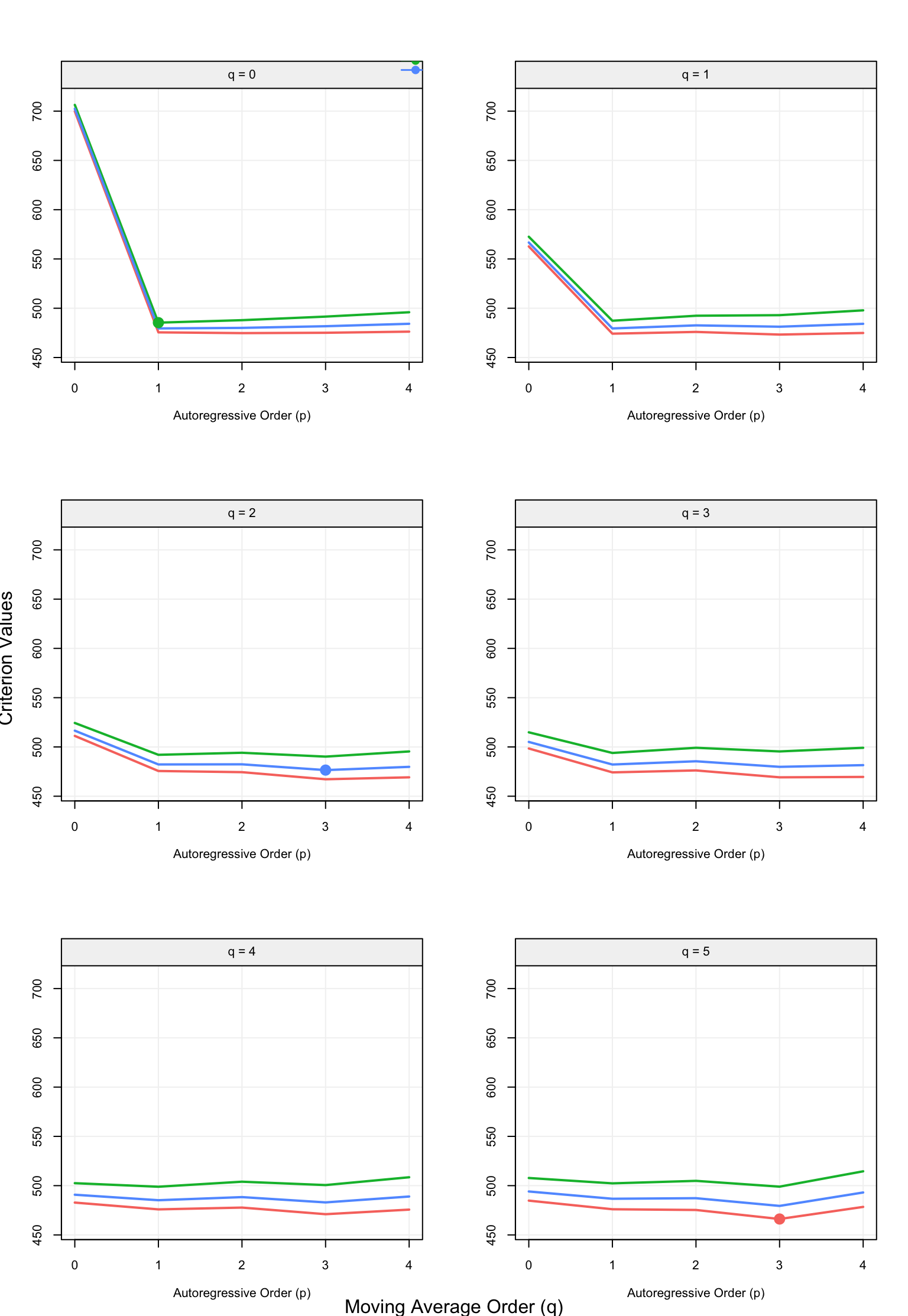

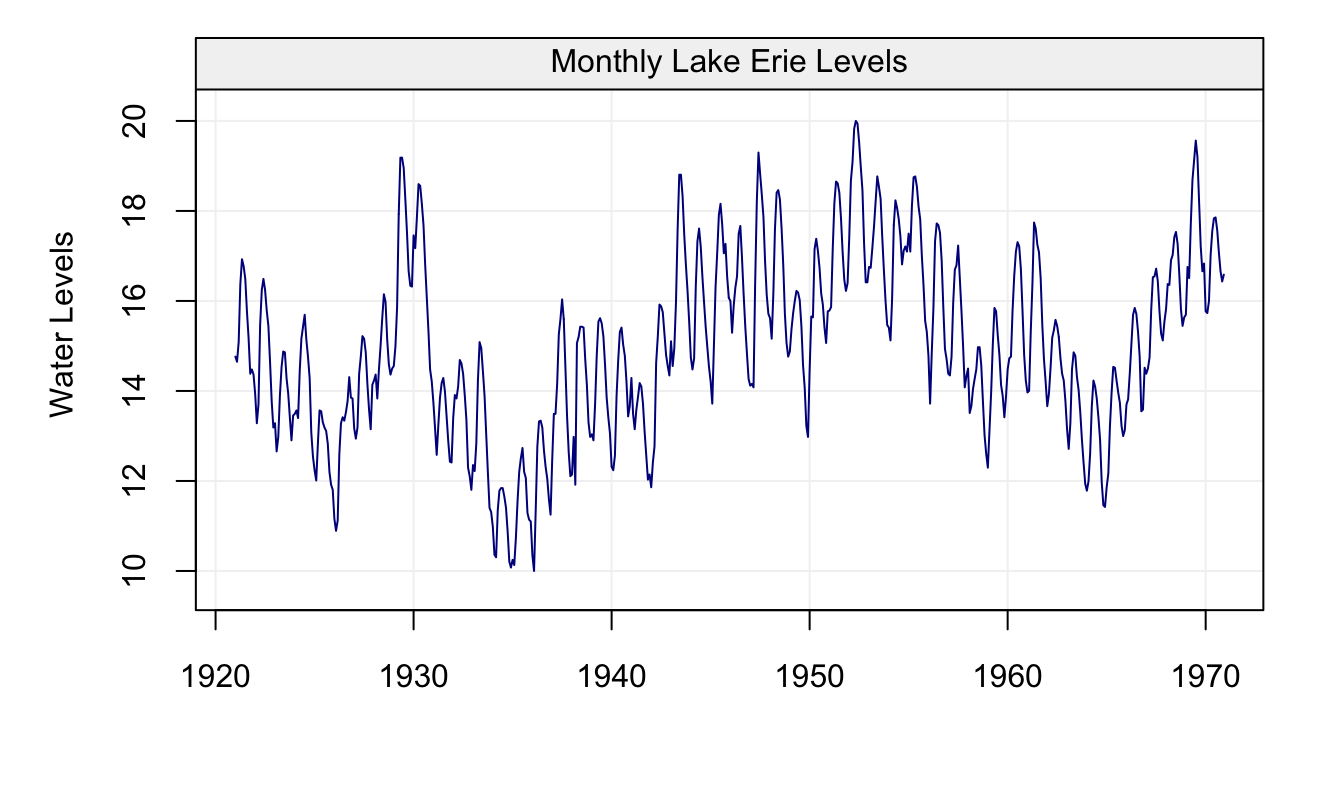

}Having defined the function above, let us consider a scenario where the model’s assumptions are not respected (i.e. wrong model and/or non-Gaussian time series). Consider an AR(1) and an AR(2) process with the same coefficients for the first lagged variable (i.e. \(\phi = \phi_1 = 0.5\)) but with different innovation noise generation procedures: