Simulate an lts object based on a supplied time series model.

gen_lts(n, model, start = 0, end = NULL, freq = 1, unit_ts = NULL, unit_time = NULL, name_ts = NULL, name_time = NULL, process = NULL)

Arguments

- n

An integer indicating the number of observations to generate.

- model

A ts.model or simts object containing one of the allowed models.

- start

A numeric value giving the time of the first observation. The default value is 0.

- end

A numeric value giving the time of the last observation. The default value is NULL.

- freq

A numeric value giving the rate/frequency at which the time series is sampled. The default value is 1.

- unit_ts

A string containing the unit of measure of the time series. The default value is NULL.

- unit_time

A string containing the unit of measure of time. The default value is NULL.

- name_ts

A string providing an identifier for the time series data. The default value is NULL.

- name_time

A string providing an identifier for the time. The default value is NULL.

- process

A vector containing the model name of each column in the generated data, where the last name refers to the sum of the preceding components. The default value is NULL.

Value

An lts object with the following attributes:

- start

The time of the first observation.

- end

The time of the last observation.

- freq

Numeric representation of the sampling frequency/rate.

- unit

A string reporting the unit of measurement.

- name

Name of the generated dataset.

- process

A vector containing the model names of the decomposed and combined processes.
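To illustrate the column layout that the process attribute describes (one column per latent component, with the final column holding their sum), the following base-R sketch builds such a matrix by hand for AR1(phi = 0.3, sigma2 = 1) + WN(sigma2 = 1). It is a hypothetical illustration, not the simts implementation: the function name sim_components, the column labels "AR1", "WN" and "Sum", and the choice to start the AR(1) recursion at its first innovation are all assumptions.

```r
# Hypothetical sketch, not the simts implementation: simulate each latent
# component of AR1(phi = 0.3, sigma2 = 1) + WN(sigma2 = 1) separately and
# store them column-wise, with the last column holding their sum.
sim_components <- function(n, phi = 0.3, sigma2_ar = 1, sigma2_wn = 1) {
  # AR(1) recursion X_t = phi * X_{t-1} + e_t, started (by assumption) at X_1 = e_1
  e <- rnorm(n, mean = 0, sd = sqrt(sigma2_ar))
  ar1 <- numeric(n)
  ar1[1] <- e[1]
  for (t in seq_len(n)[-1]) {
    ar1[t] <- phi * ar1[t - 1] + e[t]
  }
  # Independent white noise with variance sigma2_wn
  wn <- rnorm(n, mean = 0, sd = sqrt(sigma2_wn))
  out <- cbind(ar1, wn, ar1 + wn)
  # Assumed labels: one name per column, the last one marks the sum
  colnames(out) <- c("AR1", "WN", "Sum")
  out
}

set.seed(123)
x <- sim_components(200)
head(x)
```

Keeping the components alongside their sum lets each latent process be inspected on its own while the last column is the observed series.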

Details

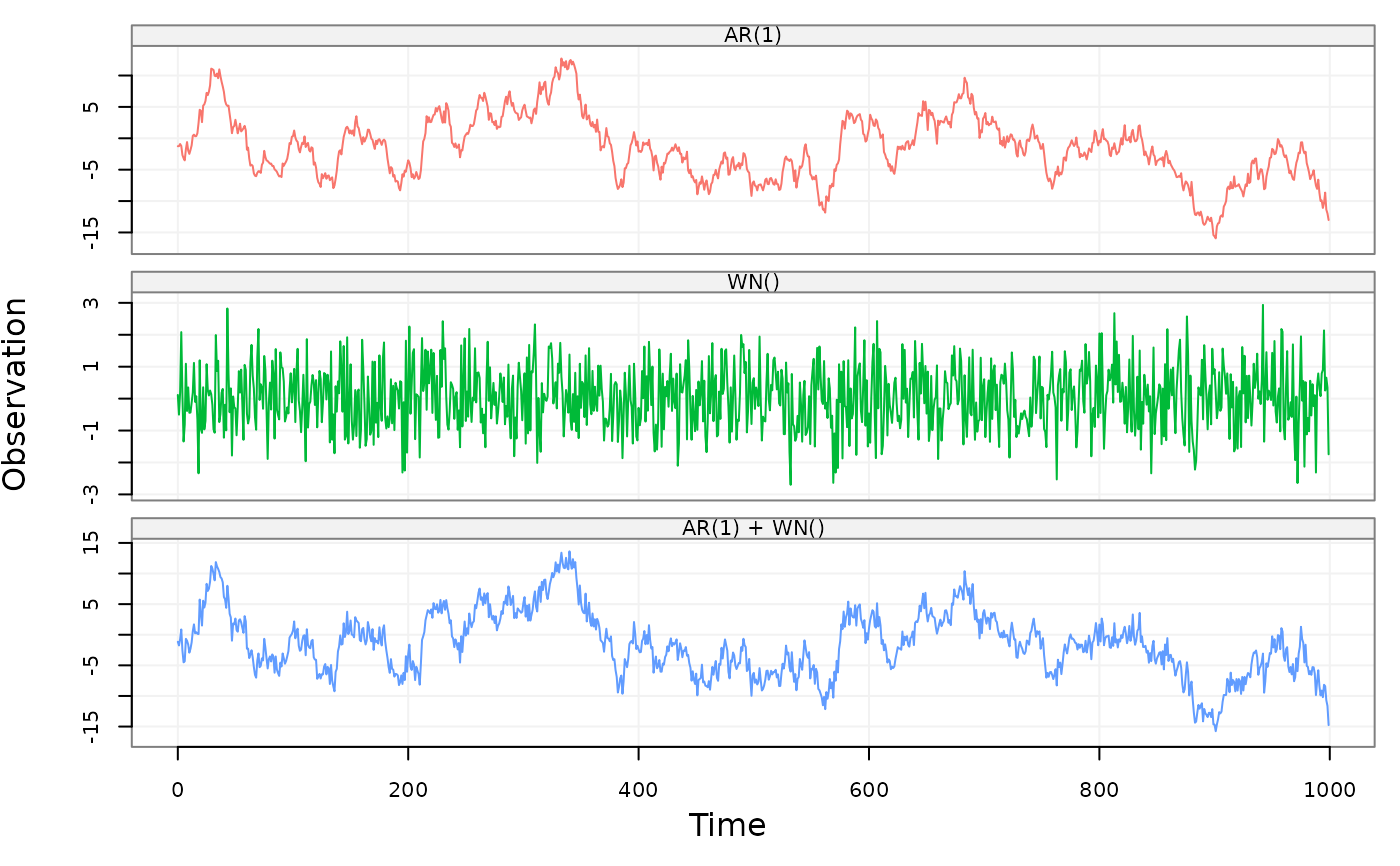

This function accepts either a ts.model object (e.g., AR1(phi = .3, sigma2 = 1) + WN(sigma2 = 1)) or a simts object.
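A minimal usage sketch, assuming the simts package is installed and provides gen_lts() together with the AR1() and WN() model constructors named in the sentence above. The sample size, frequency, seed, and name strings are arbitrary illustrative values.

```r
library(simts)

# Latent model from the Details section: AR(1) plus white noise
model <- AR1(phi = 0.3, sigma2 = 1) + WN(sigma2 = 1)

set.seed(2024)
# Simulate 1000 observations sampled at frequency 100 (illustrative values)
x <- gen_lts(n = 1000, model = model, start = 0, freq = 100,
             name_ts = "Simulated signal", name_time = "Time")

# Inspect the sampling frequency stored as an attribute of the result
attr(x, "freq")
```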